Chapter 6 On Uncertainty

6.1 Introduction

Fitting a model to a set of data involves searching for parameter estimates that optimize the relationship between the observed data and the predictions of the model. The model parameter estimates are taken to represent properties of the population in which we are interested. While it should be possible to find optimum parameter values in each situation, it remains the case that whatever data is used constitutes only one set of samples from the population. Assuming it were possible to take different, independent, samples of the same kinds of data from the same population, if they were then analysed independently, they would very likely lead to at least slightly different parameter estimates. Thus, the exact value of the estimated parameters is not really the most important issue when fitting a model to data, rather, we want to know how repeatable such estimates are likely to be if we were to have the luxury of multiple independent samples. That is, the parameters are just estimates and we want to know how confident we can be in those estimates. For example, it is common that highly variable data usually leads to each model parameter potentially having a wide distribution of plausible estimates, typically they would be expected to have wide confidence intervals.

In this chapter we will explore alternative ways available for characterizing at least a proportion of the uncertainty inherent in any modelling situation. While there can be many sources of potential uncertainty that can act to hamper our capacity to manage natural resources, only some of these can be usefully investigated here. Some sources of uncertainty influence the variability of data collected, other sources can influence the type of data available.

6.1.1 Types of Uncertainty

There are various ways of describing the different types of uncertainty that can influence parameter estimates from fisheries models and thus, how such estimates can be used. These are often called sources of error, usually in the sense of residual error rather than as a mistake having been made. Unfortunately, the term “error”, as in residual error, has the potential to lead to confusion, so it is best to use the term “uncertainty”. Although as long as you are aware of the issue it should not be a problem for you.

Francis & Shotton (1997) listed six different types of uncertainty relating to natural resource assessment and management, while Kell et al (2005) following Rosenberg and Restrepo (1994), contract these to five. Alternatively, they can all be summarized under four headings (Haddon, 2011), some with sub-headings, as follows:

Process uncertainty: underlying natural random variation in demographic rates (such as growth, inter-annual mean recruitment, inter-annual natural mortality ) and other biological properties and processes.

Observation uncertainty: sampling error and measurement error reflect the fact that samples are meant to represent a population but remain only a sample. Inadequate or non-representative data collection would contribute to observational uncertainty as would any mistakes or the deliberate misreporting or lack of reporting of data, which is not unknown in the world of fisheries statistics.

Model uncertainty: relates to the capacity of the selected model structure to describe the dynamics of the system under study:

- Different structural models may provide different answers and uncertainty exists over which is the better representation of nature.

- The selection of the residual error structure for a given process is a special case of model uncertainty that can have important implications for parameter estimates.

- Estimation uncertainty is an outcome of the model structure having interactions or correlations between parameters such that slightly different parameter sets can lead to identical values of log-likelihood (to the limit of precision used in the numerical model fitting).

- Different structural models may provide different answers and uncertainty exists over which is the better representation of nature.

Implementation uncertainty: where the effects or extent of management actions may differ from those intended. Poorly defined management options may lead to implementation uncertainty.

- Institutional uncertainty: inadequately defined management objectives leading to unworkable management.

- Time-lags between making decisions and implementing them can lead to greater variation. Assessments are often made a year or more after fisheries data is collected and management decisions often take another year or more to be implemented.

- Institutional uncertainty: inadequately defined management objectives leading to unworkable management.

Here we are especially concerned with process, observational, and model uncertainty. Each can influence model parameter estimates and model outcomes and predictions. Implementation uncertainty is more about how the outcomes from a model or models are used when managing natural resources. This can have important effects on the efficiency of different management strategies based on the outcomes of stock assessment models but it does not influence those immediate outcomes (Dichmont et al, 2006).

Model uncertainty can be both quantitative and qualitative. Thus, different models that use exactly the same data and residual structures could be compared to one another and the best fitting selected. Such models may be considered to be related but different. However, where models are incommensurate, for example, when different residual error structures are used with the same structural model, they can each generate an optimum fit and model selection must be based on factors other than just quality of fit. Such models do not grade smoothly into each other but constitute qualitatively and quantitatively different descriptions of the system under study. Model uncertainty is one of the driving forces behind model selection (Burnham and Anderson, 2002). Even where there is only one model developed invariably this has been implicitly selected from many possible models. Working with more than one type of model in a given situation (perhaps a surplus production model along with a fully age-structured model) can often lead to insights that using one model alone would be missed. Sadly, with decreasing resources generally made available for stock assessment modelling, working with more than one model is now an increasingly rare option.

Model and implementation uncertainty are both important in the management of natural resources. We ahve already compared the outcomes from alternative models, however, here we are going to concentrate on methods that allow us to characterize the confidence with which we can accept the various parameter estimates and other model outputs obtained from given models that have significance for management advice.

There are a number of strategies available for characterizing the uncertainty around any parameter estimates or other model outputs. Some methods focus on data issues while other methods focus on the potential distributions of plausible parameter values given the available data. We will consider four different approaches:

bootstrapping, focusses on the uncertainty inherent in the data samples and operates by examining the implications for the parameter estimates had somewhat different samples been taken.

asymptotic errors use a variance-covariance matrix between parameter estimates to describe the uncertainty around those parameter values,

likelihood profiles are constructed on parameters of primary interest to obtain more specific distributions of each parameter, and finally,

Bayesian marginal posteriors characterize the uncertainty inherent in estimates of model parameters and outputs.

Each of these approaches permit the characterization of the uncertainty of both parameters and model outputs and provide for the identification of selected central percentile ranges around each parameter’s mean or expectation (e.g. \(\bar{x}\pm 90\%{CI}\), in some case may not be symmetric).

We will use a simple surplus production model to illustrate all of these methods, although they should be applicable more generally.

6.1.2 The Example Model

This is the same model as described in the section on fitting a dynamic model using log-normal likelihoods in the chapter on Model Parameter Estimation. We will use this in many of the examples in this chapter and it has relatively simple population dynamics, Equ(6.1).

\[\begin{equation} \begin{split} {B_{t=0}} &= {B_{init}} \\ {B_{t+1}} &= {B_t}+r{B_t}\left( 1-\frac{{B_{t}}}{K} \right)-{C_t} \end{split} \tag{6.1} \end{equation}\]

where \(B_t\) represents the available biomass in year \(t\), with \(B_{init}\) being the biomass in the first year of available data (\(t=0\)), taking account of any initial depletion when records begin. \(r\) is the intrinsic rate of natural increase (a population growth rate term), \(K\) is the carrying capacity or unfished available biomass, commonly referred to elsewhere as \(B_0\) (not to be confused with \(B_{init}\)). Finally, \(C_t\) is the total catch taken in year \(t\). To connect these dynamics to observations from the fishery other than the catches we use an index of relative abundance (\(I_t\), often catch-per-unit-effort or cpue, but could be a survey index).

\[\begin{equation} {{I}_{t}}=\frac{{{C}_{t}}}{{{E}_{t}}}=q{{B}_{t}} \;\;\; or \;\;\; {{C}_{t}}=q{{E}_{t}}{{B}_{t}} \tag{6.2} \end{equation}\]

where \(I_t\) is the catch-rate (CPUE or cpue ) in year \(t\), \(E_t\) is the effort in year \(t\), and \(q\) is known as the catchability coefficient (which can also change through time but we have assumed it to be constant). Because the \(q\) merely scales the stock biomass against catches, we will use the closed form of estimating catchability to reduce the number of parameters requiring estimation (Polacheck et al, 1993).

\[\begin{equation} q={{e}^{\frac{1}{n}\sum{\log\left( \frac{{{I}_{t}}}{{{{\hat{B}}}_{t}}} \right)}}}=\exp \left( \frac{1}{n}\sum{\log\left( \frac{{{I}_{t}}}{{{{\hat{B}}}_{t}}} \right)} \right) \tag{6.3} \end{equation}\]

We will use the MQMF abdat data set and fit the model here, then we can examine the uncertainty in that model fit in the following sections. We will be fitting the model by minimizing the negative log-likelihood with residuals based upon the Log-Normal distribution. Simplified these become:

\[\begin{equation} -LL(y|\theta ,\hat{\sigma },I)=\frac{n}{2}\left( \log{\left( 2\pi \right)}+2\log{\left( {\hat{\sigma }} \right)} + 1 \right)+\sum\limits_{i=1}^{n}{\log{\left( {I_t} \right)}} \tag{6.4} \end{equation}\]

where \(\theta\) is the vector of parameters (\(r, K\), and \(B_{init}\)), the \(I_t\) are the \(n\) observed cpue values across the years \(t\), and the maximum likelihood estimate of \(\hat{\sigma}^2\) is defined as:

\[\begin{equation} {{\hat{\sigma }}^{2}}=\sum\limits_{t=1}^{n}{\frac{{{\left( \log{({I_t} )}-\log{( {{{\hat{I}}}_{t}} )} \right)}^{2}}}{n}} \tag{6.5} \end{equation}\]

Note the division by \(n\) and not \(n-1\). Given the only non-constant values are the \({\log}(\hat{I_t})\), the result obtained from using maximum likelihood will be the same as when using a least-squared residual approach (as long as the observed and predicted cpue are both log-transformed). Nevertheless, using maximum likelihood methods has advantages over sum-of-squares when we are examining uncertainty. Strictly, the \(\hat\sigma\) value is also a model parameter but we are treating it specially here just to illustrate the equivalence with least-squared approaches.

#Fit a surplus production model to abdat fisheries data

data(abdat); logce <- log(abdat$cpue)

param <- log(c(0.42,9400,3400,0.05))

label=c("r","K","Binit","sigma") # simpspm returns

bestmod <- nlm(f=negLL,p=param,funk=simpspm,indat=abdat,logobs=logce)

outfit(bestmod,title="SP-Model",parnames=label) #backtransforms # nlm solution: SP-Model

# minimum : -41.37511

# iterations : 20

# code : 2 >1 iterates in tolerance, probably solution

# par gradient transpar

# r -0.9429555 6.707523e-06 0.38948

# K 9.1191569 -9.225209e-05 9128.50173

# Binit 8.1271026 1.059296e-04 3384.97779

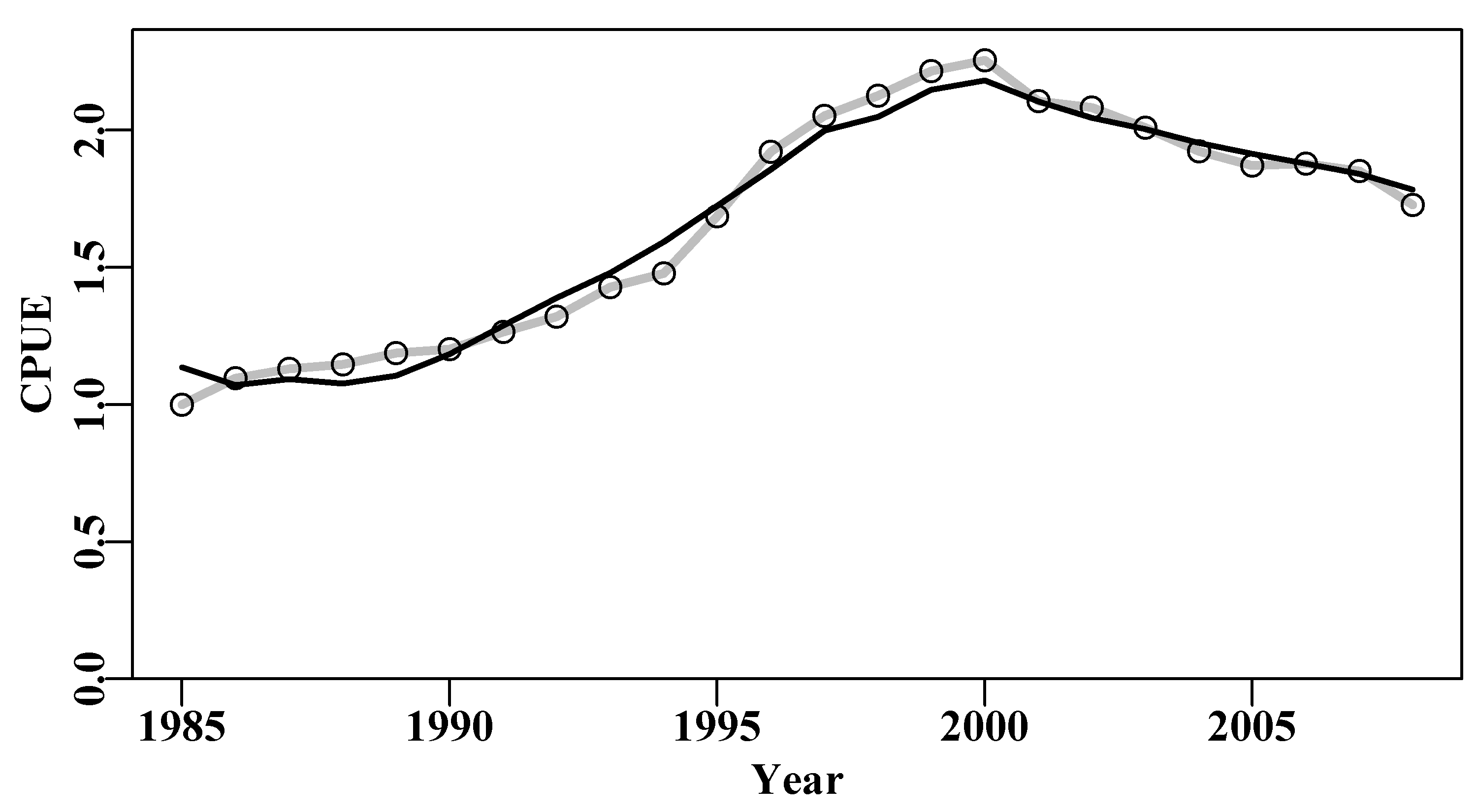

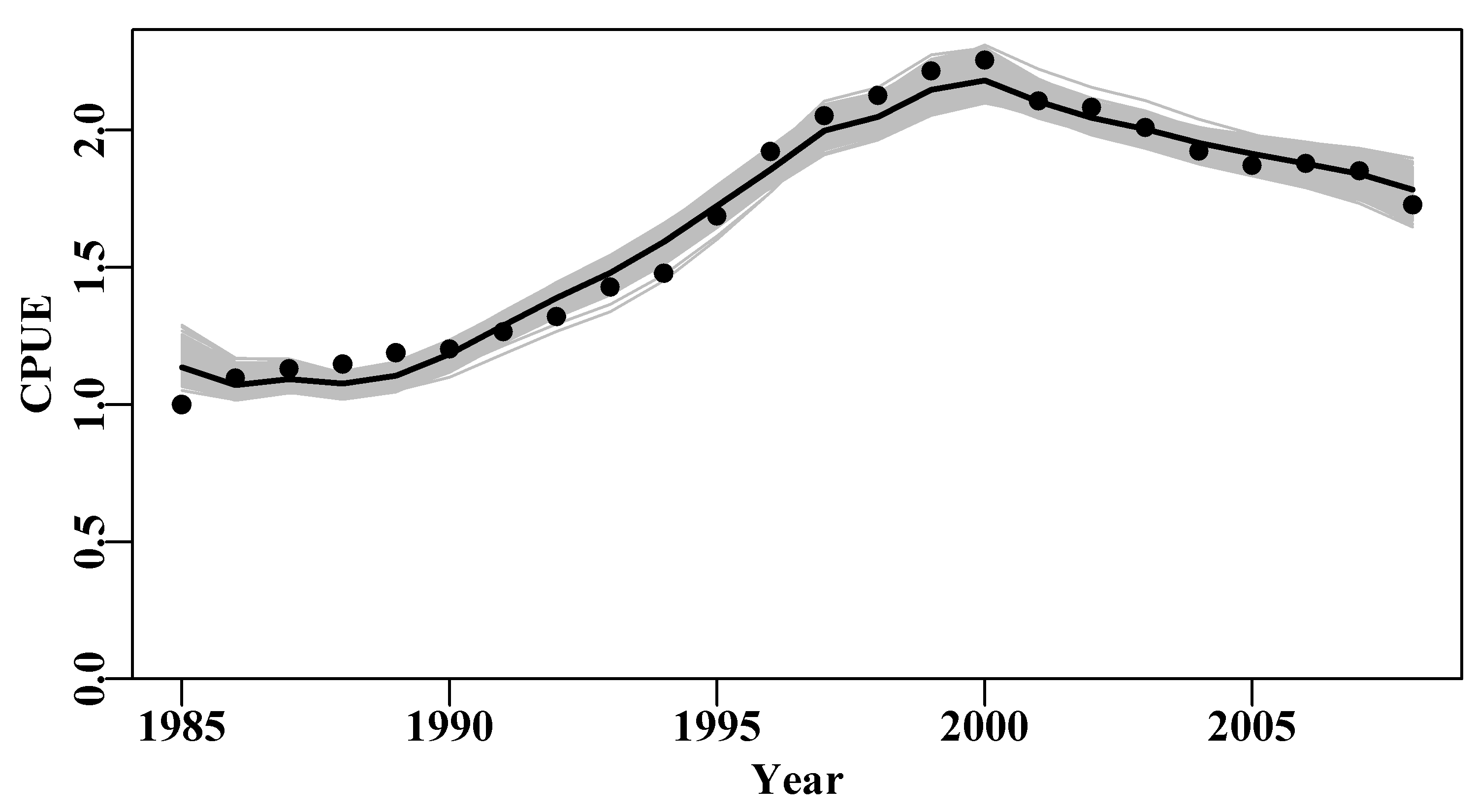

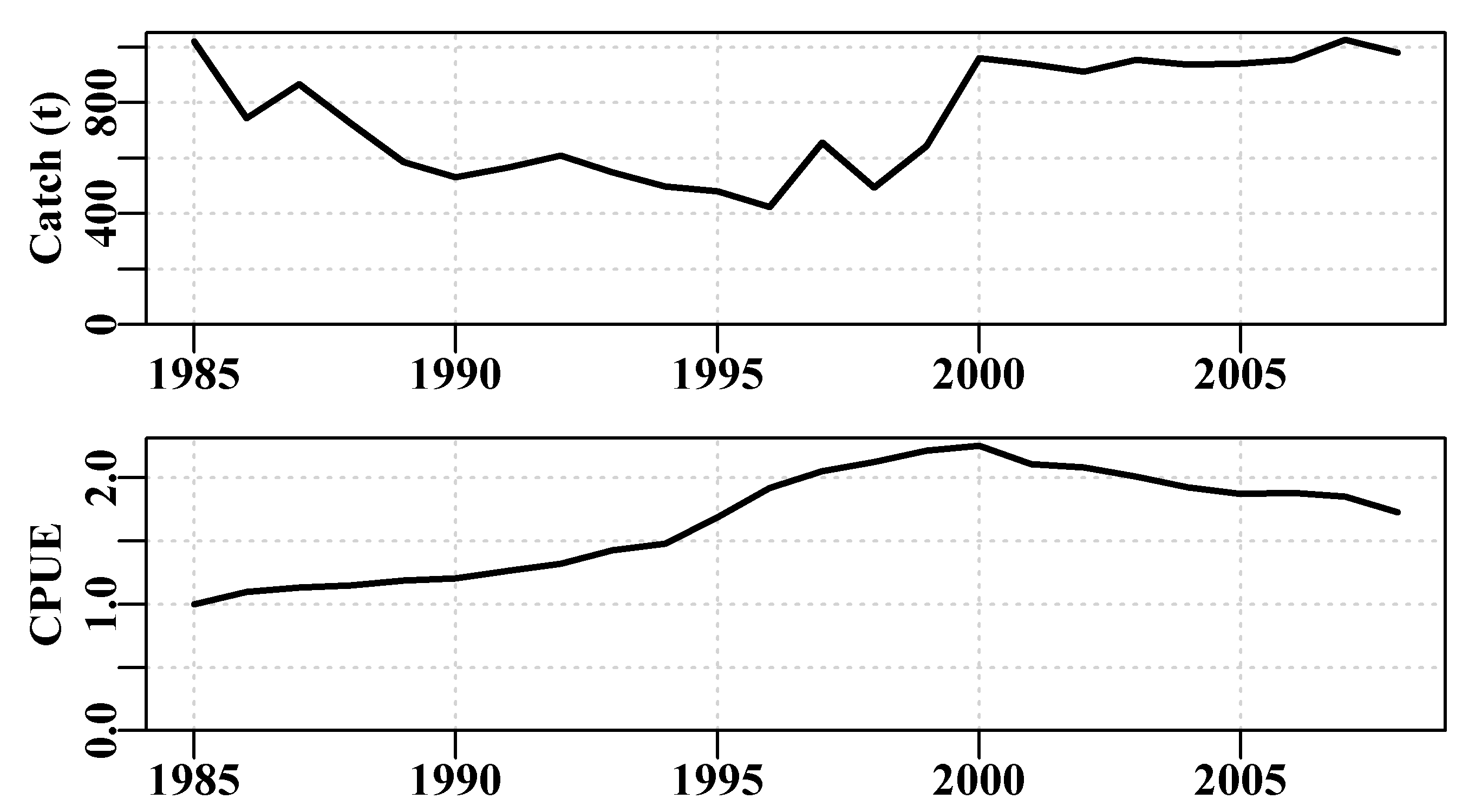

# sigma -3.1429030 -8.161433e-07 0.04316The model fit can be visualized as in Figure(6.1). The simple dynamics of the Schaefer model appear to provide a plausible description of these observed abalone catch-rate data. In reality, there were changes to legal minimum sizes, the introduction of zonation within the fishery, and other important changes across the time-series, so this result can only be considered an approximation at very best and should only be considered as providing an example of the methodology. The log-normal residuals are observed/predicted, and if multiplied by the predicted would obviously return the observed (grey-line). This is illustrated here because we are about to use this simple relationship when bootstrapping the data.

The Maximum Sustainable Yield (MSY) can be estimated from the Schaefer model simply as \(\text{MSY} = rK/4\), which means this optimal fit implies an \(\text{MSY} = 888.842t\). The two questions we will attempt to answer in each of the following sections will be: what is the plausible spread of the predicted cpue around the observed data in Figure(6.1)? and, what would be the 90th percentile confidence bounds around the mean MSY estimate?

#plot the abdat data and the optimum sp-model fit Fig 6.1

predce <- exp(simpspm(bestmod$estimate,abdat))

optresid <- abdat[,"cpue"]/predce #multiply by predce for obsce

ymax <- getmax(c(predce,abdat$cpue))

plot1(abdat$year,(predce*optresid),type="l",maxy=ymax,cex=0.9,

ylab="CPUE",xlab="Year",lwd=3,col="grey",lty=1)

points(abdat$year,abdat$cpue,pch=1,col=1,cex=1.1)

lines(abdat$year,predce,lwd=2,col=1) # best fit line

Figure 6.1: The optimum fit of the Schaefer surplus production model to the abdat data set plotted in linear-space (solid red-line). The grey line passes through the data points to clarify the difference with the predicted line.

6.2 Bootstrapping

Data sampled from a population are treated as being (assumed to be) representative of that population and of the underlying probability density distribution of expected sample values. This is a very important assumption first suggested by Efron (1979). He asked the question: when a sample contains or is all of the available information about a population, why not proceed as if the sample really is the population for purposes of estimating the sampling distribution of the test statistic? Thus, given an original sample of \(n\) observations, bootstrap samples would be random samples of \(n\) observations taken from the original sample with replacement. bootstrap samples (i.e., random re-sampling from the sample data values with replacement) are assumed to approximate the distribution of values that would have arisen from repeatedly sampling the original sampled population. Each of these bootstrapped samples is treated as an independent random sample from the original population. This resampling with replacement appears counter-intuitive to some, but can be used to fit standard errors, percentile confidence intervals, and to test hypotheses. The name bootstrap is reported to derive from the story The Adventures of Baron Munchausen, in which the Baron escaped drowning by pulling himself up by his own bootstraps and thereby escaping from a water well (Efron and Tibshirani, 1993).

Efron (1979) first suggested bootstrapping as a practical procedure and Bickel and Freedman (1981) provided a demonstration of the asymptotic consistency of the bootstrap (convergent behaviour as the number of bootstrap samples increased). Given this demonstration, the bootstrap approach has been applied to numerous standard applications, such as multiple regression (Freedman, 1981; ter Braak, 1992) and stratified sampling (Bickel and Freedman, 1984, who found a limitation). Efron eventually converted the material he had been teaching to senior-level students at Stanford into a general summary of progress (Efron and Tibshirani, 1993).

6.2.1 Empirical Probability Density Distributions

The assumption is that given a sample from a population, the non-parametric, maximum likelihood estimate of the population’s probability density distribution is the sample itself. That is, if the sample consists of \(n\) observations \((x_1, x_2, x_3,..., x_n)\), the maximum likelihood, non parametric estimator of the probability density distribution is the function that places probability mass \(1/n\) on each of the \(n\) observations \(x_i\). It must be emphasized that this is not saying that all values have equal likelihood, instead, it implies that each observation has equal likelihood of occurring, although there may be multiple observations having the same value (make sure you are clear about this distinction before proceeding!). If the population variable being sampled has a modal value then one expects, some of the time, to obtain the same or similar values near that mode more often that values out at the extremes of the samples distribution.

Bootstrapping consists of applying Monte Carlo procedures, sampling with replacement but from the original sample itself, as if it were a theoretical statistical distribution (analogous to the Normal, Gamma, and Beta distributions ). Sampling with replacement is consistent with a population that is essentially infinite. Therefore, we are treating the sample as representing the total population.

In summary, bootstrap methods are used to estimate the uncertainty around the value of a parameter or model output. This is done by summarizing many bootstrap parameter estimates from replicate samples derived from replacing the true population sample by one estimated from the original sample from the population.

6.3 A Simple Bootstrap Example

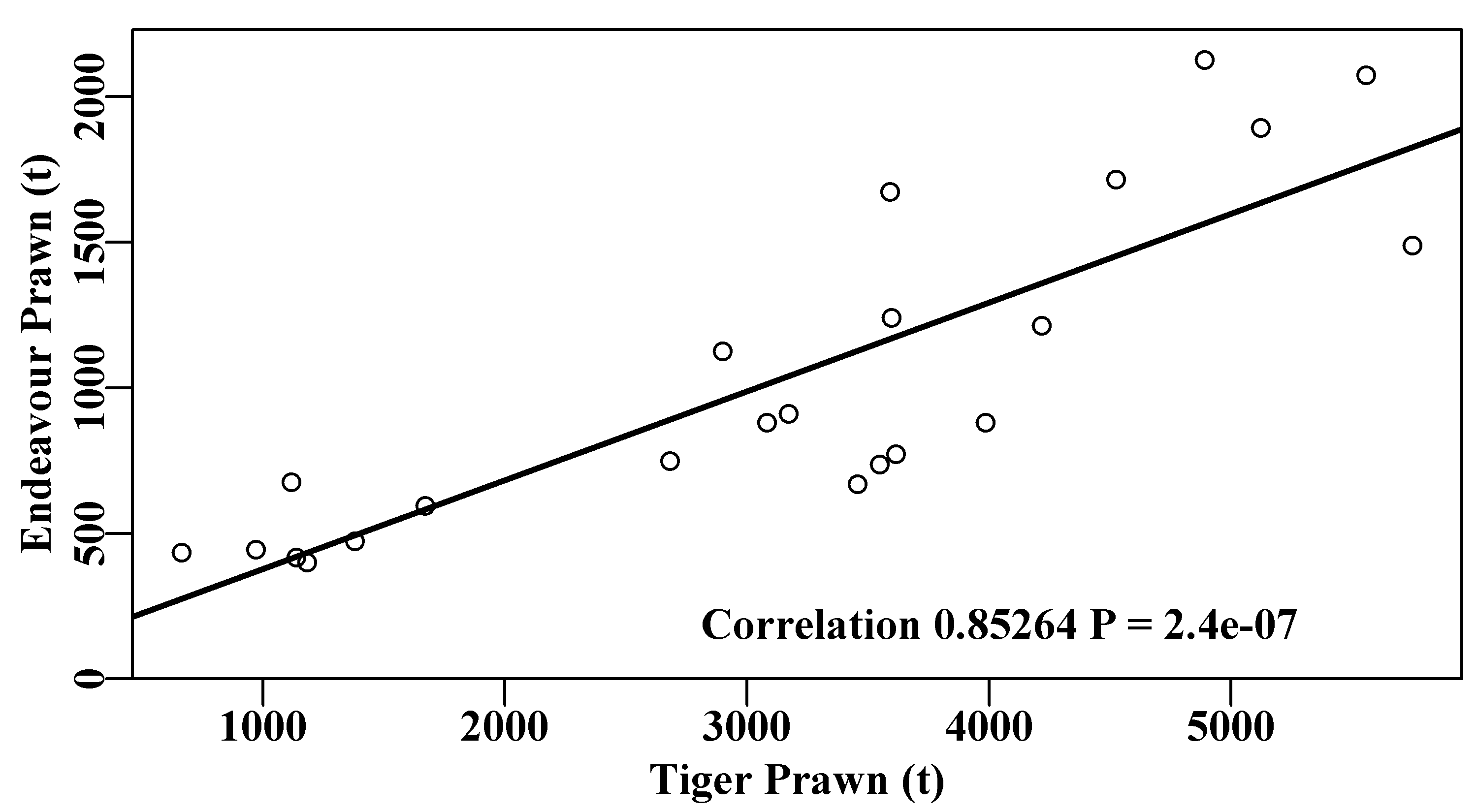

To gain an appreciation of how to implement a bootstrap in R it is sensible to start with a simple example. The Australian Northern prawn fishery, within the Gulf of Carpentaria and across to Joseph Bonaparte Bay, along the top right hand side of the continent, is a mixed fishery that captures multiple species of prawn (Dichmont et al, 2006; Robins and Somers, 1994). We will use an example of the catches of tiger prawns (Penaeus semisulcatus and P. esculentus) and endeavour prawns (Metapenaeus endeavouri and M. ensis) taken between 1970 to 1992. There appears to be a correlation between those catches, Figure(6.2), but there is a good deal of scatter in the data. The endeavour prawns are invariably a bycatch in the much more valuable tiger prawn fishery, and this is reflected in their relative catch quantities.

The prawn catch data are relatively noisy, which is not unexpected with prawn catches. That endeavour and tiger prawn catches are correlated should also not be surprising. The endeavour prawns are generally taken as bycatch in the more valuable tiger prawn fishery, so one would expect the total tiger prawn catch to have some relationship with the total catch of endeavour prawns. If, on the other hand, you were to plot the banana prawn catch relative to the tiger prawn catch no such relationship would be expected because these are two almost independent fisheries (mostly in the same areas) but one fished during daytime the other at night. Exploring such relationships in mixed fisheries can often leads to hypotheses concerning the possibility of interactions between species or between individual fisheries.

#regression between catches of NPF prawn species Fig 6.2

data(npf)

model <- lm(endeavour ~ tiger,data=npf)

plot1(npf$tiger,npf$endeavour,type="p",xlab="Tiger Prawn (t)",

ylab="Endeavour Prawn (t)",cex=0.9)

abline(model,col=1,lwd=2)

correl <- sqrt(summary(model)$r.squared)

pval <- summary(model)$coefficients[2,4]

label <- paste0("Correlation ",round(correl,5)," P = ",round(pval,8))

text(2700,180,label,cex=1.0,font=7,pos=4)

Figure 6.2: The positive correlation between the catches of endeavour and tiger prawns in the Australian Northern Prawn Fishery between 1970 - 1992 (data from Robins and Somers, 1994).

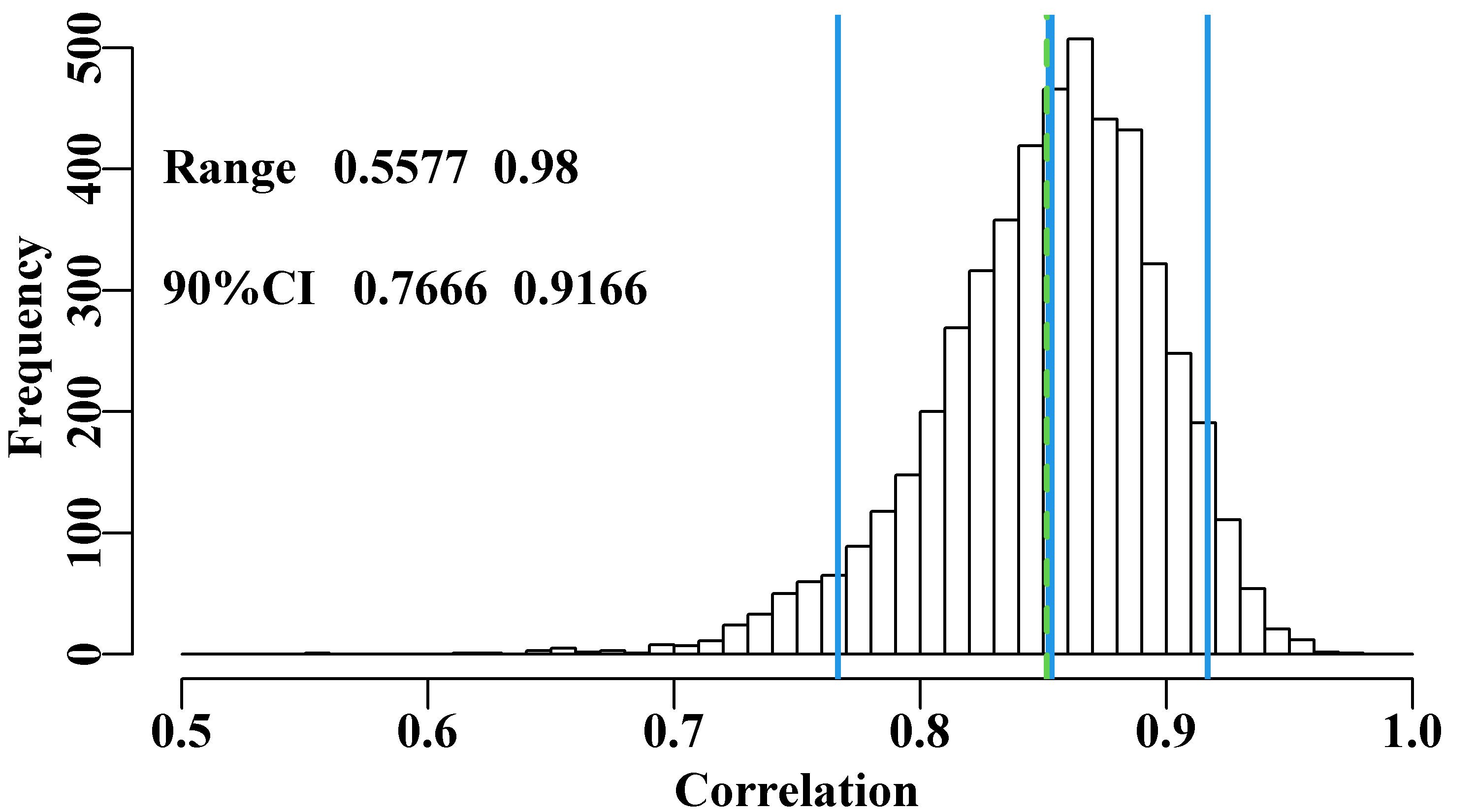

While the regression is highly significant (\(P < 1e^{-06}\)) the variability of the prawn catches means it is difficult to know with what confidence we can believe the correlation. Estimating confidence intervals around correlation coefficients is not usually straight-forward, fortunately, using bootstrapping, this can be done easily. We might take 5000 bootstrap samples of the 23 pairs of data from the original data set and each time calculate the correlation coefficient. Once finished we can calculate the mean and various quantiles. In this case we are not bootstrapping single values but pairs of values; we have to take pairs to maintain any intrinsic correlation. Re-sampling pairs can be done in R by first sampling the positions along each vector of data, with replacement, to identify which pairs to take for each bootstrap sample.

# 5000 bootstrap estimates of correlation coefficient Fig 6.3

set.seed(12321) # better to use a less obvious seed, if at all

N <- 5000 # number of bootstrap samples

result <- numeric(N) #a vector to store 5000 correlations

for (i in 1:N) { #sample index from 1:23 with replacement

pick <- sample(1:23,23,replace=TRUE) #sample is an R function

result[i] <- cor(npf$tiger[pick],npf$endeavour[pick])

}

rge <- range(result) # store the range of results

CI <- quants(result) # calculate quantiles; 90%CI = 5% and 95%

restrim <- result[result > 0] #remove possible -ve values for plot

parset(cex=1.0) # set up a plot window and draw a histogram

bins <- seq(trunc(range(restrim)[1]*10)/10,1.0,0.01)

outh <- hist(restrim,breaks=bins,main="",col=0,xlab="Correlation")

abline(v=c(correl,mean(result)),col=c(4,3),lwd=c(3,2),lty=c(1,2))

abline(v=CI[c(2,4)],col=4,lwd=2) # and 90% confidence intervals

text(0.48,400,makelabel("Range ",rge,sep=" ",sigdig=4),font=7,pos=4)

label <- makelabel("90%CI ",CI[c(2,4)],sep=" ",sigdig=4)

text(0.48,300,label,cex=1.0,font=7,pos=4)

Figure 6.3: 5000 bootstrap estimates of the correlation between Endeavour and Tiger prawn catches with the original mean in dashed green and bootstrap mean and 90% CI in solid blue. Possible negative correlations have been removed for plotting purposes (though none occurred).

While the resulting distribution of correlation values is certainly skewed to the left, we can be confident that the high correlation in the original data is a fair representation of the available data on the relationship.

6.4 Bootstrapping Time-Series Data

Even though the prawn catches in the previous example were taken across a series of years the particular year in which the data arrived was not relevant to the correlation between them. Thus, we could just bootstrap the data pairs and continue with the analysis. However, when bootstrapping information concerning the population dynamics of a species it is necessary to maintain the time-series nature of the data, where the values (of numbers or biomass) in one year depend in some way on the values in the previous year. For example, in a surplus production model the order in which the observed data enter the analysis is a vital aspect of the stock dynamics, so naively bootstrapping data pairs is not a valid or sensible option. The solution we adopt is that we obtain an optimum model fit with its predicted cpue time-series and we then bootstrap the individual residuals at each point. In each cycle the bootstrap sampled residuals are applied to the optimum predicted values so as to generate a new bootstrapped ‘observed’ data series, which is then refitted. you will remember that the observed values can be derived from multiplying the optimum predicted cpue values by the log-normal residuals, Figure(6.1). Given the optimum solution from the original data the Log-Normal residual for a particular year \(t\) is:

\[\begin{equation} resid_t = {\frac{I_t}{\hat{I_t}}} = \exp\left(\log(I_t)-\log(\hat{I_t})\right) \tag{6.6} \end{equation}\]

where \(I_t\) refers to the observed cpue in year \(t\) and \(\hat{I_t}\) is the optimum predicted cpue in year \(t\). An optimum solution would imply a time-series of optimum predicted cpue values and a time-series of associated log-normal residuals. Given we are using multiplicative log-normal residuals, once we take a bootstrap sample of the residuals (random samples with replacement) we would need to multiple the optimum predicted cpue time-series by the bootstrap series of residuals. Log-Normal residuals would be expected to center around 1.0 with lower values constrained by zero and upper values not constrained; hence the skew that can occur with Log-Normal distributions.

\[\begin{equation} {I_t}^* = {\hat{I_t}} \times \left({\frac{I_t}{\hat{I_t}}}\right)^* = \exp \left( \log{(\hat{I_t})} + \left(\log{(I_t)}-\log{(\hat{I_t})}\right)^*\right) \tag{6.7} \end{equation}\]

where the \(*\) superscript denotes either a bootstrap value, as in \({I_t}^*\) or a bootstrap sample, as in \((I_t/{\hat{I_t}})^*\). Had we been using simple additive normal random residuals then we would have used the equation on the right but without the log-transformation and exponentiation. For the surplus production model the log-normal version can be implemented thus:

# fitting Schaefer model with log-normal residuals with 24 years

data(abdat); logce <- log(abdat$cpue) # of abalone fisheries data

param <- log(c(r= 0.42,K=9400,Binit=3400,sigma=0.05)) #log values

bestmod <- nlm(f=negLL,p=param,funk=simpspm,indat=abdat,logobs=logce)

optpar <- bestmod$estimate # these are still log-transformed

predce <- exp(simpspm(optpar,abdat)) #linear-scale pred cpue

optres <- abdat[,"cpue"]/predce # optimum log-normal residual

optmsy <- exp(optpar[1])*exp(optpar[2])/4

sampn <- length(optres) # number of residuals and of years | year | catch | cpue | predce | optres | year | catch | cpue | predce | optres |

|---|---|---|---|---|---|---|---|---|---|

| 1985 | 1020 | 1.000 | 1.135 | 0.881 | 1997 | 655 | 2.051 | 1.998 | 1.027 |

| 1986 | 743 | 1.096 | 1.071 | 1.023 | 1998 | 494 | 2.124 | 2.049 | 1.037 |

| 1987 | 867 | 1.130 | 1.093 | 1.034 | 1999 | 644 | 2.215 | 2.147 | 1.032 |

| 1988 | 724 | 1.147 | 1.076 | 1.066 | 2000 | 960 | 2.253 | 2.180 | 1.033 |

| 1989 | 586 | 1.187 | 1.105 | 1.075 | 2001 | 938 | 2.105 | 2.103 | 1.001 |

| 1990 | 532 | 1.202 | 1.183 | 1.016 | 2002 | 911 | 2.082 | 2.044 | 1.018 |

| 1991 | 567 | 1.265 | 1.288 | 0.983 | 2003 | 955 | 2.009 | 2.003 | 1.003 |

| 1992 | 609 | 1.320 | 1.388 | 0.951 | 2004 | 936 | 1.923 | 1.952 | 0.985 |

| 1993 | 548 | 1.428 | 1.479 | 0.966 | 2005 | 941 | 1.870 | 1.914 | 0.977 |

| 1994 | 498 | 1.477 | 1.593 | 0.927 | 2006 | 954 | 1.878 | 1.878 | 1.000 |

| 1995 | 480 | 1.685 | 1.724 | 0.978 | 2007 | 1027 | 1.850 | 1.840 | 1.005 |

| 1996 | 424 | 1.920 | 1.856 | 1.034 | 2008 | 980 | 1.727 | 1.782 | 0.969 |

Typically one would conduct at 1000 bootstrap samples as a minimum. Note we have set bootfish to a matrix rather than a data.frame. If you remove the as.matrix so that bootfish becomes a data.frame instead compare the time it takes to do that and see the advantage of using a matrix in computer-intensive work.

# 1000 bootstrap Schaefer model fits; takes a few seconds

start <- Sys.time() # use of as.matrix faster than using data.frame

bootfish <- as.matrix(abdat) # and avoid altering original data

N <- 1000; years <- abdat[,"year"] # need N x years matrices

columns <- c("r","K","Binit","sigma")

results <- matrix(0,nrow=N,ncol=sampn,dimnames=list(1:N,years))

bootcpue <- matrix(0,nrow=N,ncol=sampn,dimnames=list(1:N,years))

parboot <- matrix(0,nrow=N,ncol=4,dimnames=list(1:N,columns))

for (i in 1:N) { # fit the models and save solutions

bootcpue[i,] <- predce * sample(optres, sampn, replace=TRUE)

bootfish[,"cpue"] <- bootcpue[i,] #calc and save bootcpue

bootmod <- nlm(f=negLL,p=optpar,funk=simpspm,indat=bootfish,

logobs=log(bootfish[,"cpue"]))

parboot[i,] <- exp(bootmod$estimate) #now save parameters

results[i,] <- exp(simpspm(bootmod$estimate,abdat)) #and predce

}

cat("total time = ",Sys.time()-start, "seconds \n") # total time = 4.584487 secondsOn the computer I used when writing this, the bootstrap took about 4 seconds to run. That was made up of taking the bootstrap sample of 24 years and fitting the surplus production model to the sample, with each iteration taking about 0.004 seconds. This is worth knowing with any computer-intensive method that requires fitting models or calculating likelihoods many times over. Knowing the time expected to run the analysis assists in designing the scale of those analyses. The surplus production model takes very little time to fit, but if a complex age-structured model takes say 1 minute to fit then 1000 replicates (a minimum by modern standards, and more would invariably provide for more precise results) would take over 16 hours! There is still very much a place for optimizing the speed of code where possible; We will discuss ways of optimizing for speed using the Rcpp package later in this chapter when we discuss approximating Bayesian posterior distributions.

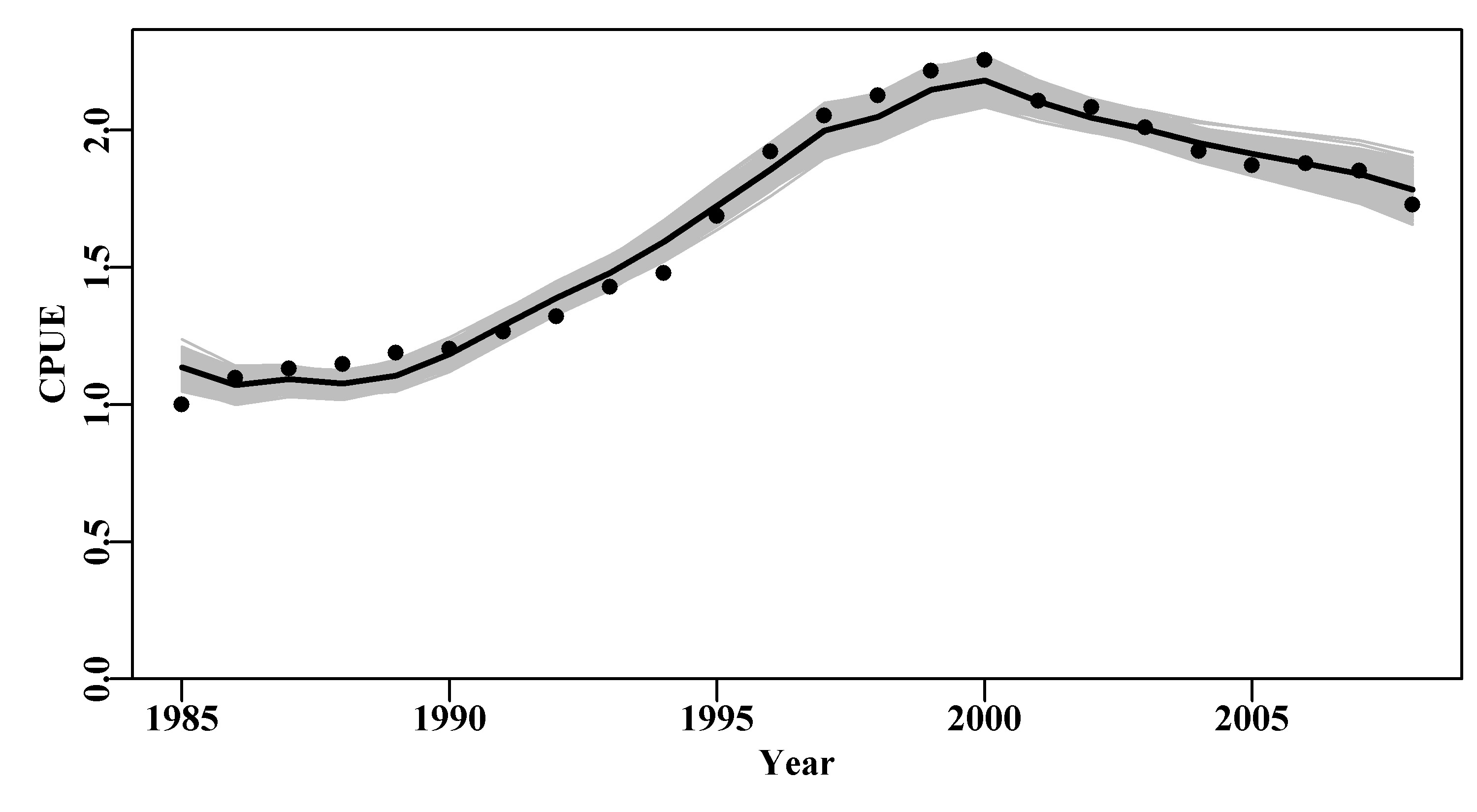

The bootstrap samples can usually be plotted to provide a visual impression of the quality of a model fit, Figure(6.4). It would be possible to apply the quants() function to the results matrix containing the bootstrap estimates of the optimum predicted cpue and thereby plot percentile confidence intervals within the grey bounds of the plot, but here the outcome is so tight that it would mostly just make the plot untidy.

The values in years 1988 and 1989 have the largest residuals and so can never be exceeded.

# bootstrap replicates in grey behind main plot Fig 6.4

plot1(abdat[,"year"],abdat[,"cpue"],type="n",xlab="Year",

ylab="CPUE") # type="n" just lays out an empty plot

for (i in 1:N) # ready to add the separate components

lines(abdat[,"year"],results[i,],lwd=1,col="grey")

points(abdat[,"year"],abdat[,"cpue"],pch=16,cex=1.0,col=1)

lines(abdat[,"year"],predce,lwd=2,col=1)

Figure 6.4: 1000 bootstrap estimates of the optimum predicted cpue from the abdat data set for an abalone fishery. Black points are the original data, the black line is the optimum predicted cpue from the original model fit, and the grey trajectories are the 1000 bootstrap estimates of the predicted cpue.

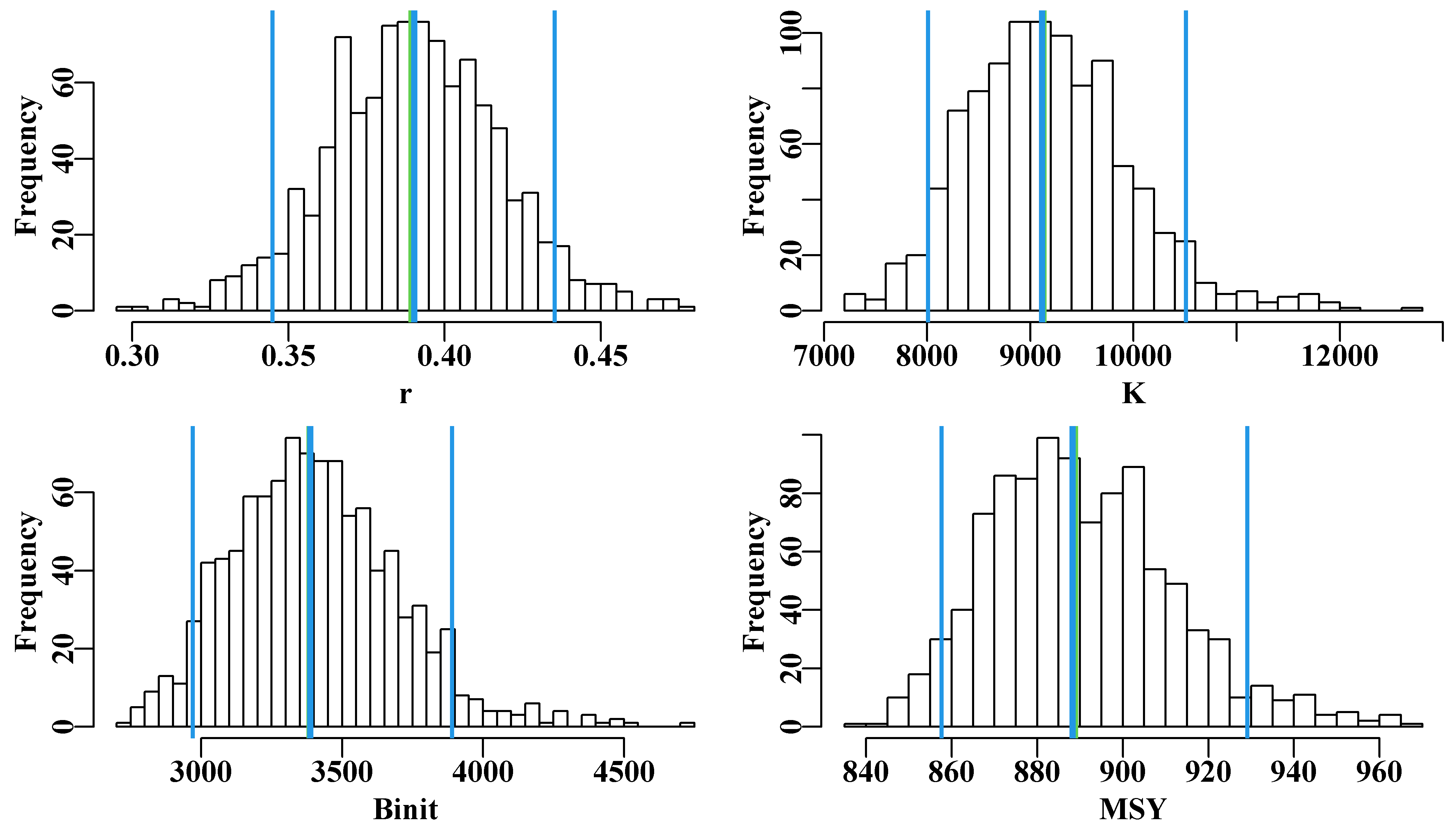

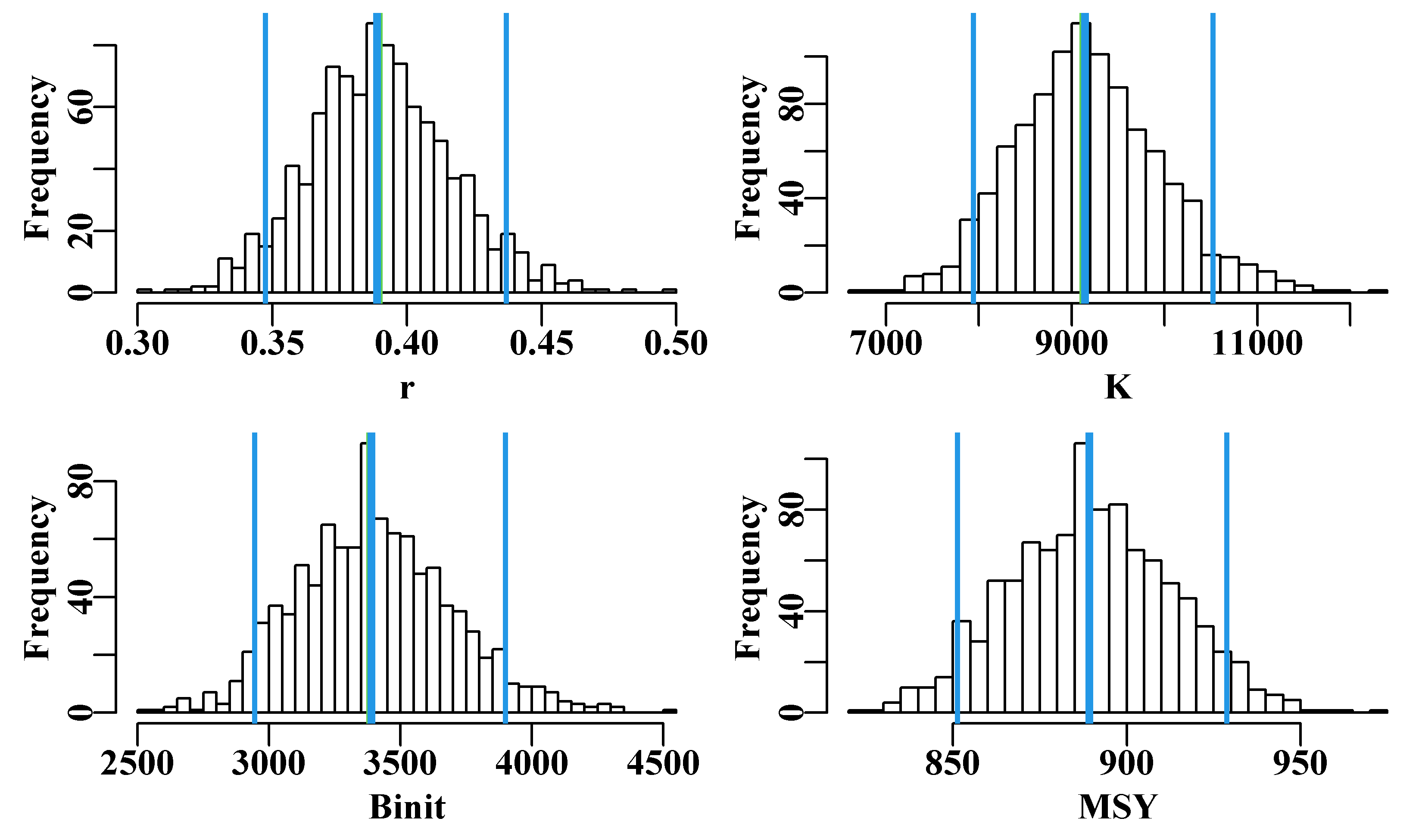

With 1000 replicates there remain some less well-defined parts in each plot, especially in the later years, for this reason one must be careful not just to plot an outline of the grey trajectories combined (perhaps defined using chull()), otherwise relatively thin areas of the predicted trajectory space may become un-noticed. Generally, doing 2000 - 5000 bootstraps may appear to be overkill, but to avoid actual gaps in the trajectory space then such numbers can be advantageous. Such diagrams can be helpful, but the principle findings relate to the model parameters and outputs, such as the MSY. We can use the bootstrap estimates of \(r\) and \(K\) to estimate the bootstrap estimates of MSY and all can be plotted as histograms and, using quants(), we can identify whatever percentile confidence bounds we wish. quants() defaults to extracting the 0.025, 0.05, 0.5, 0.95, and 0.975 quantiles (other ranges can be entered), allowing for the identification of the central 95% and 90% confidence bounds as well as the median.

#histograms of bootstrap parameters and model outputs Fig 6.5

dohist <- function(invect,nmvar,bins=30,bootres,avpar) { #adhoc

hist(invect[,nmvar],breaks=bins,main="",xlab=nmvar,col=0)

abline(v=c(exp(avpar),bootres[pick,nmvar]),lwd=c(3,2,3,2),

col=c(3,4,4,4))

}

msy <- parboot[,"r"]*parboot[,"K"]/4 #calculate bootstrap MSY

msyB <- quants(msy) #from optimum bootstrap parameters

parset(plots=c(2,2),cex=0.9)

bootres <- apply(parboot,2,quants); pick <- c(2,3,4) #quantiles

dohist(parboot,nmvar="r",bootres=bootres,avpar=optpar[1])

dohist(parboot,nmvar="K",bootres=bootres,avpar=optpar[2])

dohist(parboot,nmvar="Binit",bootres=bootres,avpar=optpar[3])

hist(msy,breaks=30,main="",xlab="MSY",col=0)

abline(v=c(optmsy,msyB[pick]),lwd=c(3,2,3,2),col=c(3,4,4,4))

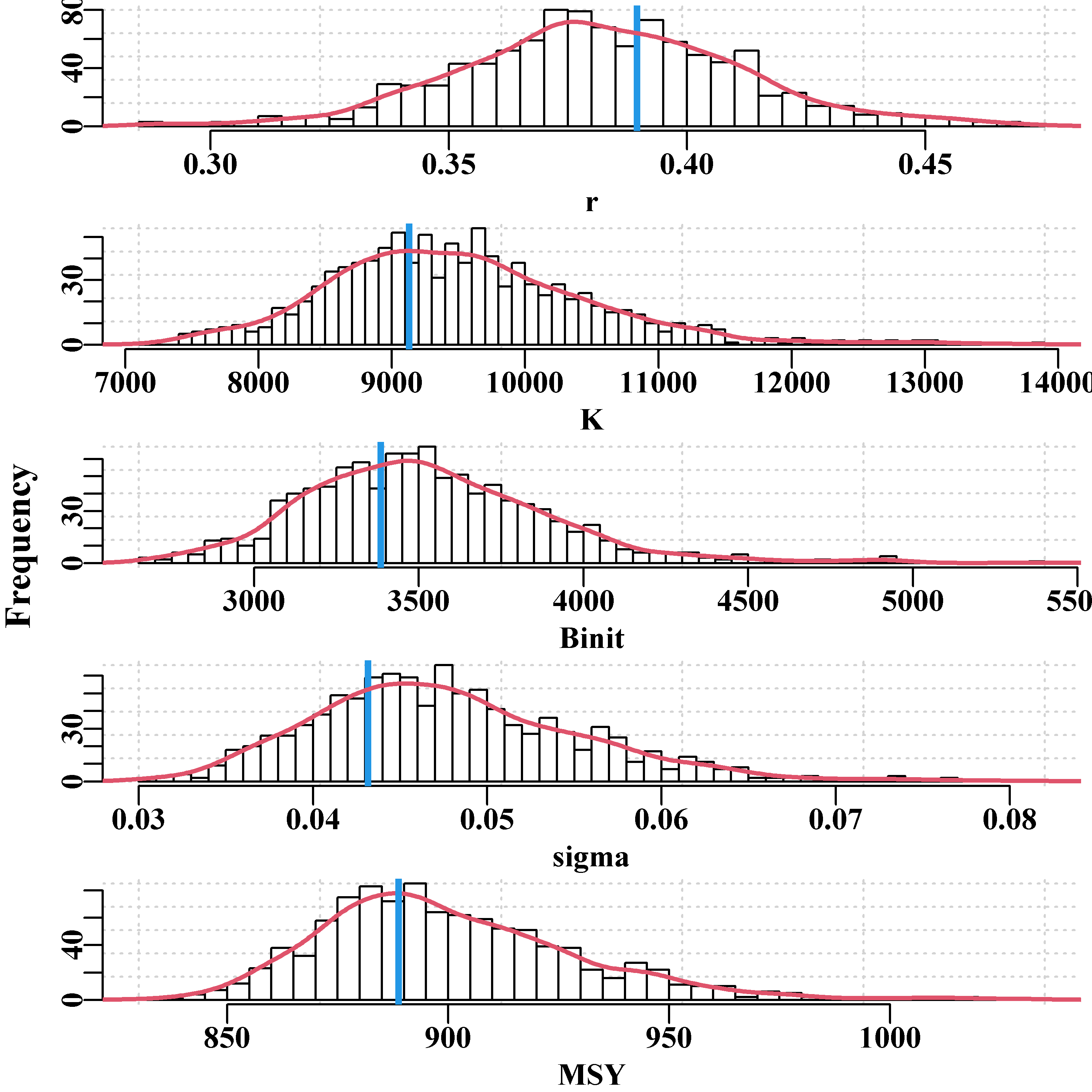

Figure 6.5: The 1000 bootstrap estimates of each of the first three model parameters and MSY as histograms. In each plot the two fine outer lines define the inner 90% confidence bounds around the median, the central vertical line denotes the optimum estimates, but these are generally immediately below the medians, except for the Binit.

Once again, with only 1000 bootstrap estimates, the histograms are not really smooth representations of the empirical distribution for all values of the parameters and outputs in question. More replicates would smooth the outputs and stabilize the quantile or percentile confidence bounds. Even so it is possible to generate an idea of the the precision with which such parameters and outputs are generated.

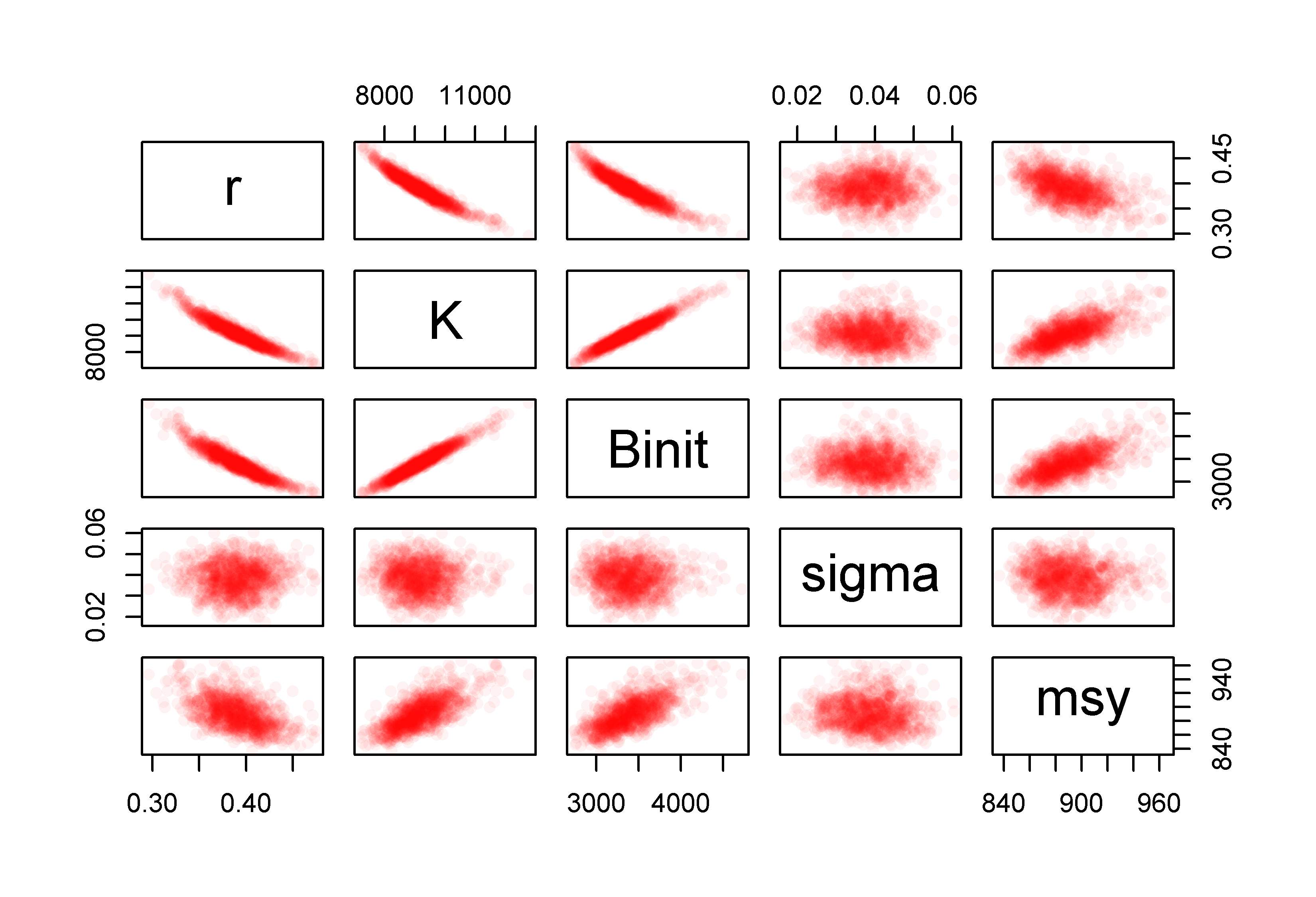

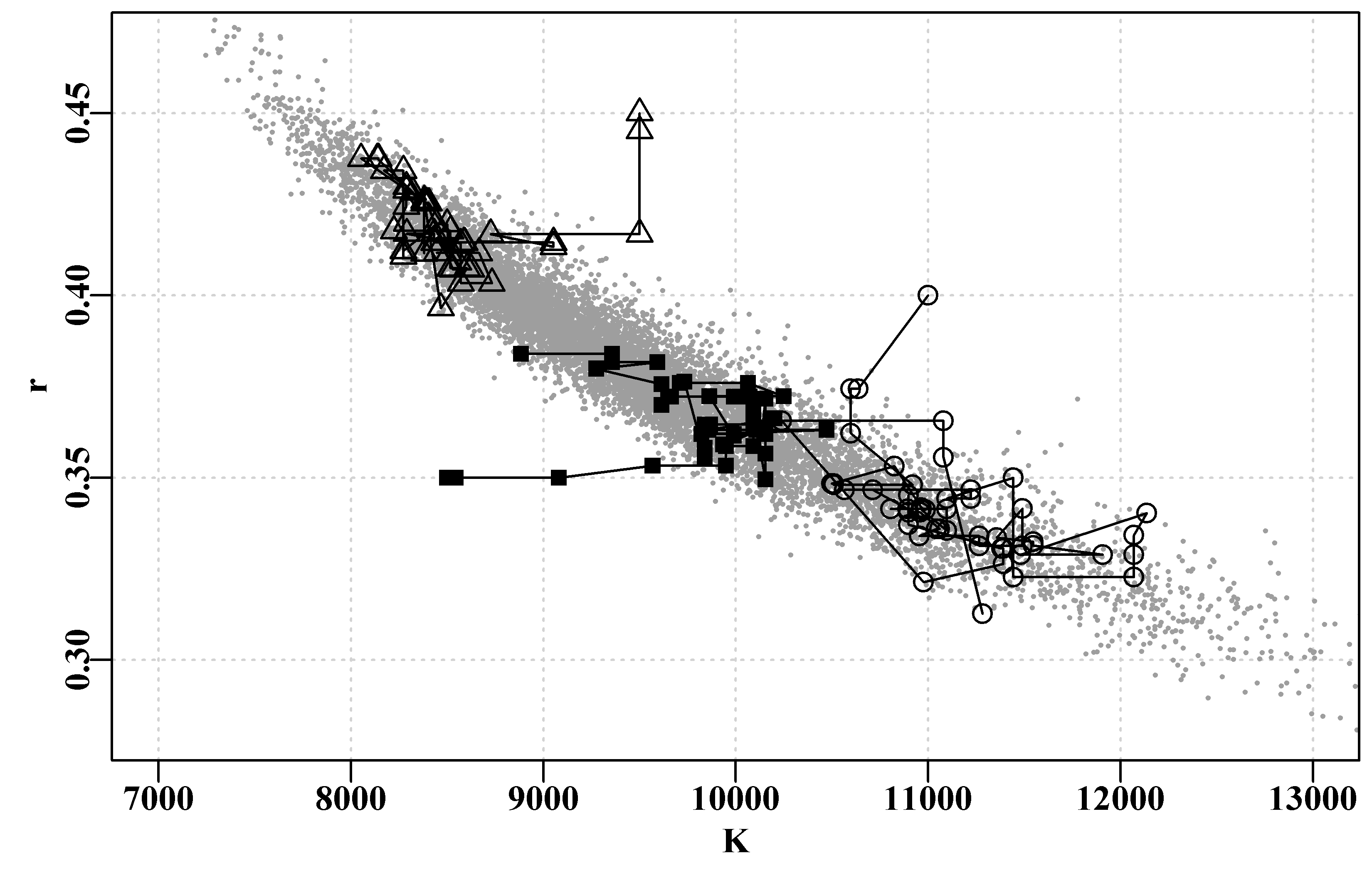

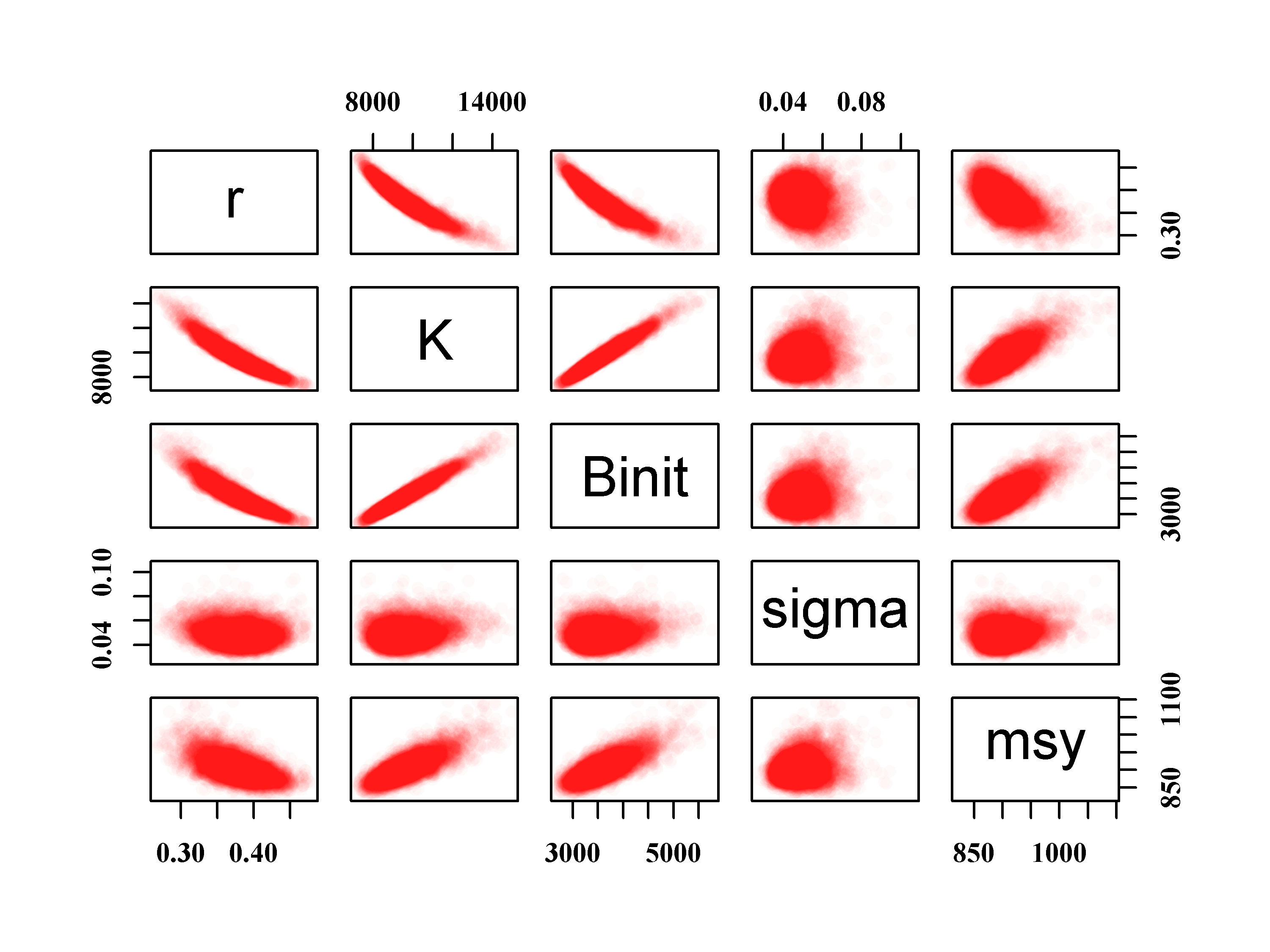

6.4.1 Parameter Correlation

We can also examine any correlations between the parameters and model outputs by plotting each against the others using the R function pairs(). The expected strong correlation between \(r\), \(K\), and \(B_\text{init}\) is immediately apparent. The lack of correlations with the value of sigma is typical of how variation within the residuals should behave. More interesting is the fact that the \(msy\), which is a function of \(r\) and \(K\), exhibits a reduced correlation with the other parameters. This reduction is a reflection of the negative correlation between \(r\) and \(K\) which acts to cancel out the effects of changes to each other. We can thus have similar values of \(msy\) for rather different values of \(r\) and \(K\). The use of the rgb() function to vary the intensity of colour relative to the density of points in Figure(6.6) could also be used when plotting the 1000 trajectories to make it possible to identify the most common trajectories in Figure(6.4).

#relationships between parameters and MSY Fig 6.6

parboot1 <- cbind(parboot,msy)

# note rgb use, alpha allows for shading, try 1/15 or 1/10

pairs(parboot1,pch=16,col=rgb(red=1,green=0,blue=0,alpha = 1/20))

Figure 6.6: The relationships between the 1000 bootstrap estimates of the optimum parameter estimates and the derived MSY values for the Schaefer model fitted to the abdat data-set. Full colour intensity derived from a minimum of 20 points. More bootstrap replicates would fill out these intensity plots.

6.5 Asymptotic Errors

The concept of confidence intervals (often 90% or 95% CI) is classically defined in Snedecor and Cochran (1967, 1989), and very many others, as:

\[\begin{equation} \bar{x}\pm {{t}_{\nu}}\frac{\sigma }{\sqrt{n}} \tag{6.8} \end{equation}\]

where \(\bar{x}\) is the mean of the sample of \(n\) observations (in fact, any parameter estimate), \(\sigma\) is the sample standard deviation, a measure of sample variation, and \(t_{\nu}\) is the t-distribution with \(\nu=(n-1)\) degrees of freedom (see also rt(), dt(), pt(), and qt()). If we had multiple independent samples it would be possible to estimate the standard deviation of the group of sample means. The \({\sigma}/{\sqrt{n}}\) is known as the standard error of the variable \(x\), and is one analytical way of estimating the expected standard deviation of a set of sample means when there is only one sample (bootstrapping to generate multiple samples is another way, though then it would be better to use percentile CI directly). As we are dealing with normally distributed data, such classical confidence intervals are distributed symmetrically around the mean or expectation. This is fine when dealing with single samples but the problem we are attempting to solve is to determine with what confidence we can trust the parameter estimates and model outputs obtained when fitting a multi-parameter model to data.

In such circumstance asymptotic standard errors as in Equ(6.8) can be produced by estimating the variance-covariance matrix for the model parameters in the vicinity of the optimum parameter set. In practice, the gradient of the maximum likelihood or sum of squares surface at the optimum of a multi-parameter model is assumed to approximate a multivariate normal distributions and can be used to characterize any relationships between the various parameters. These relationships are the basis of generating the required variance-covariance matrix. This matrix is used to generate standard errors for each parameter, as in Equ(6.8), and these can then be used to estimate approximate confidence intervals for the parameters. They are approximate because this method assumes that the fitted surface near the optimum is regular and smooth, and that the surface is approximately multi-variate normal (= symmetrical) near the optimum. This means the resulting standard errors will be symmetric around the optimal solution, which may or may not be appropriate. Nevertheless, as a first approximation, asymptotic standard errors can provide an indication of the inherent variation around the parameter estimates.

The Hessian matrix describes the local curvature or gradient of the maximum likelihood surface near the optimum. More formally, this is made up of the second-order partial derivatives of the function describing the likelihood of different parameter sets. That is, the rate of change of the rate of change of each parameter relative to itself and all the other parameters. All Hessian matrices are square. If we only consider \(r\), \(K\), and \(Binit\), three of the four parameters of the Schaefer model, Equ(6.1), the Hessian describing these three would be:

\[\begin{equation} H(f)=\left\{ \begin{matrix} \frac{{{\partial }^{2}}f}{\partial {{r}^{2}}} & \frac{{{\partial }^{2}}f}{\partial r\partial K} & \frac{{{\partial }^{2}}f}{\partial r\partial {{B}_{init}}} \\ \frac{{{\partial }^{2}}f}{\partial r\partial K} & \frac{{{\partial }^{2}}f}{\partial {{K}^{2}}} & \frac{{{\partial }^{2}}f}{\partial K\partial {{B}_{init}}} \\ \frac{{{\partial }^{2}}f}{\partial r\partial {{B}_{init}}} & \frac{{{\partial }^{2}}f}{\partial K\partial {{B}_{init}}} & \frac{{{\partial }^{2}}f}{\partial B_{init}^{2}} \\ \end{matrix} \right\} \tag{6.9} \end{equation}\]

If the function \(f\), for which we are calculating the second-order partial differentials, uses log-likelihoods to fit the model to the data then the variance-covariance matrix is the inverse of the Hessian matrix. However, and note this well, if the \(f\) function uses least-squares to conduct the analysis then formally the variance-covariance matrix is the product of the residual variance at the optimum fit and the inverse of the Hessian matrix. The residual variance reflects the number of parameters estimated:

\[\begin{equation} S{{x}^{2}}=\frac{{{\sum{\left( x-\hat{x} \right)}}^{2}}}{n-p} \tag{6.10} \end{equation}\]

which is the sum-of-squared deviations, between the observed and predicted values, divided by the number of observations (\(n\)) minus the number of parameters (\(p\)).

Thus, either the variance-covariance matrix (\(\mathbf{A}\)) is estimated by inverting the Hessian, \(\mathbf{A}=\mathbf{H}^{-1}\) (when using maximum likelihood), or, when using least-squares, we multiply the elements of the inverse of the Hessian by the residual variance:

\[\begin{equation} \mathbf{A}=S{{x}^{2}}{{\mathbf{H}}^{-1}} \tag{6.11} \end{equation}\]

Here we will focus on using maximum likelihood methods but it is worthwhile knowing the difference in procedure between using maximum likelihood and least squares methodologies just in case (it is recommended to always use maximum likelihood when using asymptotic errors).

The estimate of the standard error for each parameter in the \(\theta\) vector is obtained by taking the square root of the diagonal elements (the variances) of the variance-covariance matrix:

\[\begin{equation} StErr(\theta)=\sqrt{diag(\mathbf{A})} \tag{6.12} \end{equation}\]

In Excel we used the method of finite differences to estimate the Hessian (Haddon, 2011) but this approach does not always perform well with strongly correlated parameters. Happily, in R many of the non-linear solvers available provide the option of automatically generating an estimate of the Hessian when fitting a model.

#Fit Schaefer model and generate the Hessian

data(abdat)

param <- log(c(r= 0.42,K=9400,Binit=3400,sigma=0.05))

# Note inclusion of the option hessian=TRUE in nlm function

bestmod <- nlm(f=negLL,p=param,funk=simpspm,indat=abdat,

logobs=log(abdat[,"cpue"]),hessian=TRUE)

outfit(bestmod,backtran = TRUE) #try typing bestmod in console

# Now generate the confidence intervals

vcov <- solve(bestmod$hessian) # solve inverts matrices

sterr <- sqrt(diag(vcov)) #diag extracts diagonal from a matrix

optpar <- bestmod$estimate #use qt for t-distrib quantiles

U95 <- optpar + qt(0.975,20)*sterr # 4 parameters hence

L95 <- optpar - qt(0.975,20)*sterr # (24 - 4) df

cat("\n r K Binit sigma \n")

cat("Upper 95% ",round(exp(U95),5),"\n") # backtransform

cat("Optimum ",round(exp(optpar),5),"\n")#\n =linefeed in cat

cat("Lower 95% ",round(exp(L95),5),"\n") # nlm solution:

# minimum : -41.37511

# iterations : 20

# code : 2 >1 iterates in tolerance, probably solution

# par gradient transpar

# 1 -0.9429555 6.707523e-06 0.38948

# 2 9.1191569 -9.225209e-05 9128.50173

# 3 8.1271026 1.059296e-04 3384.97779

# 4 -3.1429030 -8.161433e-07 0.04316

# hessian :

# [,1] [,2] [,3] [,4]

# [1,] 3542.8630987 2300.305473 447.63247 -0.3509669

# [2,] 2300.3054733 4654.008776 -2786.59928 -4.2155105

# [3,] 447.6324677 -2786.599276 3183.93947 -2.5662898

# [4,] -0.3509669 -4.215511 -2.56629 47.9905538

#

# r K Binit sigma

# Upper 95% 0.45025 10948.12 4063.59 0.05838

# Optimum 0.38948 9128.502 3384.978 0.04316

# Lower 95% 0.33691 7611.311 2819.693 0.03196.5.1 Uncertainty about the Model Outputs

Asymptotic standard errors can also provide approximate confidence intervals around the model outputs, such as the MSY estimate, although this requires a somewhat different approach. This uses the assumption that the log-likelihood surface about the optimum solution is approximated by a multi-variate Normal distribution. This is usually defined as:

\[\begin{equation} \text{Multi-Variate Normal} = N \left( {\mu}, {\Sigma} \right) \tag{6.13} \end{equation}\]

where, in the cases considered here, \({\mu}\) is the vector of optimal parameter estimates (a vector of means), and \({\Sigma}\) is the variance-covariance matrix for the vector of optimum parameters.

Once these inputs are estimated, we can generate random parameter vectors by sampling from the estimated multi-variate Normal distribution that has a mean of the optimum parameter estimates and a variance-covariance matrix estimated from the inverse Hessian. Such random parameter vectors can be used, in the same way as with bootstrap parameter vectors, to generate percentile confidence intervals around parameters and model outputs. Using the multi-variate normal (some write it as multivariate normal) any parameter correlations between parameters are automatically accounted for.

6.5.2 Sampling from a Multi-Variate Normal Distribution

Base R does not have functions for working with the multi-variate Normal distribution, but one can use a couple of R packages that include a suitable function. The MASS library (Venables and Ripley, 2002) includes a suitable random number generator while the mvtnorm library has an even wider array of multi-variate probability density functions. Here we will use mvtnorm.

# Use multi-variate normal to generate percentile CI Fig 6.7

library(mvtnorm) # use RStudio, or install.packages("mvtnorm")

N <- 1000 # number of multi-variate normal parameter vectors

years <- abdat[,"year"]; sampn <- length(years) # 24 years

mvncpue <- matrix(0,nrow=N,ncol=sampn,dimnames=list(1:N,years))

columns <- c("r","K","Binit","sigma")

# Fill parameter vectors with N vectors from rmvnorm

mvnpar <- matrix(exp(rmvnorm(N,mean=optpar,sigma=vcov)),

nrow=N,ncol=4,dimnames=list(1:N,columns))

# Calculate N cpue trajectories using simpspm

for (i in 1:N) mvncpue[i,] <- exp(simpspm(log(mvnpar[i,]),abdat))

msy <- mvnpar[,"r"]*mvnpar[,"K"]/4 #N MSY estimates

# plot data and trajectories from the N parameter vectors

plot1(abdat[,"year"],abdat[,"cpue"],type="p",xlab="Year",

ylab="CPUE",cex=0.9)

for (i in 1:N) lines(abdat[,"year"],mvncpue[i,],col="grey",lwd=1)

points(abdat[,"year"],abdat[,"cpue"],pch=16,cex=1.0)#orig data

lines(abdat[,"year"],exp(simpspm(optpar,abdat)),lwd=2,col=1)

Figure 6.7: The 1000 predicted cpue trajectories derived from random parameter vectors sampled from the multi-variate Normal distribution defined by the optimum parameters and their related variance-covariance matrix.

As with the bootstrap example, even a sample of 1000 trajectories were insufficient to fill in the trajectory space completely so that some regions were less dense than others. More replicates might fill in those spaces, Figure(6.7). The use of rgb() with the bootstrap cpue lines would also help identify less dense regions.

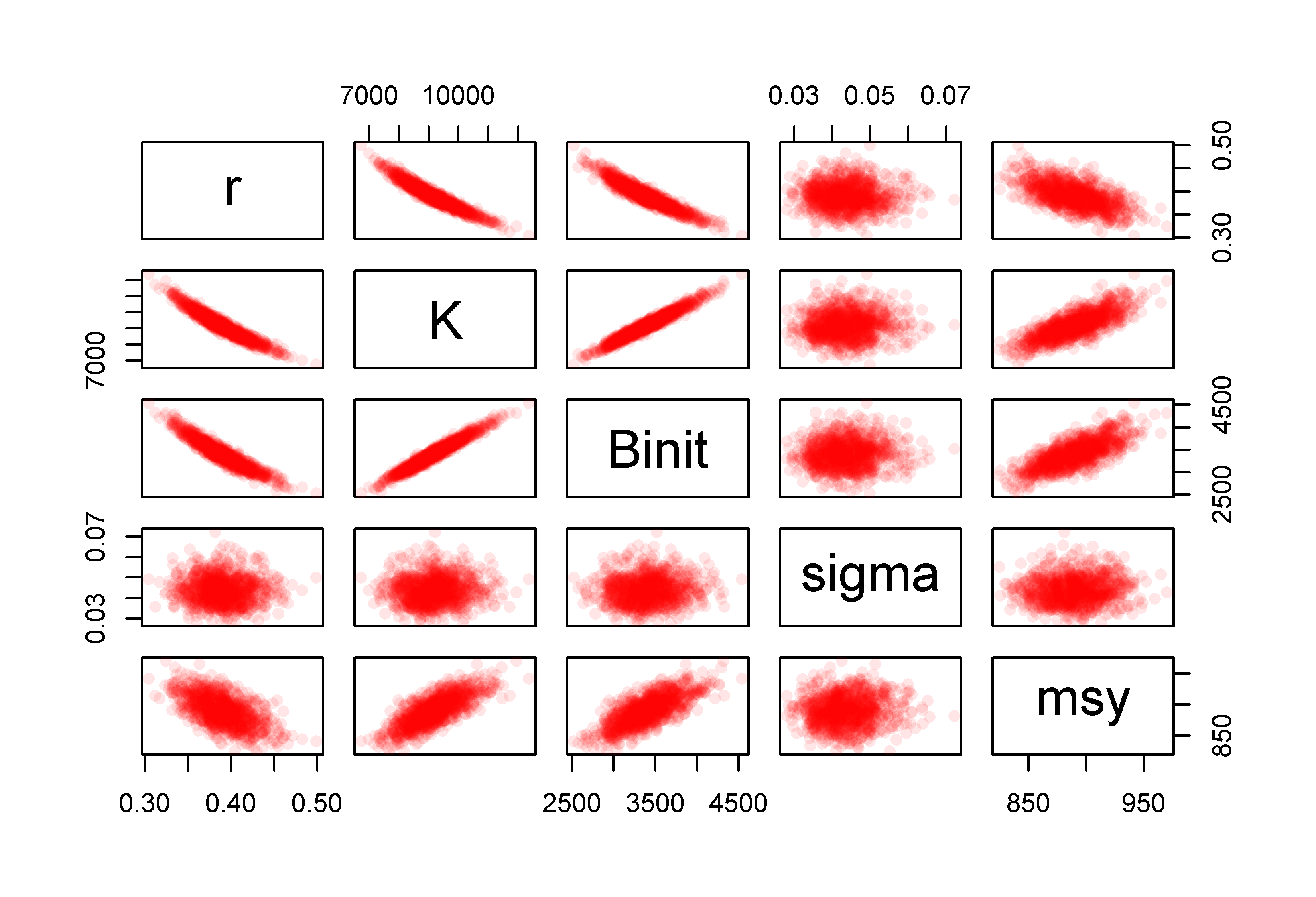

We can also plot out the implied parameter correlations (if any) using pairs(), as we did for the bootstrap samples, Figure(6.8). Here, however, the results appear to be relatively evenly and cleanly distributed, relative to those from the bootstrapping process, Figure(6.6). The smoother distributions reflect the fact the values are all sampled from a well-defined probability density function rather than an empirical distribution. It would be reasonable to argue that the bootstrapping procedure would be expected to provide a more accurate representation of the properties of the data. However, which set of results better represents the population from which the original sample came from is less simple to answer with confidence. What really matters is whether their summary statistics differ and, considering Table(6.2), we can see that while the exact details of the distributions for each parameter and model output differ slightly, there does not appear to be any single pattern of one being wider or narrower than the other in a consistent fashion.

#correlations between parameters when using mvtnorm Fig 6.8

pairs(cbind(mvnpar,msy),pch=16,col=rgb(red=1,0,0,alpha = 1/10))

Figure 6.8: The relationships between the 1000 parameter estimates sampled from the estimated multi-variate Normal distribution assumed to surround the optimum parameter estimates and the derived MSY values for the Schaefer model fitted to the abdat data set.

Finally, we can illustrate the asymptotic confidence intervals by plotting out an array of histograms for parameters and MSY along with their predicted confidence bounds, Figure(6.9). In this case we use the inner 90% bounds. The mean and median values are even closer than with the bootstrapping example, which is a further reflection of the symmetry of using the multi-variate Normal distribution.

#N parameter vectors from the multivariate normal Fig 6.9

mvnres <- apply(mvnpar,2,quants) # table of quantiles

pick <- c(2,3,4) # select rows for 5%, 50%, and 95%

meanmsy <- mean(msy) # optimum bootstrap parameters

msymvn <- quants(msy) # msy from mult-variate normal estimates

plothist <- function(x,optp,label,resmvn) {

hist(x,breaks=30,main="",xlab=label,col=0)

abline(v=c(exp(optp),resmvn),lwd=c(3,2,3,2),col=c(3,4,4,4))

} # repeated 4 times, so worthwhile writing a short function

par(mfrow=c(2,2),mai=c(0.45,0.45,0.05,0.05),oma=c(0.0,0,0.0,0.0))

par(cex=0.85, mgp=c(1.35,0.35,0), font.axis=7,font=7,font.lab=7)

plothist(mvnpar[,"r"],optpar[1],"r",mvnres[pick,"r"])

plothist(mvnpar[,"K"],optpar[2],"K",mvnres[pick,"K"])

plothist(mvnpar[,"Binit"],optpar[3],"Binit",mvnres[pick,"Binit"])

plothist(msy,meanmsy,"MSY",msymvn[pick])

Figure 6.9: Histograms of the 1000 parameter estimates for r, K, Binit, and the derived MSY, from the multi-variate normal estimated at the optimum solution. In each plot, the green line denotes the arithmetic mean, the thick blue line the median, and the two fine blue lines the inner 90% confidence bounds around the median.

| r | K | Binit | sigma | msyB | |

|---|---|---|---|---|---|

| 2.5% | 0.3353 | 7740.636 | 2893.714 | 0.0247 | 854.029 |

| 5% | 0.3449 | 8010.341 | 2970.524 | 0.0263 | 857.660 |

| 50% | 0.3903 | 9116.193 | 3387.077 | 0.0385 | 888.279 |

| 95% | 0.4353 | 10507.708 | 3889.824 | 0.0501 | 929.148 |

| 97.5% | 0.4452 | 11003.649 | 4055.071 | 0.0523 | 940.596 |

| r | K | Binit | sigma | msymvn | |

|---|---|---|---|---|---|

| 2.5% | 0.3387 | 7717.875 | 2882.706 | 0.0328 | 844.626 |

| 5% | 0.3474 | 7943.439 | 2945.264 | 0.0342 | 851.257 |

| 50% | 0.3889 | 9145.146 | 3389.279 | 0.0432 | 889.216 |

| 95% | 0.4368 | 10521.651 | 3900.034 | 0.0550 | 928.769 |

| 97.5% | 0.4435 | 10879.571 | 4031.540 | 0.0581 | 934.881 |

6.6 Likelihood Profiles

The name ‘Likelihood Profile’ is suggestive of the process used to generate these analyses. In this chapter we have already optimally fitted a four parameter model and examined ways of characterizing the uncertainty around those parameter estimates. One can imagine selecting a single parameter from the four and fixing its value a little way away from its optimum value. If one then refitted the model holding the selected parameter fixed but allowing the others to vary we can imagine that a new optimum solution would be found for the remaining parameters but that the negative log-likelihood would be larger than the optimum obtained when all four parameters were free to vary. This process is the essential idea behind the generation of likelihood profiles

The idea is to fit a model using maximum likelihood methods (minimization of the -ve log-likelihood) but only fitting specific parameters while keeping others constant at values surrounding the optimum value. In this way new “optimum” fits can be obtained while a given parameter has been given an array of fixed values. We can thus determine the influence of the fixed parameter(s) on the total likelihood of the model fit. An example may help make the process clear.

Once again using the Schaefer surplus production model on the abdat fishery data we obtain the optimum parameters r = 0.3895, K = 9128.5, Binit = 3384.978, and sigma = 0.04316, which imply an MSY = 888.842t.

#Fit the Schaefer surplus production model to abdat

data(abdat); logce <- log(abdat$cpue) # using negLL

param <- log(c(r= 0.42,K=9400,Binit=3400,sigma=0.05))

optmod <- nlm(f=negLL,p=param,funk=simpspm,indat=abdat,logobs=logce)

outfit(optmod,parnames=c("r","K","Binit","sigma")) # nlm solution:

# minimum : -41.37511

# iterations : 20

# code : 2 >1 iterates in tolerance, probably solution

# par gradient transpar

# r -0.9429555 6.707523e-06 0.38948

# K 9.1191569 -9.225209e-05 9128.50173

# Binit 8.1271026 1.059296e-04 3384.97779

# sigma -3.1429030 -8.161433e-07 0.04316The problem of how to fit a restricted number of model parameters, while holding the remainder constant is solved by modifying the function used to minimize the negative log-likelihood. Instead of using the negLL() function to calculate the negative log-likelihood of the Log-Normally distributed cpue values we have used the MQMF function negLLP() (negative log-likelihood profile). This adds the capacity to fix some of the parameters while solving for an optimum solution by only varying the non-fixed parameters. If we look at the R code for the negLLP() function and compare it to the negLL() function we can see that the important differences, other than in the arguments, is in the three lines of code before the logpred statement. See the help (?) page for further details, though I hope you can see straight away that if you ignore the initpar and notfixed arguments negLLP() should give the same answer as negLL().

#the code for MQMF's negLLP function

negLLP <- function(pars, funk, indat, logobs, initpar=pars,

notfixed=c(1:length(pars)),...) {

usepar <- initpar #copy the original parameters into usepar

usepar[notfixed] <- pars[notfixed] #change 'notfixed' values

npar <- length(usepar)

logpred <- funk(usepar,indat,...) #funk uses the usepar values

pick <- which(is.na(logobs)) # proceed as in negLL

if (length(pick) > 0) {

LL <- -sum(dnorm(logobs[-pick],logpred[-pick],exp(pars[npar]),

log=T))

} else {

LL <- -sum(dnorm(logobs,logpred,exp(pars[npar]),log=T))

}

return(LL)

} # end of negLLP For example, to determine the precision with which the \(r\) parameter has been estimated we can force it to take on constant values from about 0.3 to 0.45 while fitting the other parameters to obtain an optimum fit and then plot up the total likelihood with respect to the given \(r\) value. As with all model fitting in R we need two functions, one to generate the required predicted values and the other to act as a wrapper to bring the observed and predicted values together to be used by the minimizer, in this case nlm(). To proceed we use negLLP() to allow for some parameters to be fixed in value. nlm() works by iteratively modifying the input parameters in the direction that ought to improve the model fit and then feeding those parameters back into the input model function that generates the predicted values against which the observed are compared. We therefore need to arrange the model function to continually return the particular parameter values that we want fixed to the initial set values. The code in negLLP() is one way in which to do this. The changes required include having an independent set of the original pars in initpar, which must contain the specified fixed parameters, and a notfixed argument identifying which values from initpar to over-write with values from pars, which will become modified by nlm.

It is best practice to check that negLLP() generates the same solution as the use of negLL(), even though inspection of the code assures us that it will (I am no longer surprised to find out that I can make mistakes), by allowing all parameters to vary (the default setting).

#does negLLP give same answers as negLL when no parameters fixed?

param <- log(c(r= 0.42,K=9400,Binit=3400,sigma=0.05))

bestmod <- nlm(f=negLLP,p=param,funk=simpspm,indat=abdat,logobs=logce)

outfit(bestmod,parnames=c("r","K","Binit","sigma")) # nlm solution:

# minimum : -41.37511

# iterations : 20

# code : 2 >1 iterates in tolerance, probably solution

# par gradient transpar

# r -0.9429555 6.707523e-06 0.38948

# K 9.1191569 -9.225209e-05 9128.50173

# Binit 8.1271026 1.059296e-04 3384.97779

# sigma -3.1429030 -8.161433e-07 0.04316Happily, as expected, we obtain the same solution and so now we can proceed to examine the effect of fixing the value of \(r\) and re-fitting the model. Using hind-sight (so much better than reality), we have selected \(r\) values between 0.325 and 0.45. We can set up a loop to sequentially apply these values and fit the respective models, saving the solutions as the loop proceeds. Below we have tabulated the first few results, Table(6.3), to illustrate exactly how the negative log-likelihood increases as the fixed value of \(r\) is set further and further away from its optimum value.

#Likelihood profile for r values 0.325 to 0.45

rval <- seq(0.325,0.45,0.001) # set up the test sequence

ntrial <- length(rval) # create storage for the results

columns <- c("r","K","Binit","sigma","-veLL")

result <- matrix(0,nrow=ntrial,ncol=length(columns),

dimnames=list(rval,columns))# close to optimum

bestest <- c(r= 0.32,K=11000,Binit=4000,sigma=0.05)

for (i in 1:ntrial) { #i <- 1

param <- log(c(rval[i],bestest[2:4])) #recycle bestest values

parinit <- param #to improve the stability of nlm as r changes

bestmodP <- nlm(f=negLLP,p=param,funk=simpspm,initpar=parinit,

indat=abdat,logobs=log(abdat$cpue),notfixed=c(2:4),

typsize=magnitude(param),iterlim=1000)

bestest <- exp(bestmodP$estimate)

result[i,] <- c(bestest,bestmodP$minimum) # store each result

}

minLL <- min(result[,"-veLL"]) #minimum across r values used. | r | K | Binit | sigma | -veLL | |

|---|---|---|---|---|---|

| 0.325 | 0.325 | 11449.17 | 4240.797 | 0.0484 | -38.61835 |

| 0.326 | 0.326 | 11403.51 | 4223.866 | 0.0483 | -38.69554 |

| 0.327 | 0.327 | 11358.24 | 4207.082 | 0.0481 | -38.77196 |

| 0.328 | 0.328 | 11313.35 | 4190.442 | 0.0480 | -38.84759 |

| 0.329 | 0.329 | 11268.83 | 4173.945 | 0.0478 | -38.92242 |

| 0.33 | 0.330 | 11224.69 | 4157.589 | 0.0477 | -38.99643 |

| 0.331 | 0.331 | 11180.91 | 4141.373 | 0.0475 | -39.06961 |

| 0.332 | 0.332 | 11137.49 | 4125.293 | 0.0474 | -39.14194 |

| 0.333 | 0.333 | 11094.43 | 4109.350 | 0.0472 | -39.21339 |

| 0.334 | 0.334 | 11051.72 | 4093.540 | 0.0471 | -39.28397 |

| 0.335 | 0.335 | 11009.11 | 4077.752 | 0.0469 | -39.35364 |

| 0.336 | 0.336 | 10967.34 | 4062.316 | 0.0468 | -39.42239 |

6.6.1 Likelihood Ratio Based Confidence Intervals

Venzon and Moolgavkar (1988) described a method of obtaining what they call “approximate \(1 - \alpha\) profile-likelihood-based confidence intervals”, which were based upon a re-arrangement of the usual approach to the use of a likelihood ratio test. The method relies on the fact that likelihood ratio tests asymptotically approach the \(\chi^2\) distribution as the sample size gets larger, so this method is only approximate. Not surprisingly, likelihood ratio tests are based upon a ratio of two likelihoods, or if dealing with log-likelihoods, the subtraction of one from another:

\[\begin{equation} \frac{L(\theta)_{max}}{L(\theta)} = e^{LL(\theta)_{max}-LL(\theta)} \tag{6.14} \end{equation}\]

where \(L(\theta)\) is the likelihood of the \(\theta\) parameters, the \(max\) subscript denotes the maximum likelihood (assuming all other parameters are also optimally fitted), and \(LL(\theta)\) is the equivalent log-likelihood. The expected log-likelihoods for the actual confidence intervals for a single parameter, assuming all others remain at the optimum (as in a likelihood profile), are given by the following (Venzon and Moolgavkar, 1988):

\[\begin{equation} \begin{split} & 2\times \left[ LL{{\left( \theta \right)}_{max}}-LL\left( \theta \right) \right]\le \chi _{1,1-\alpha }^{2} \\ & LL\left( \theta \right)=LL{{\left( \theta \right)}_{max}}-\frac{\chi _{1,1-\alpha }^{2}}{2} \end{split} \tag{6.15} \end{equation}\]

where \(\chi _{1,1-{\alpha}}^{2}\) is the \(1-{\alpha}\)th quantile of the \(\chi^2\) distribution with 1 degree of freedom (e.g., for 95% confidence intervals, \(\alpha = 0.95\) and \(1–{\alpha} = 0.05\), and \(\chi _{1,1-\alpha }^{2} = 3.84\).

For a single parameter \(\theta_i\), the approximate 95% confidence intervals are therefore those values of \(\theta_i\) for which two times the difference between the corresponding log-likelihood and the overall optimal log-likelihood is less than or equal to 3.84 (\(\chi _{1,1-\alpha }^{2} = 3.84\)). Alternatively (bottom line in Equ(6.15)), one can search for the \(\theta_i\) that generates a log-likelihood equal to the maximum log-likelihood minus half the required \(\chi^2\) value (i.e., \(LL(\theta)_{max} – {1.92}\), when with one degree of freedom). If conducting a 2-parameter likelihood surface, then one would search for the \(\theta_i\) with \(\chi^2\) set at 5.99 (2 degrees of freedom), that is one would subtract 2.995 from the maximum likelihood (and so on for higher degrees of freedom).

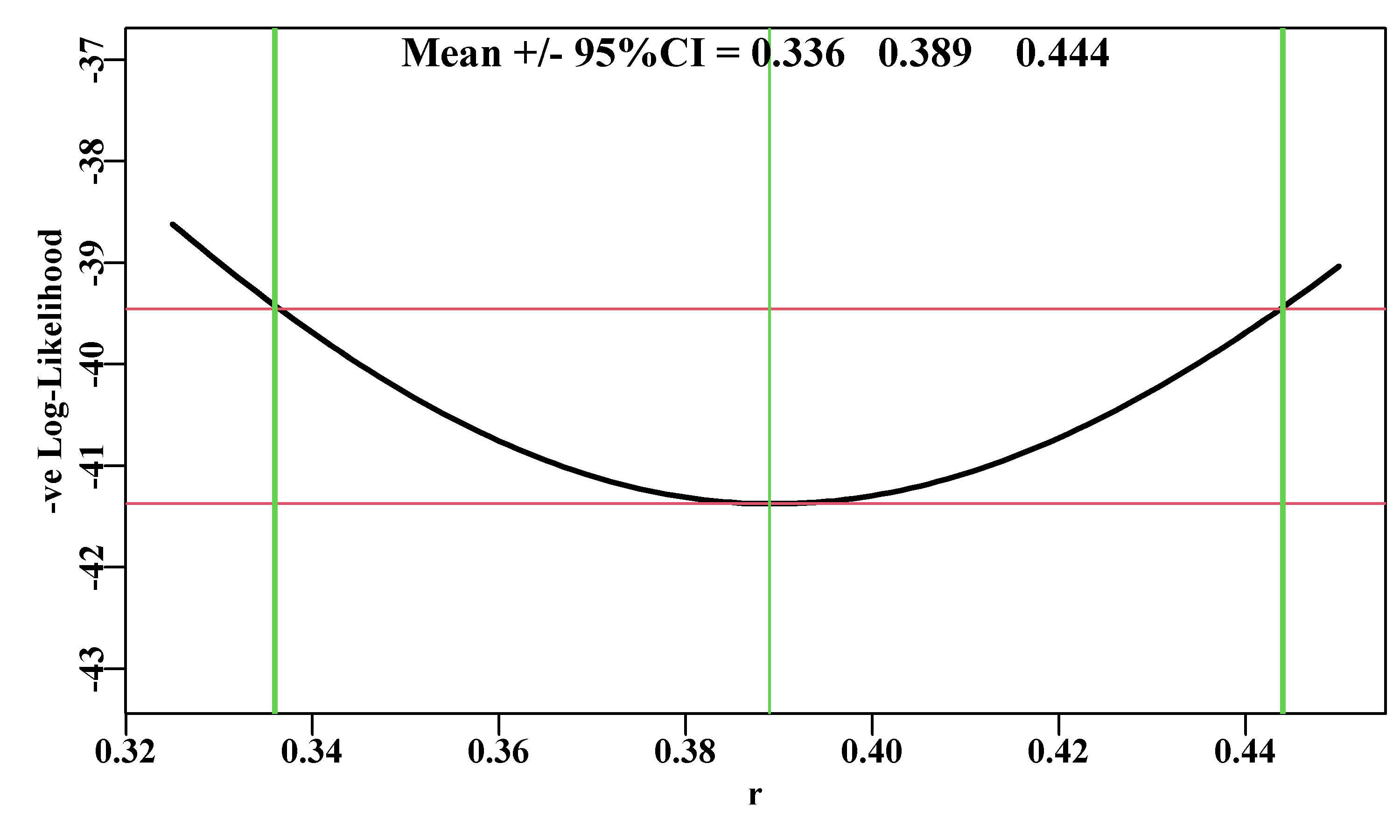

We can plot up the likelihood profile for the \(r\) parameter and include these approximate 95% confidence intervals. Examine the code within the function plotprofile() to see the steps involved in calculating the confidence intervals from the results of the analysis.

#likelihood profile on r from the Schaefer model Fig 6.10

plotprofile(result,var="r",lwd=2) # review the code

Figure 6.10: A likelihood profile for the r parameter from the Schaefer surplus production model fitted to the abdat data set. The horizontal solid lines are the minimum and the minimum minus 1.92 (95% level for \(\chi^2\) with 1 degree of freedom, see text). The outer vertical lines are the approximate 95% confidence bounds around the central mean of 0.389.

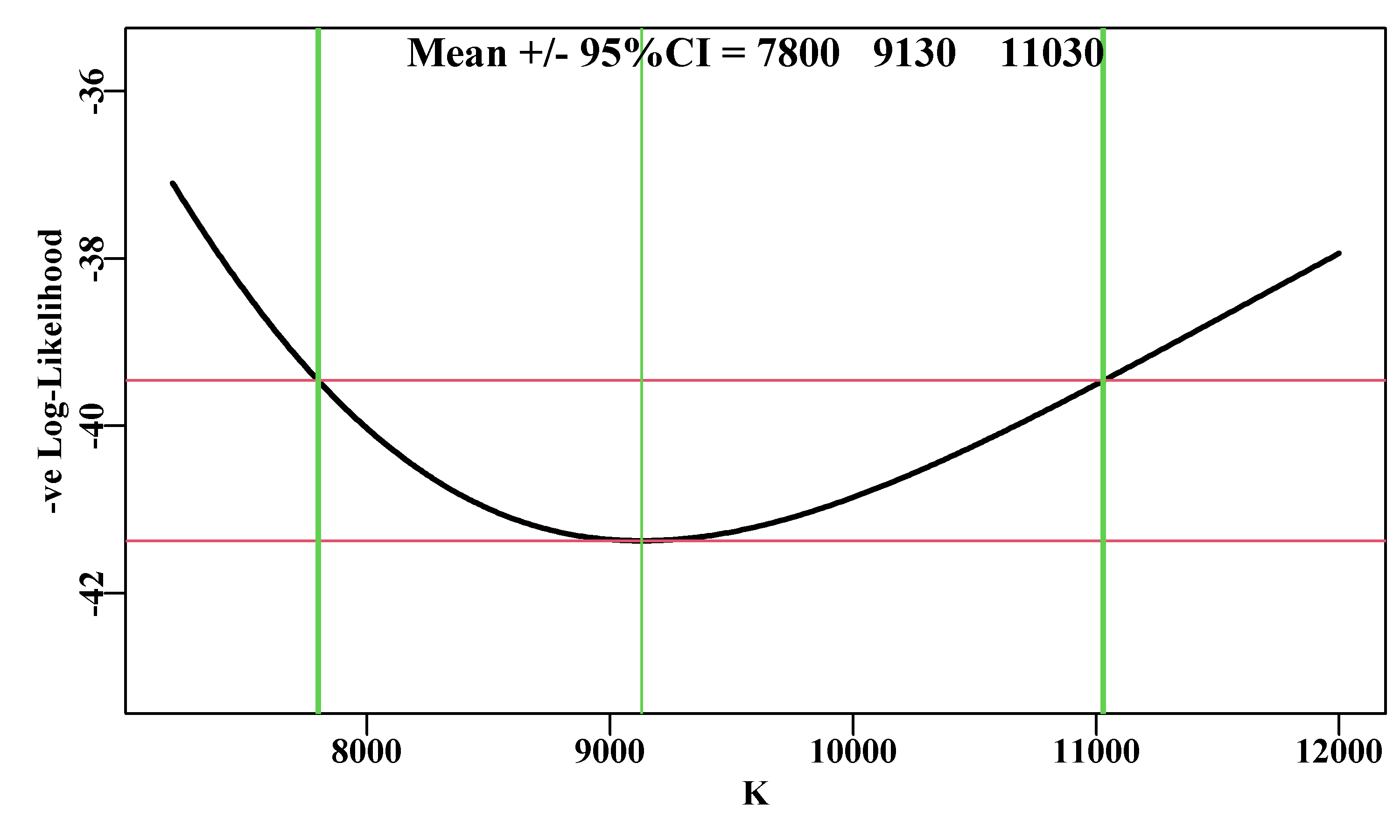

We can repeat this analysis for the other parameters in the Schaefer model. For example, we can look at the \(K\) parameter in a similar way using almost identical code. Note the stronger asymmetry in the profile plot for \(K\). There is slight asymmetry in the likelihood profile for \(r\) (subtract the 95% CI from the mean estimate), but visually it is obvious with the \(K\) parameter. The optimum parameter estimate for \(K\) is 9128.5, but the maximum likelihood in the profile data, points to a value of 9130. This is merely a reflection of the step length of the likelihood profile. It is currently set to 10, if it is set to 1 then we can obtain a closer approximation, although, of course, the analysis would take 10 times as long to run.

#Likelihood profile for K values 7200 to 12000

Kval <- seq(7200,12000,10)

ntrial <- length(Kval)

columns <- c("r","K","Binit","sigma","-veLL")

resultK <- matrix(0,nrow=ntrial,ncol=length(columns),

dimnames=list(Kval,columns))

bestest <- c(r= 0.45,K=7500,Binit=2800,sigma=0.05)

for (i in 1:ntrial) {

param <- log(c(bestest[1],Kval[i],bestest[c(3,4)]))

parinit <- param

bestmodP <- nlm(f=negLLP,p=param,funk=simpspm,initpar=parinit,

indat=abdat,logobs=log(abdat$cpue),

notfixed=c(1,3,4),iterlim=1000)

bestest <- exp(bestmodP$estimate)

resultK[i,] <- c(bestest,bestmodP$minimum)

}

minLLK <- min(resultK[,"-veLL"])

#kable(head(result,12),digits=c(4,3,3,4,5)) # if wanted.

Figure 6.11: A likelihood profile for the K parameter from the Schaefer surplus production model fitted to the abdat data-set, conducted in the same manner as the r parameter. The red lines are the minimum and the minimum plus 1.92 (95% level for Chi2 with 1 degree of freedom, see text). The vertical thick lines are the approximate 95% confidence bounds around the mean of 9128.5.

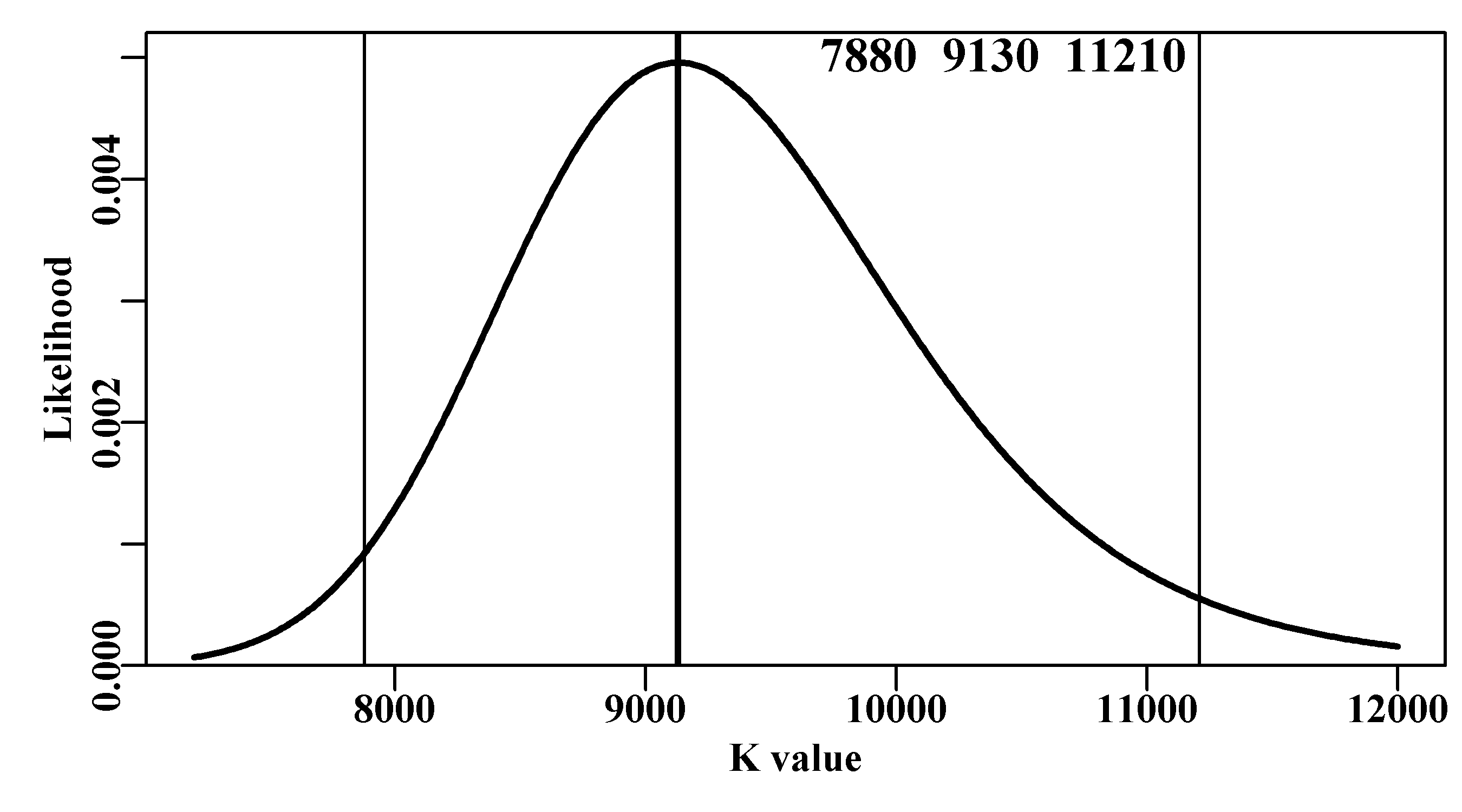

6.6.2 -ve Log-Likelihoods or Likelihoods

We have plotted the -ve Log-Likelihoods, e.g. Figure(6.11), and these are fine when operating in log-space, but few people have clear intuitions about log-space. An alternative, with which it may be simpler to understand the principle behind the percentile confidence intervals, would be to back-transform the -ve log-likelihoods into simple likelihoods. Of course, a log value of -41 denotes a very small number when back-transformed, but we can re-scale those values in the process of ensuring that the values of all the likelihoods sum to 1.0. To back-transform the negative log-likelihoods we need to negate them and then exponent them.

\[\begin{equation} L = exp(-(-veLL )) \tag{6.16} \end{equation}\]

So, for the likelihood profile applied to the K parameter we can see that the linear-space likelihoods begin to increase as the value of \(K\) approaches the optimum, and if we plot those likelihoods the shape of the distribution can be seen to take on a shape more intuitively understandable. The tails stay above zero but are well away from the 95% intervals:

#translate -velog-likelihoods into likelihoods

likes <- exp(-resultK[,"-veLL"])/sum(exp(-resultK[,"-veLL"]),

na.rm=TRUE)

resK <- cbind(resultK,likes,cumlike=cumsum(likes)) | r | K | Binit | sigma | -veLL | likes | cumlike | |

|---|---|---|---|---|---|---|---|

| 7200 | 0.4731 | 7200 | 2689.875 | 0.0516 | -37.09799 | 6.8863e-05 | 0.0000689 |

| 7210 | 0.4726 | 7210 | 2693.444 | 0.0515 | -37.14518 | 7.2191e-05 | 0.0001411 |

| 7220 | 0.4720 | 7220 | 2697.023 | 0.0514 | -37.19213 | 7.5660e-05 | 0.0002167 |

| 7230 | 0.4714 | 7230 | 2700.602 | 0.0513 | -37.23881 | 7.9276e-05 | 0.0002960 |

| 7240 | 0.4709 | 7240 | 2704.182 | 0.0512 | -37.28524 | 8.3044e-05 | 0.0003790 |

| 7250 | 0.4703 | 7250 | 2707.762 | 0.0511 | -37.33141 | 8.6968e-05 | 0.0004660 |

| 7260 | 0.4698 | 7260 | 2711.341 | 0.0510 | -37.37732 | 9.1054e-05 | 0.0005571 |

| 7270 | 0.4692 | 7270 | 2714.921 | 0.0509 | -37.42298 | 9.5307e-05 | 0.0006524 |

Figure 6.12: A likelihood profile for the K parameter from the Schaefer surplus production model fitted to the abdat data-set. In this case the -ve log-likelihoods have been back-transformed to likelihoods and scaled to sum to 1.0. The vertical lines are the approximate 95% confidence bounds around the mean. The top three numbers are the bounds and estimated optimum.

6.6.3 Percentile Likelihood Profiles for Model Outputs

Generally, the interest in stock assessment models relates to model outputs that are not directly estimated as parameters. As we have seen, generating confidence bounds around parameter estimates is relatively straightforward, but how do we provide similar estimates of uncertainty about model outputs such as the MSY (for the Schaefer model \(MSY = rK/4\))? For example, an assessment model might estimate stock biomass, or the maximum sustainable yield, or some other performance measure that can be considered an indirect output from a model. Likelihood profiles for such model outputs can be produced by adding a penalty term to the negative log-likelihood that attempts to constrain the likelihood to the optimal target (see Equ(6.17)). In this way, the impact on the log-likelihood of moving away from the optimum can be characterized.

\[\begin{equation} -veLL = -veLL-w \left(\frac{output-target}{target} \right)^2 \tag{6.17} \end{equation}\]

where -veLL is the negative log-likelihood, output is the variable of interest (in the example to follow this is the MSY), target is the optimum for that variable (the MSY from the overall optimal solution), and \(w\) is a weighting factor. The weighting factor should be as large as possible to generate the narrowest likelihood profile while still being able to converge on a solution. Below, we describe a specialized function negLLO() to deal with likelihood profiles around model outputs (this is not in MQMF). It is simply a modified version of the negLL() function that allow for the introduction of the weighting factor described in Equ(6.17). We can illustrate this by examining the likelihood profile around the optimum MSY value of 887.729t, which can be done across a range of 740 - 1050 tonnes, with a weighting of 900.

#examine effect on -veLL of MSY values from 740 - 1050t

#need a different negLLP() function, negLLO(): O for output.

#now optvar=888.831 (rK/4), the optimum MSY, varval ranges 740-1050

#and wght is the weighting to give to the penalty

negLLO <- function(pars,funk,indat,logobs,wght,optvar,varval) {

logpred <- funk(pars,indat)

LL <- -sum(dnorm(logobs,logpred,exp(tail(pars,1)),log=T)) +

wght*((varval - optvar)/optvar)^2 #compare with negLL

return(LL)

} # end of negLLO

msyP <- seq(740,1020,2.5);

optmsy <- exp(optmod$estimate[1])*exp(optmod$estimate[2])/4

ntrial <- length(msyP)

wait <- 400

columns <- c("r","K","Binit","sigma","-veLL","MSY","pen",

"TrialMSY")

resultO <- matrix(0,nrow=ntrial,ncol=length(columns),

dimnames=list(msyP,columns))

bestest <- c(r= 0.47,K=7300,Binit=2700,sigma=0.05)

for (i in 1:ntrial) { # i <- 1

param <- log(bestest)

bestmodO <- nlm(f=negLLO,p=param,funk=simpspm,indat=abdat,

logobs=log(abdat$cpue),wght=wait,

optvar=optmsy,varval=msyP[i],iterlim=1000)

bestest <- exp(bestmodO$estimate)

ans <- c(bestest,bestmodO$minimum,bestest[1]*bestest[2]/4,

wait *((msyP[i] - optmsy)/optmsy)^2,msyP[i])

resultO[i,] <- ans

}

minLLO <- min(resultO[,"-veLL"]) | r | K | Binit | sigma | -veLL | MSY | pen | |

|---|---|---|---|---|---|---|---|

| 740 | 0.389 | 9130.914 | 3385.871 | 0.0432 | -30.16 | 888.883 | 11.22 |

| 742.5 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -30.53 | 888.883 | 10.84 |

| 745 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -30.90 | 888.883 | 10.47 |

| 747.5 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -31.26 | 888.883 | 10.11 |

| r | K | Binit | sigma | -veLL | MSY | pen | |

|---|---|---|---|---|---|---|---|

| 1012.5 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -33.63 | 888.883 | 7.74 |

| 1015 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -33.32 | 888.883 | 8.06 |

| 1017.5 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -32.99 | 888.883 | 8.38 |

| 1020 | 0.389 | 9130.911 | 3385.872 | 0.0432 | -32.66 | 888.883 | 8.71 |

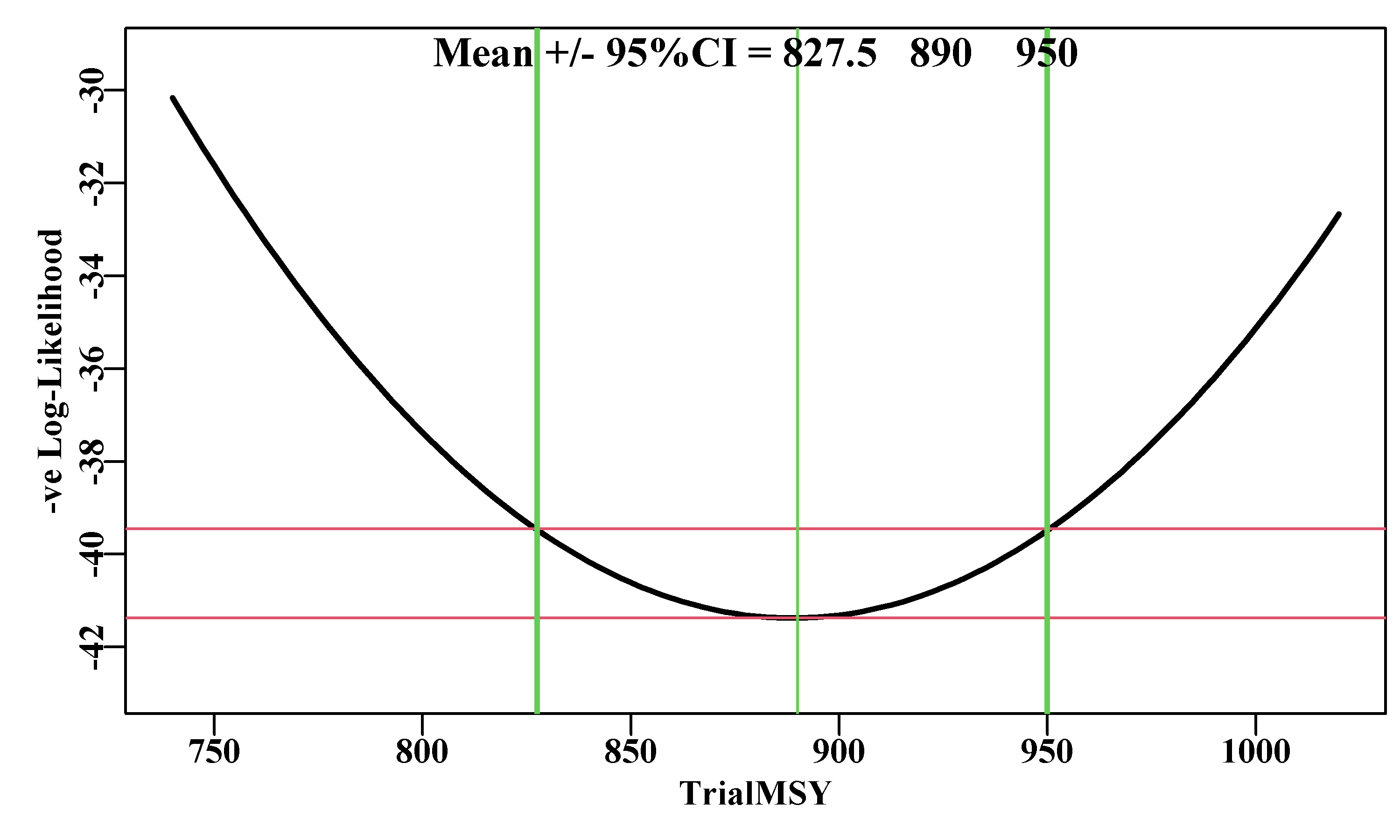

Figure 6.13: A likelihood profile for the MSY implied by the Schaefer surplus production model fitted to the abdat data-set. The horizontal red lines are the minimum and the minimum plus 1.92 (95% level for Chi2 with 1 degree of freedom, see text). The vertical lines are the approximate 95% confidence bounds around the mean of 887.729t.

Unfortunately, determining the optimum weighting to apply to the penalty term inside what we have termed negLLO(), can only be done empirically (trial and error). I recommended using a weight of 900 because I had already found that at that level the 95% CI stabilized. But it took my trying from 100 up to 700 in steps of 100, and then using steps of 50 to discover this. You should try using weight values of 500, 700, 800, 900 and 950, to see the convergence to stable values. The suggestion of the largest value that will still permit stable solutions remains vague guidance. This makes this approach perhaps the trickiest to apply in a repeatable manner. If you were to try the analysis above using a wght = 400, then the 95% CI become 825 - 950, rather than 847.5 - 927.5. In such cases there is no deterministic solution so it becomes a case of trying different weights and searching for a solution that is repeatable and consistent.

The 95% CI obtained using this likelihood profile approach are comparable to those from using a bootstrap (856.7 - 927.5) and from using asymptotic errors (849.7 - 927.4). Each of these methods could generate somewhat different answers as a function of the sample size selected. Nevertheless, the consistency between methods suggests they each generate a reasonable characterization of the variation inherent in the combination of this model and this data.

6.7 Bayesian Posterior Distributions

Fitting model parameters to observed data can be likened to searching for the location of the maximum likelihood on a multi-dimensional likelihood surface implied by a model and a given data set. If the likelihood surface is very steep in the vicinity of the optimum, then the uncertainty associated with those parameters would be relatively small. Conversely, if the likelihood surface was comparatively flat near the optimum, this would imply that similar likelihoods could be obtained from rather different parameter values, and the uncertainty around those parameters and any related model outputs would therefore be expected to be relatively high. Similar arguments can be made if there is strong parameter correlations. We are ignoring the complexities of dealing with highly multi-dimensional parameter spaces in more complex models because the geometrical concepts of steepness and surface still apply to multi-dimensional likelihoods.

As we have seen, if we assume that the likelihood surface is multi-variate normal in the vicinity of the optimum, then we can use asymptotic standard errors to define confidence intervals around parameter estimates. However, for many variables and model outputs in fisheries that might be a very strong assumption. The estimated likelihood profile for the Schaefer \(K\) parameter was, for example, relatively skewed, Figure6.12. Ideally, we would use methods that characterized the likelihood surface in the vicinity of the optimum solution independently of any predetermined probability density function. If we could manage that, we could use the equivalent of percentile methods to provide estimates of the confidence intervals around parameters and model outputs.

With formal likelihood profiles we can conduct a search across the parameter space to produce a 2-dimensional likelihood surface. However, formal likelihood profiles for more than two parameters, perhaps using such a grid search, would be very clumsy and more and more impractical as the number of parameters increased further. What is needed is a method of integrating across many dimensions at once to generate something akin to a multi-dimensional likelihood profile. In fact, there are a number of ways of doing this, including Sampling Importance Sampling (SIR; see McAllister and Ianelli, 1997; and McAllister et al, 1994), and Markov Chain Monte Carlo (MCMC ; see Gelman et al 2013). In the examples to follow we will implement the MCMC methodology. There are numerous alternative algorithms for conducting an MCMC, but we will focus on a relatively flexible approach called the Gibbs-within-Metropolis sampler (or sometimes Metropolis-Hastings-within-Gibbs). The Metropolis algorithm (Metropolis et al, 1953), which started as two-dimensional integration, was generalized by Hastings (1970), hence Metropolis-Hastings. In the literature you will find reference to the Markov Chain Monte Carlo but also the Monte Carlo Markov Chain. The former is used by the standard reference used in fisheries (Gelman et al, 2013) although personally I find the idea of a Monte Carlo approach to generating a Markov Chain more intuitively obvious. Despite this potential confusion I would suggest sticking with MCMC when writing and if necessary ignore my intuitions and use Markov Chain Monte Carlo. Such things are not sufficiently important to spend time worrying about, except sometimes the differences can actually mean something (such confusions remain a problem, but as long as you are aware of such pitfalls you can avoid them!).

An MCMC, obviously, uses a Markov chain to trace over the multi-dimensional likelihood surface. A Markov chain describes a process whereby each state is determined probabilistically from the previous state. A random walk would constitute one form of Markov chain, and its final state would be a description of a random distribution. However, the aim here is to produce a Markov chain whose final equilibrium state, the so-called stationary distribution, provides a description of the target or posterior distribution of Bayesian statistics.

The Markov chain starts with some combination of parameter values, the available observed data, and the model being used, and these together define a location in the likelihood space. Depending on the set of parameter values, the likelihood can obviously be small or large (with an upper limit of the model’s maximum likelihood ). The MCMC process entails iteratively stepping through the parameter space following a set of rules based on the likelihood of each new candidate parameter set relative to the previous set, to determine which steps will become part of the Markov chain. The decision being made each iteration is which parameter vectors will be accepted and which rejected? Each step of the process entails the production of a new candidate set of parameter values, which is done stochastically (hence Markov Chain Monte Carlo) and, in the Gibbs-within-Metropolis, one parameter at a time (Casella and George, 1992; Millar and Meyer, 2000). Each of these new candidate sets of parameters, combined with the available data and the model, defines a new likelihood. Whether this new parameter combination is accepted as the next step in the Markov chain depends on how much the likelihood has been changed. In all cases where the likelihood increases the step is accepted, which seems reasonable. Now comes the important part, where the likelihood decreases it can still be accepted if the ratio of the new likelihood relative to the old likelihood is larger than some uniform random number (between 0 and 1; see the equations below).

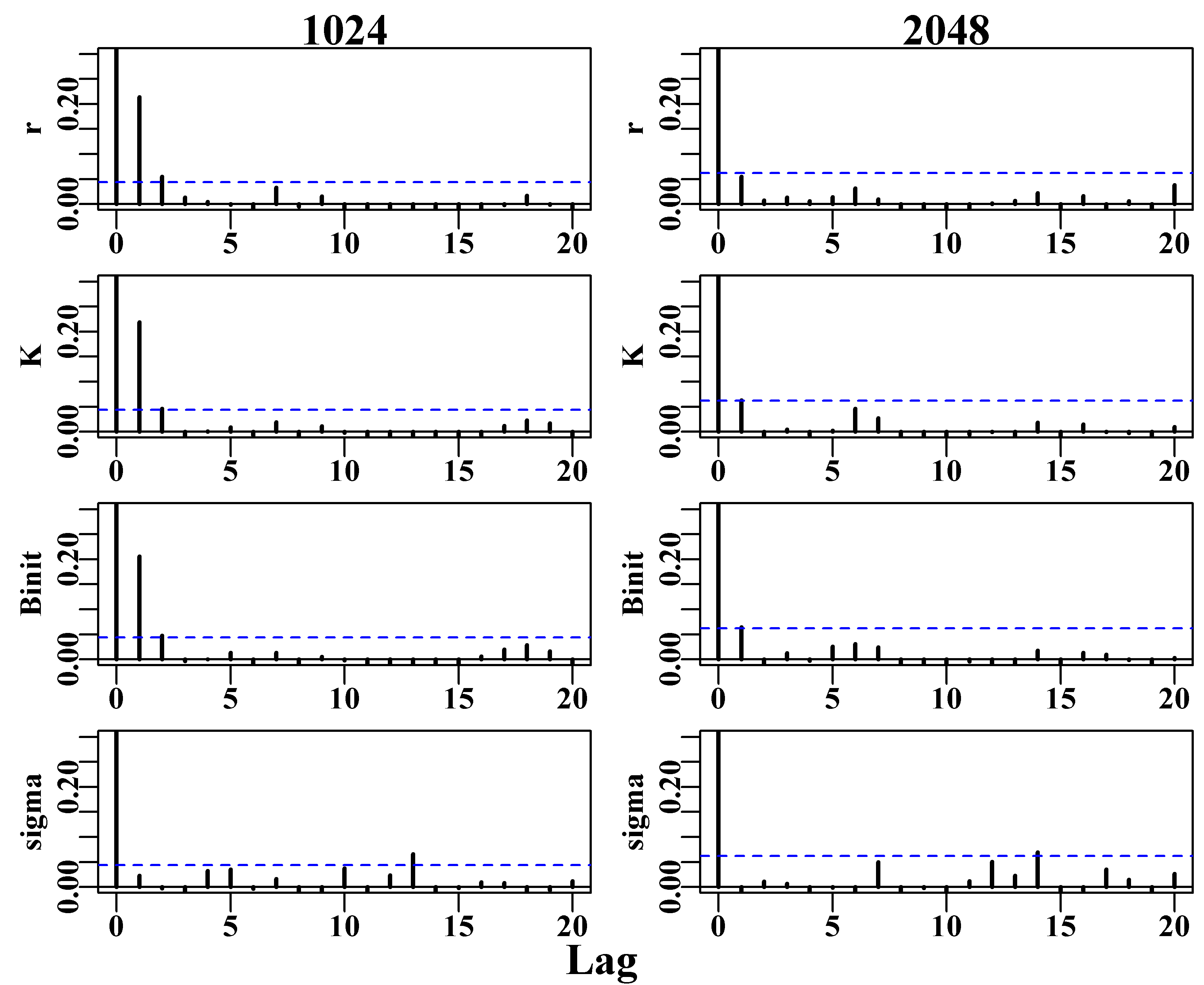

There is a further constraint that relates to parameter correlations and the fact that the Monte Carlo process is likely to lead to auto-correlation between sequential parameter sets. If there is significant serial correlation among parameter sets this can generate biased conclusions about the full extent of variation across the parameter space. The solution used is to thin out the resulting chain so that the final Markov chain will contain only every \(n^{th}\) point. The trick is to select this thinning rate so that the serial correlation between the \(n^{th}\) parameter vectors is sufficiently small that it no longer influences the overall variance. Among other things we will explore this thinning rate in the practical examples.

6.7.1 Generating the Markov Chain

A Markov chain can be generated if the likelihood of an initial set of a specific model’s parameters \(\theta_{t}\), can be defined given a set of data \(x\), and the Bayesian prior probability of the parameter set, \(L(\theta_t)\):

\[\begin{equation} L_t=L(\theta_t | x) \times L(\theta_t) \tag{6.18} \end{equation}\]

We can then generate a new trial or candidate (\(C\)) parameter set \(\theta^C\) by randomly incrementing at least one of the parameters in \({\theta^C} = {\theta_{t}} + \Delta{\theta_t}\), which will alter the implied likelihood:

\[\begin{equation} L^C = L(\theta^C | x) \times L(\theta_t) \tag{6.19} \end{equation}\]

If the ratio of \(L^C\) and \(L_t\) is greater than 1, then the jump from \(\theta_t\) to \(\theta^C\) is accepted into the Markov chain (\(\theta_{t+1} = \theta^C\)).

\[\begin{equation} r = \frac{L^C}{L_t} = \frac{L \left (\theta^C | x \right ) \times L(\theta_t)}{L \left (\theta_t | x\right ) \times L(\theta_t)} > 1.0 \tag{6.20} \end{equation}\]