Chapter 4 Model Parameter Estimation

4.1 Introduction

One of the more important aspects of modelling in ecology and fisheries science relates to the fitting of models to data. Such model fitting requires:

data from a process of interest in nature (samples, observations),

explicitly selecting a model structure suitable for the task in hand (model design and then selection - big subjects in themselves),

explicitly selecting probability density functions to represent the expected distribution of how predictions of the modelled process will differ from observations from nature when compared (choosing residual error structures), and finally,

searching for the model parameters that optimize the match between the predictions of the model and any observed data (the criterion of model fit).

Much of the skill/trickery/magic that is involved when fitting models to data centers on that innocent looking word or concept optimize in the last requirement above. This is an almost mischievous idea that can occasionally lead one into trouble, though it is also a challenge and can often be fun. The different ways of generating a so-called best-fitting model is an important focus for this chapter. It centers around the idea of what criterion to use when describing the quality of model fit to the available data and how then to implement the explicitly selected criteria.

I keep using the term “explicit”, and for good reason, but some clarification is needed. Very many people will have experienced fitting linear regressions to data but, I am guessing from experience, far fewer people realize that when they fit such a model they are assuming the use of additive normal random residual errors (normal errors) and that they are minimizing the sum of the square of those residuals. In terms of the four requirements above, when applying a linear regression to a data set, the assumption of a linear relationship answers the second requirement, the use of normal errors (with the additional assumption of a constant variance), answers the third requirement, and the minimization of the sum-of squares is the choice that meets the fourth requirement. As is generally the case, it is better to understand explicitly what one is doing rather than just operating out of habit or copying others. In order to make the most appropriate choice of these model fitting requirements (i.e. make selections that can be defended), an analyst also needs an understanding of the natural process being modelled. One can assume and assert almost anything but only so long as such selections can be defended validly. As a more general statement, if one cannot defend a set of choices then one should not make them.

4.1.1 Optimization

In Microsoft Excel, when fitting models to data, the optimum model parameters are found using the built-in Excel Solver. This involves setting up a spreadsheet so that the criterion of optimum fit (sum-of-squares, maximum likelihood, etc, see below) is represented by the contents of a single cell, and the model parameters and data used were contained in other inter-related cells. Altering a model’s parameters would alter the predicted values generated, and this in turn would alter the value of the criterion of optimum fit. A ‘best’ parameter set can be found by searching for the parameters that optimize the match between the observed and the predicted values. It sounds straightforward but turns out to be quite an art form with many assumptions and decisions to be made along the way. In the Excel Solver, one identified the cells containing the model parameters and the Solver’s internal code would then modify those values while monitoring the “criterion of best fit” cell until either a minimum (or maximum) value was found (or an exception was encountered). Effectively such a spreadsheet set-up constitutes the syntax for using the Solver within Excel. We will be using solver or optimization functions in R and they too have a required syntax, but it is no more complex than setting up a spreadsheet, it is just rather more abstract.

Model fitting is usually relatively straightforward when modelling a non-dynamic process to a single set of data, such as length-at-age, with perhaps just two to six parameters. However, it can become much more involved when perhaps dealing with a population’s dynamics through time involving recruitment, the growth of individuals, natural mortality, and fishing mortality from multiple fishing fleets. There may be many types of data, and possibly many 10’s or even 100’s of parameters. In such circumstances, to adjust the quality of the fit of predicted values to observed values some form of automated optimization or non-linear solver is a necessity.

Optimization is a very large subject of study and the very many options available are discussed at length in the CRAN Task View: Optimization and Mathematical Programming found at https://cran.r-project.org/. In the work here, we will mainly use the built-in function nlm() (try ?nlm) but there are many alternatives (including nlminb(), optim(), and others). If you are going to be involved in model fitting it is really worthwhile reading the Task View on optimization on R-CRAN and, as a first step, explore the help and examples given for the nlm() and the optim() functions.

It is sometimes possible to guess a set of parameters that generate what appears to be a reasonable visual fit to the available data, at least for simple static models. However, while such fitting-by-eye may provide usable starting points for estimating a model’s parameters it does not constitute a defensible criterion for fitting models to data. This is so because my fitting-by-eye (or wild-stab-in-the-dark) may well differ from your fitting-by-eye (or educated-guess). Instead of using such opinions some more formally defined criteria of quality of model fit to data is required.

The focus here will be on how to set-up R code to enable model parameter estimation using either least squares or maximum likelihood, especially the latter. Our later consideration of Bayesian methods will be focussed primarily on the characterization of uncertainty. We will illustrate the model fitting process through repeated examples and associated explanations. The objective is that reading this section should enable the reader to set up their own models to solve for the parameter values. We will attempt to do this in a general way that should be amenable to adaptation for many problems.

4.2 Criteria of Best Fit

There are three common options for determining what constitutes an optimum model fit to data.

In general terms, model fitting can involve the minimization of the sum of squared residuals (ssq()) between the values of the observed variable \(x\) and those predicted by candidate models proposed to describe the modelled process, \(\hat{x}\):

\[\begin{equation} ssq=\sum\limits_{i=1}^{n}{({x_i}-{\hat{x}_{i}}}{{)}^{2}} \tag{4.1} \end{equation}\]

where \(x_i\) is observation i from n observations and \({\hat{x}_{i}}\) is the model predicted value for a given observation i (for example, if \(x_i\) was the length-at-age for fish \(i\), then the \({\hat{x}_{i}}\) would be the predicted length-at-age for fish \(i\) derived from some candidate growth model). The use of the \(\hat{}\) in \(\hat{x}\) indicates a predicted value of \(x\).

Alternatively, model fitting can involve minimizing the negative log-likelihood (in this book -veLL or negLL), which entails determining how likely each observed data point is given 1) a defined model structure, 2) a set of model parameters, and 3) the expected probability distribution of the residuals. Minimizing the negative log-likelihood is equivalent to maximizing either the product of all likelihoods or the sum of all log-likelihoods for the set of data points. Given a collection of observations x, a model structure that can predict \(\hat{x}\), and a set of model parameters \(\theta\), then the total likelihood of those observations is defined as:

\[\begin{equation} \begin{split} {L} &= \prod\limits_{i=1}^{n}{{L}\left( {x_i|\theta}\right )} \\ {-veLL} &= -\sum\limits_{i=1}^{n}{ \log{({L}\left( {x_i|\theta}\right )})} \end{split} \tag{4.2} \end{equation}\]

where \(L\) is the total likelihood which is \(\prod{{L}\left( {x|\theta}\right )}\) or the product of the probability density (likelihood) for each observation of \(x\) given the parameter values in \(\theta\) (the further from the expected value in each case, \({\hat{x}_{i}}\), the lower the likelihood). \(-veLL\) is the total negative log-likelihood of the observations \(x\) given the candidate model parameters in \(\theta\), which is the negative sum of the n individual log-likelihoods for each data point in \(x\). We use log-likelihoods because most likelihoods are very small numbers, which when multiplied by many other very small numbers can become so small as to risk leading to floating-point overflow computer errors. Log-transformations change multiplications into additions and avoid such risks (the capital pi, \(\Pi\) turns into a capital sigma, \(\Sigma\)).

A third alternative is to use Bayesian methods that use prior probabilities, which are the initial relative weights given to each of the alternative parameter vectors being considered in the model fitting. Bayesian methods combine with and update any prior knowledge of the most likely model parameters (the prior probabilities) with the likelihoods of any new data that become available given the different candidate parameter vectors \(\theta\). The two key difference between Bayesian methods and maximum likelihood methods, for our purposes, is the inclusion of prior likelihoods and the re-scaling of values so that the posterior probabilities sum to a total of 1.0. An important point is that the likelihood of the data given a set of parameters is being converted into a true probability of the parameters given the data. The posterior probability of a particular parameter set \(\theta\) given the data \(x\) is thus defined as:

\[\begin{equation} P(\theta|x)=\frac{L(x|\theta)P(\theta)}{\sum\limits_{i=1}^{n}{\left[ L(x_i|\theta)P(\theta)\right]}} \tag{4.3} \end{equation}\]

where \({P \left (\theta \right)}\) is the prior probability of the parameter set \(\theta\), which is updated by the likelihood of the data, \(x\), given the parameters, \(\theta\), \(L(x|\theta)P(\theta)\), and the divisor \({\sum\limits_{i=1}^{n}{\left[ L\left (x|\theta \right ) P\left(\theta\right)\right]}}\) re-scales or normalizes the result so the sum of the posterior probabilities for all parameter vectors given the data, \(\sum{P(\theta|x)}\), sums to 1.0. Formally, Equ(4.3) is an approximation as the summation in the divisor should actually be an integration across a continuous distribution, but in practice the approximation suffices and is the only practical option when dealing with the parameters of a complex fisheries model whose Posterior distribution has no simple analytical solution.

Here we will focus mainly on minimizing negative log-likelihoods (equivalent to the maximum likelihood). Although the other methods will also be given some attention. The Bayesian methods will receive much more attention when we explore the characterization of uncertainty.

Microsoft Excel is excellent software for many uses but implementing both maximum likelihood and particularly Bayesian methods tends to be slow and clumsy, they are much more amenable to implementation in R.

Identifying the sum-of-squared residuals, maximum likelihood, and Bayesian methods is not an exhaustive list of the criteria it is possible to use when fitting models to data. For example, it is possible to use the ‘sum of absolute residuals’ (SAR) which avoids the problem of combining positive and negative residuals by using their absolute values rather than squaring them (Birkes and Dodge, 1993). Despite the existence of such alternative criteria of optimal model fit we will focus our attention only on the three mentioned. Other more commonly used alternatives include what are known as robustified methods, which work to reduce the effect of outliers, or extreme and assumed to be atypical values, in available data. As stated earlier, optimization is a large and detailed field of study, I commend it to you for study, and good luck with it.

4.3 Model Fitting in R

While a grid search covering the parameter space might be a possible approach to searching for an optimum parameter set it would become more and more unwieldy as the number of parameters increased above two until eventually it would became unworkable. We will not consider that possibility any further. Instead, to facilitate the search for an optimum parameter set, a non-linear optimizer implemented in software is required.

The R system has a selection of different optimization functions, each using a different algorithm (do see the CRAN task view on Optimization). The solver function (nlm()), like the others, needs to be given an initial guess at the parameter values and then those initial parameter values are varied by the optimizer function and at each change the predicted values are recalculated as in the ssq() or negLL(). Optimization functions, such as nlm(), continue to vary the parameter values (how they do this is where the algorithms vary) until a combination is found that is defined to be the ‘best fit’ according to whatever criterion has been selected (or further improvements cannot be found). Fisheries stock assessment models can often have numerous parameters in the order of 10s or 100s (some have many more and require more highly specialized software e.g. Fournier et al, 1998; Fournier et al, 2012; Kristensen et al, 2016). In this book we will not be estimating large numbers of parameters, but the principles remain similar no matter the number.

4.3.1 Model Requirements

Discussing the theory of model fitting is helpful but does not clarify how one would implement fitting a model in R in practice. The optimizing software is used to vary the values within a parameter vector but we need to provide it with the means of repeatedly calculating the predicted values that will be used to compare with the observed values as many times as required to find the optimum solution (if successful). We need to develop chunks of code that can be repeatedly called, which is exactly the purpose for which R functions are designed. To implement model fitting to real world problems within R we need to consider the four formal requirements:

observations (data) from the system under study. This may be a fishery with observed catches, cpue, age- and length-composition of catches, etc., or it may be something simpler such as the observed lengths and associated ages for a sample of fish (but how to get this into R?),

an R function representing a candidate model of the system that when provided with a vector of parameters is used to calculate predicted values for comparison with whatever observed values are available,

an R function calculating the selected criterion of best fit, the minimum least-squares or minimum negative log-likelihood, to enable a comparison of the observed with the predicted values. This needs to be able to return a single value, reflecting the input parameters and data, that can then be minimized by the final required function, which is

an R function (we will tend to use

nlm()) for automatically optimizing the value of the selected criterion of best fit.

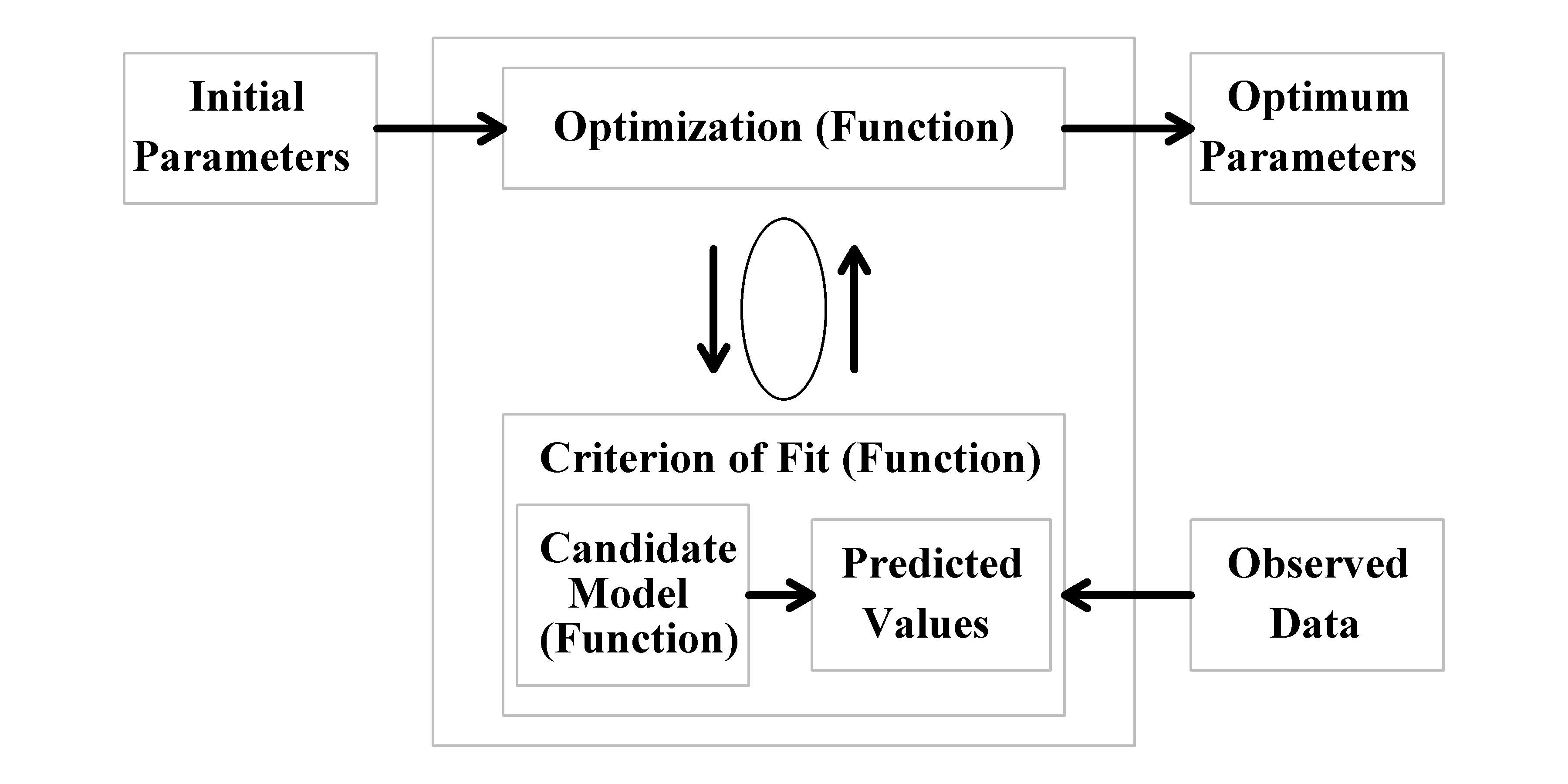

Thus, input data and three functions are needed Figure(4.1) but, because we can use built-in functions to conduct the optimization, model fitting usually entails writing at most two functions, one to calculate the predicted values from whatever model one is using and the other to calculate the criterion of fit (sometimes, in simpler exercises, these two can be combined into one function).

We assume in this book that the reader is at least acquainted with the concepts behind model fitting, as in fitting a linear regression, so we will move straight to non-linear model fitting. The primary aim of these relatively simple examples will be to introduce the use and syntax of the available solvers within R.

Figure 4.1: Inputs, functional requirements, and outputs, when fitting a model to data. The optimization function (here nlm()) minimizes the negative log-likelihood (or sum-of squares) and requires an initial parameter vector to begin. In addition, the optimizer requires a function (perhaps negLL()) to calculate the corresponding negative log-likelihood for each vector of parameters it produces in its search for the minimum. To calculate the negative log-likelihood requires a function (perhaps vB()) to generate predicted values for comparison with the input observed values.

4.3.2 A Length-at-Age Example

Fitting a model to data simply means estimating the model’s parameters so that its predictions match the observations as well as it can according to the criterion of best-fit chosen. As a first illustration of fitting a model to data in R we will use a simple example of fitting the well-known von Bertalanffy growth curve (von Bertalanffy, 1938), to a set of length-at-age data. Such a data set is included in the R package MQMF (try ?LatA). To use you own data one option is to produce a comma separated variable (csv) file with a minimum of columns of ages and lengths each with a column name (LatA has columns of just age and length; see its help page). Such csv files can be read into R using laa <- read.csv(file="filename.csv", header=TRUE).

The von Bertalanffy length-at-age growth curve is described by:

\[\begin{equation} \begin{split} & {{\hat{L}}_t}={L_{\infty}}\left( 1-{e^{\left( -K\left( t-{t_0} \right) \right)}} \right) \\ & {L_t}={L_{\infty}}\left( 1-{e^{\left( -K\left( t-{t_0} \right) \right)}} \right)+\varepsilon \\ & {L_t}= {\hat{L}}_t + \varepsilon \end{split} \tag{4.4} \end{equation}\]

where \({\hat{L}_t}\) is the expected or predicted length-at-age \(t\), \({L_\infty}\) (pronounced L-infinity) is the asymptotic average maximum body size, \(K\) is the growth rate coefficient that determines how quickly the maximum is attained, and \(t_0\) is the hypothetical age at which the species has zero length (von Bertalanffy, 1938). This non-linear equation provides a means of predicting length-at-age for different ages once we have estimates (or assumed values) for \({L_\infty}\), \(K\), and \(t_0\). When we are fitting models to data we use either of the the bottom two equations in Equ(4.4), where the \(L_t\) are the observed values and these are equated to the predicted values plus a normal random deviate \(\varepsilon = N(0,\sigma^2)\), each value of which could be negative or positive (the observed value can be larger or smaller than the predicted length for a given age). The bottom equation is really all about deciding what residual errors structure to use. In this chapter we will describe an array of alternative error structures used in ecology and fisheries. Not all of them are additive and some are defined using functional relationships rather than constants (such as \(\sigma\)).

The statement about Equ(4.4) being non-linear was made explicitly because earlier approaches to estimating parameter values for the von Bertalanffy (vB()) growth curve involved various transformations that aimed approximately to linearize the curve (e.g. Beverton and Holt, 1957). Fitting the von Bertalanffy curve was no minor undertaking in the late 1950s and 1960s. Happily, such transformations are no longer required, and such curve fitting has become straightforward.

4.3.3 Alternative Models of Growth

There is a huge literature on the study of individual growth and many different models are used to describe the growth of organisms (Schnute and Richards, 1990). The von Bertalanffy (vB()) curve has dominated fisheries models since Beverton and Holt (1957) introduced it more widely to fisheries scientists. However, just because it is very commonly used does not necessarily mean it will always provide the best description of growth for all species. Model selection is a vital if often neglected aspect of fisheries modelling (Burnham and Anderson, 2002; Helidoniotis and Haddon, 2013). Two alternative possibilities to the vB() for use here are the Gompertz growth curve (Gompertz, 1925):

\[\begin{equation} {{\hat{L}}_{t}}=a{{e}^{-b{{e}^{ct}}}}\text{ or }{{\hat{L}}_{t}}=a \exp (-b \exp (ct)) \tag{4.5} \end{equation}\]

and the generalized Michaelis-Menten equation (Maynard Smith and Slatkin, 1973; Legendre and Legendre, 1998):

\[\begin{equation} {{\hat{L}}_{t}}=\frac{at}{b+{{t}^{c}}} \tag{4.6} \end{equation}\]

each of which also have three parameters a, b, and c, and each can provide a convincing description of empirical data from growth processes. Biological interpretations (e.g. maximum average length) can be given to some of the parameters but in the end these models provide only an empirical description of the growth process. If the models are interpreted as reflecting reality this can lead to completely implausible predictions such as non-existent meters-long fish (Knight, 1968). In the literature the parameters can have different symbols (for example, Maynard Smith and Slatkin (1973), use R0 instead of a for the Michaelis-Menton ) but the underlying structural form remains the same. After fitting the von Bertalanffy growth curve to the fish in MQMF’s length-at-age data set LatA, we can use these different models to illustrate the value of trying such alternatives and maintaining an open mind with regard to which model one should use. This issue will arise again when we discuss uncertainty because we can get different results from different models. Model selection is one of the big decisions to be made when modelling any natural process. Importantly, trying different models in this way will also reinforce the processes involved when fitting models to data.

4.4 Sum of Squared Residual Deviations

The classical method for fitting models to data is known as ‘least sum of squared residuals’ (see Equs(4.1) and (4.7)), or more commonly ‘least-squares’ or ‘least-squared’. The method has been attributed to Gauss (Nievergelt, 2000, refers to a translation of Gauss’ 1823 book written in Latin: Theoria combinationis observationum erroribus minimis obnoxiae). Whatever the case, the least-squares approach fits into a strategy used for more than two centuries for defining the best fit of a set of predicted values to those observed. This strategy is to identify a so-called objective function (our criterion of best-fit) which can either be minimized or maximized depending on how the function is structured. In the case of the sum-of-squared residuals one subtracts each predicted value from its associated observed value, square the separate results (to avoid negative values), sum all the values and use mathematics (the analytical solution) or some other approach to minimize that summation:

\[\begin{equation} ssq=\sum\limits_{i=1}^{n}{{{\left( {{O}_{i}}-{\hat{E}_{i}} \right)}^{2}}} \tag{4.7} \end{equation}\]

where, \(ssq\) is the sum-of-squared residuals of \(n\) observations, \(O_i\) is observation \(i\), and \(\hat{E}_i\) is the expected or predicted value for observation \(i\). The function ssq() within MQMF is merely a wrapper surrounding a call to whatever function is used to generate the predicted values and then calculates and returns the sum-of-squared deviations. It is common that we will have to create new functions as wrappers for different problems depending on their complexity and data inputs. ssq() illustrates nicely the fact that in among the arguments that one might pass to a function it is also possible to pass other functions (in this case, within ssq(), we have called the passed a function to funk, but of course when using ssq() we input the actual function relating to the problem at hand, perhaps vB, note with no brackets when used as a function argument).

4.4.1 Assumptions of Least-Squares

A major assumption of least squares methodology is that the residual error terms exhibit a Normal distribution about the predicted variable with equal variance for all values of the observed variable; that is, in the \(\varepsilon=N(0,\sigma^2)\) the \(\sigma^2\) is a constant. If data are transformed in any way, the transformation effects on the residuals may violate this assumption. Conversely, a transformation may standardize the residual variances if they vary in a systematic way. Thus, if data are log-normally distributed then a log-transformation will normalize the data and least squares can then be used validly. As always, a consideration or visualization of the form of both the data and the residuals, resulting from fitting a model, is good practice.

4.4.2 Numerical Solutions

Most of the interesting problems in fisheries science do not have an analytical solution (e.g. as available for linear regression) and it is necessary to use numerical methods to search for an optimum model fit using a defined criterion of “best fit”, such as the minimum sum of squared residuals (least-squares). This will obviously involve a little R programming, but a big advantage of R is that once you have a set of analyses developed it can become straightforward to apply them to a new set of data.

In the examples below we use some of the utility functions from MQMF to help with the plotting. But for fitting and comparing the three different growth models defined above we need five functions, four of which we need to write. The first three are used to estimate the predicted values of length-at-age used to compare with the observed data. This example has three alternative model functions, vB(), Gz(), and mm(), one each for the three different growth curves. The fourth function is needed to calculate the sum-of-squared residuals from the predicted values and their related observations. Here we are going to be using the MQMF function ssq() (whose code you should examine and understand). This function returns a single value which is to be minimized by the final function, nlm(), which is needed to do the minimization in an automated manner. The R function nlm(), uses a user-defined generalized function, that it refers to as f (try args(nlm), or formals(nlm) to see the full list of arguments), for calculating the minimum (in this case of ssq()), which, in turn, uses the function defined for predicted lengths-at-age from the growth curve (e.g. vB()). Had we used a different growth curve function (e.g. Gz()) we only have to change everywhere the nlm() calling code points to vB() to Gz() and modify the parameter values to suit the Gz() function for the code to produce a usable result. Fundamentally, nlm() minimizes ssq() by varying the input parameters (which it refers to as p) that alter the outcome of the growth function vB(), Gz(), or mm(), whichever is selected.

nlm() is just one of the functions available in R for conducting non-linear optimization, alternatives include optim() and nlminb() (do read the documentation in ?nlm, and the task view on optimization on CRAN lists packages aimed at solving optimization problems).

#setup optimization using growth and ssq

data(LatA) # try ?LatA assumes library(MQMF) already run

#convert equations 4.4 to 4.6 into vectorized R functions

#These will over-write the same functions in the MQMF package

vB <- function(p, ages) return(p[1]*(1-exp(-p[2]*(ages-p[3]))))

Gz <- function(p, ages) return(p[1]*exp(-p[2]*exp(p[3]*ages)))

mm <- function(p, ages) return((p[1]*ages)/(p[2] + ages^p[3]))

#specific function to calc ssq. The ssq within MQMF is more

ssq <- function(p,funk,agedata,observed) { #general and is

predval <- funk(p,agedata) #not limited to p and agedata

return(sum((observed - predval)^2,na.rm=TRUE))

} #end of ssq

# guess starting values for Linf, K, and t0, names not needed

pars <- c("Linf"=27.0,"K"=0.15,"t0"=-2.0) #ssq should=1478.449

ssq(p=pars, funk=vB, agedata=LatA$age, observed=LatA$length) # [1] 1478.449The ssq() function replaces the MQMF::ssq() function in the global environment, but it also returns a single number, e.g. the 1478.449 above, which is the first input to the nlm() function and is what gets minimized.

4.4.3 Passing Functions as Arguments to other Functions

In the last example we defined some of the functions required to fit a model to data. We defined the growth models we were going to compare and we defined a function to calculate the sum-of-squares. A really important aspect of what we just did was that to calculate the sum-of-squares we passed the vB() function as an argument to the ssq() function. What that means is that we have passed a function that has arguments as one of the arguments to another function. You can see the potential for confusion here so it is necessary to concentrate and keep things clear. Currently, the way we have defined ssq() this all does not seem so remarkable because we have explicitly defined the arguments to both functions in the call to ssq(). But R has some tricks up its sleeve that we can use to generalize such functions that contain other functions as arguments and the main one uses the magic of the ellipsis .... With any R function, unless an argument has a default value set in its definition, each argument must be given a value. In the ssq() function above we have included both the arguments used only by ssq() (funk and observed), and those used only by the function funk (p and agedata). This works well for the example because we have deliberately defined the growth functions to have identical input arguments but what if the funk we wanted to use had different inputs, perhaps because we were fitting a selectivity curve and not a growth curve? Obviously we would need to write a different ssq() function. To allow for more generally useful functions that can be re-used in many more situations, the writers of R (R Core Team, 2019) included this concept of ..., which will match any arguments not otherwise matched, and so can be used to input the arguments of the funk function. So, we could re-define ssq() thus:

# Illustrates use of names within function arguments

vB <- function(p,ages) return(p[1]*(1-exp(-p[2] *(ages-p[3]))))

ssq <- function(funk,observed,...) { # only define ssq arguments

predval <- funk(...) # funks arguments are implicit

return(sum((observed - predval)^2,na.rm=TRUE))

} # end of ssq

pars <- c("Linf"=27.0,"K"=0.15,"t0"=-2.0) # ssq should = 1478.449

ssq(p=pars, funk=vB, ages=LatA$age, observed=LatA$length) #if no

ssq(vB,LatA$length,pars,LatA$age) # name order is now vital! # [1] 1478.449

# [1] 1478.449This means the ssq() function is now much more general and can be used with any input function that can be used to generate a set of predicted values for comparison with a set of observed values. This is how the ssq() function within MQMF is implemented; read the help ?ssq. The general idea is that you must define all arguments used within the main function but any arguments only used within the called function (here called funk), can be passed in the …. It is always best to explicitly name the arguments so that their order does not matter, and you need to be very careful with typing as if you misspell the name of an argument passed through the … this will not always throw an error! For example, using a uppercase LatA$Age rather than LatA$age does not throw an error but leads to a result of zero rather than 1478.440. This is because LatA$Age = NULL, which is a valid if incorrect input. Clearly the … can be very useful but it is also inherently risky if you type as badly as I do.

# Illustrate a problem with calling a function in a function

# LatA$age is typed as LatA$Age but no error, and result = 0

ssq(funk=vB, observed=LatA$length, p=pars, ages=LatA$Age) # !!! # [1] 0And if you were rushed and did not bother naming the arguments that too will fail if you get the arguments out of order. If, for example, you were to input ssq(LatA$length, vB, pars, LatA$age) instead of ssq(vB, LatA$length, pars, LatA$age) then you will get an error: Error in funk(…) : could not find function “funk”. You might try that yourself just to be sure. It rarely hurts to experiment with your code, you cannot break your computer and you might learn something.

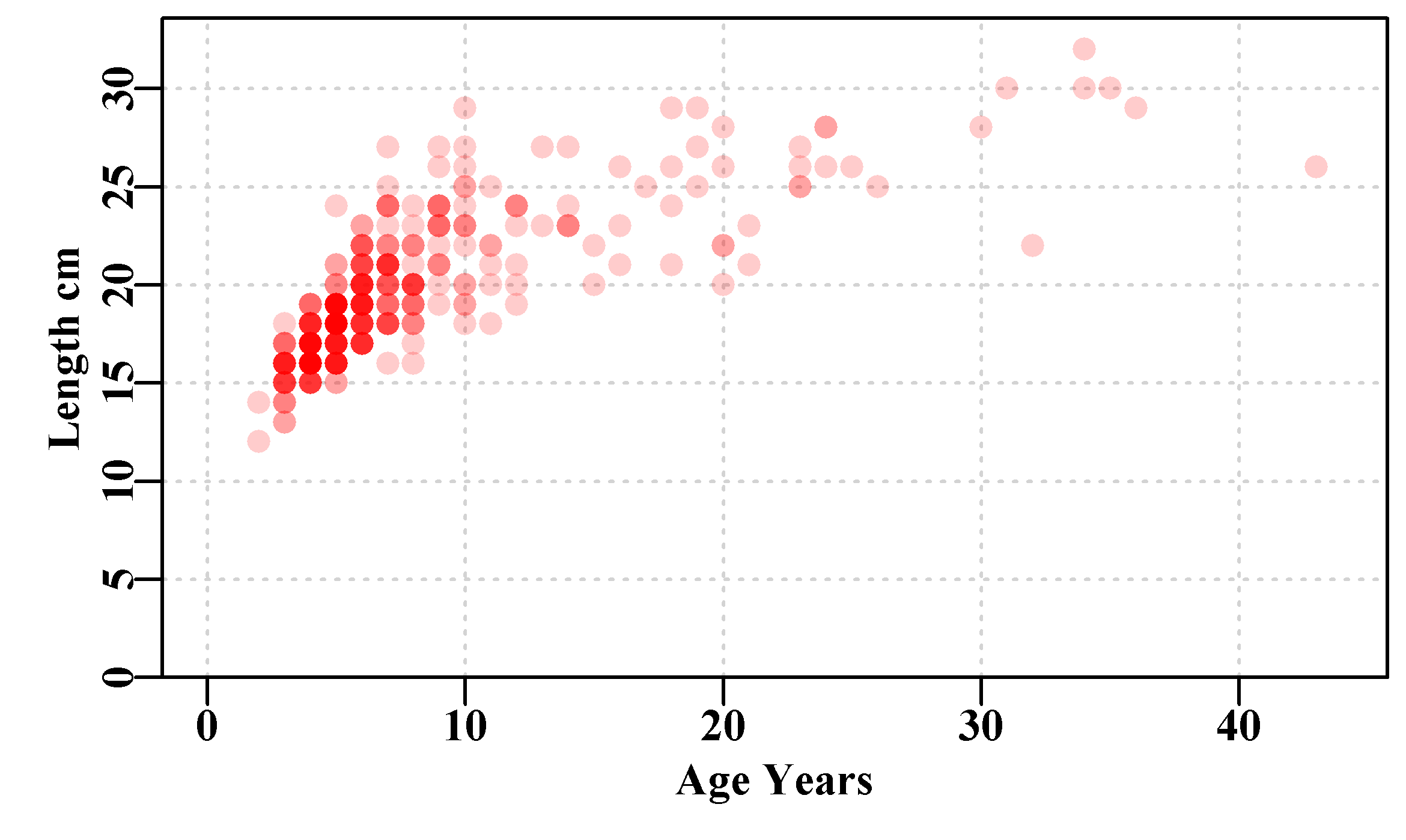

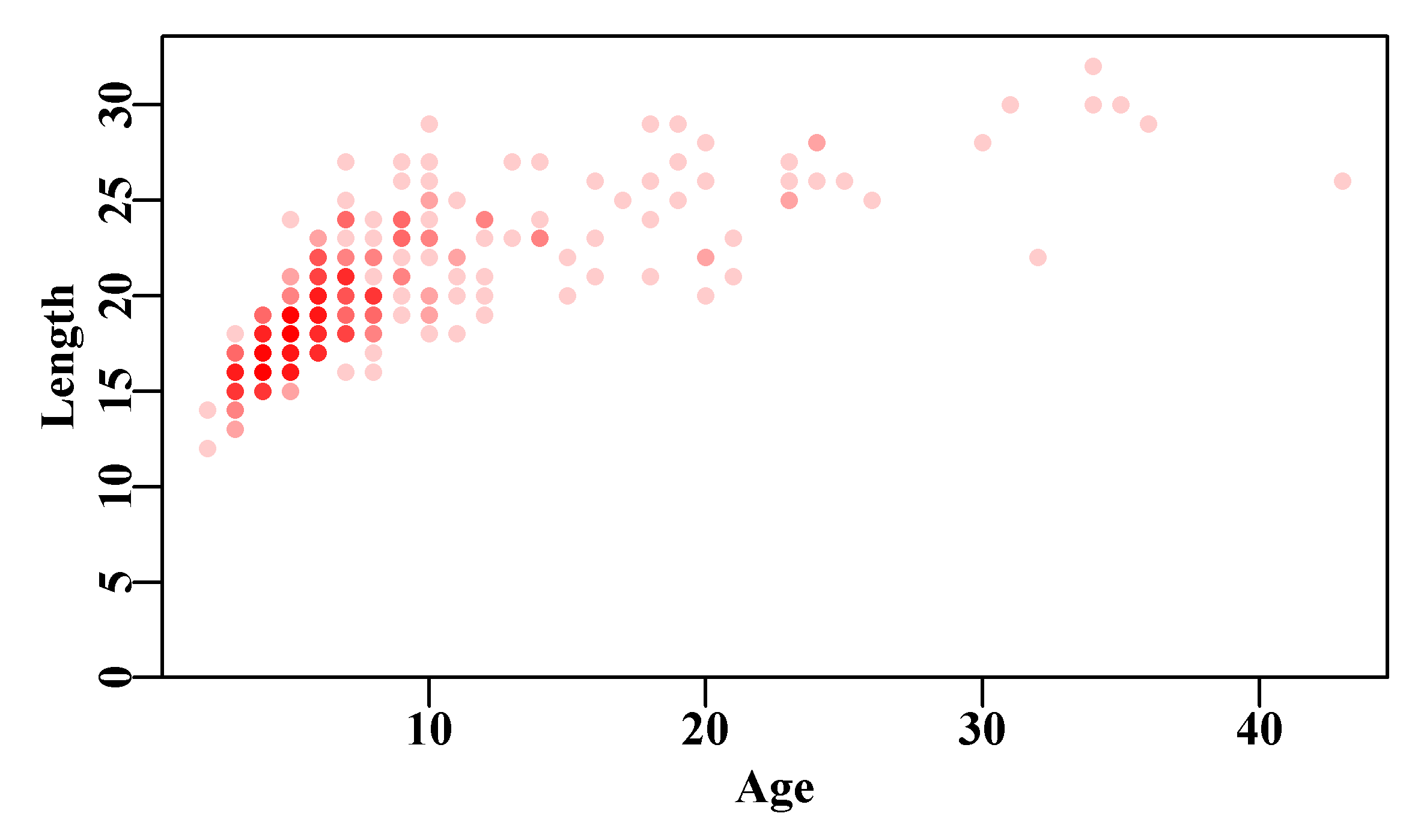

4.4.4 Fitting the Models

If we plot up the LatA data set Figure(4.2), we see some typical length-at-age data. There are 358 points (try dim(LatA)) and many lie on top of each other, but the relative sparseness of fish in the older age classes becomes apparent when we use the rgb() option within the plot() function to vary the transparency of colour in the plot. Alternatively, we could use jitter() to add noise to each plotted point’s position to see the relative density of data points. Whenever you are dealing with empirical data it is invariably worth your time to at least plot it up and otherwise explore its properties.

#plot the LatA data set Figure 4.2

parset() # parset and getmax are two MQMF functions

ymax <- getmax(LatA$length) # simplifies use of base graphics. For

# full colour, with the rgb as set-up below, there must be >= 5 obs

plot(LatA$age,LatA$length,type="p",pch=16,cex=1.2,xlab="Age Years",

ylab="Length cm",col=rgb(1,0,0,1/5),ylim=c(0,ymax),yaxs="i",

xlim=c(0,44),panel.first=grid())

Figure 4.2: Simulated female length-at-age data for 358 Redfish, Centroberyx affinis, based on samples from eastern Australia. Full intensity colour means >= 5 points.

Rather than continuing to guess parameter values and modifying them by hand we can use nlm() (or optim(), or nlminb(), which have different syntax for their use) to fit growth models or curves to the selected LatA data. This will illustrate the syntax of nlm() but also the use of two more MQMF utility R functions magnitude() and outfit() (check out ?nlm, ?magnitude, and ?outfit). You might also look at the code in each function (enter each function’s name into the console without arguments or brackets). From now on I will prompt you less often to check out the details of functions that get used, but if you see a function that is new to you hopefully, by now, it makes sense to review its help, its syntax, and especially its code, just as you might look at the contents of each variable that gets used.

Each of the three growth models require the estimation of three parameters and we need initial guesses for each to start off the nlm() solver. All we do is provide values for each of the nlm() function’s parameters/arguments. In addition, we have used two extra arguments, typsize and iterlim. The typsize is defined in the nlm() help as “an estimate of the size of each parameter at the minimum”. Including this often helps to stabilize the searching algorithm used as it works to ensure that the iterative changes made to each parameter value are of about the same scale. A very common alternative approach, which we will use with more complex models, is to log-transform each parameter when input into nlm() and back-transform them inside the function called to calculate the predicted values. However, this could only work where the parameters are guaranteed to always stay positive. With the von Bertalanffy curve, for example, the \(t_0\) parameter is often negative and so magnitude() should be used instead of the log-transformation strategy. The default iterlim=100 means a maximum of 100 iterations, which is sometimes not enough, so if 100 are reached you should expand this number to, say, 1000. You will quickly notice that the only thing changed between each model fitting effort is the function that funk, inside ssq(), is pointed at and the starting parameter values. This is possible by consciously designing the growth functions to use exactly the same arguments (passed via …). You could also try running one or two of these without setting the typsize and interlim options. Notice also that we have run the Michaelis-Menton curve with two slightly different starting points.

# use nlm to fit 3 growth curves to LatA, only p and funk change

ages <- 1:max(LatA$age) # used in comparisons

pars <- c(27.0,0.15,-2.0) # von Bertalanffy

bestvB <- nlm(f=ssq,funk=vB,observed=LatA$length,p=pars,

ages=LatA$age,typsize=magnitude(pars))

outfit(bestvB,backtran=FALSE,title="vB"); cat("\n")

pars <- c(26.0,0.7,-0.5) # Gompertz

bestGz <- nlm(f=ssq,funk=Gz,observed=LatA$length,p=pars,

ages=LatA$age,typsize=magnitude(pars))

outfit(bestGz,backtran=FALSE,title="Gz"); cat("\n")

pars <- c(26.2,1.0,1.0) # Michaelis-Menton - first start point

bestMM1 <- nlm(f=ssq,funk=mm,observed=LatA$length,p=pars,

ages=LatA$age,typsize=magnitude(pars))

outfit(bestMM1,backtran=FALSE,title="MM"); cat("\n")

pars <- c(23.0,1.0,1.0) # Michaelis-Menton - second start point

bestMM2 <- nlm(f=ssq,funk=mm,observed=LatA$length,p=pars,

ages=LatA$age,typsize=magnitude(pars))

outfit(bestMM2,backtran=FALSE,title="MM2"); cat("\n") # nlm solution: vB

# minimum : 1361.421

# iterations : 24

# code : 2 >1 iterates in tolerance, probably solution

# par gradient

# 1 26.8353971 -1.133838e-04

# 2 0.1301587 -6.195068e-03

# 3 -3.5866989 8.326176e-05

#

# nlm solution: Gz

# minimum : 1374.36

# iterations : 28

# code : 1 gradient close to 0, probably solution

# par gradient

# 1 26.4444554 2.724757e-05

# 2 0.8682518 -6.455226e-04

# 3 -0.1635476 -2.046463e-03

#

# nlm solution: MM

# minimum : 1335.961

# iterations : 12

# code : 2 >1 iterates in tolerance, probably solution

# par gradient

# 1 20.6633224 -0.02622723

# 2 1.4035207 -0.37744316

# 3 0.9018319 -0.05039283

#

# nlm solution: MM2

# minimum : 1335.957

# iterations : 25

# code : 1 gradient close to 0, probably solution

# par gradient

# 1 20.7464274 8.465730e-06

# 2 1.4183164 -3.856475e-05

# 3 0.9029899 -1.297406e-04These are numerical solutions and they do not guarantee a correct solution. Notice that the gradients in the first Michaelis-Menton solution (that started at 26.2, 1, 1) are relatively large and yet it’s SSQ, at 1335.96, is very close to the second Michaelis-Menton model fit and smaller (better) than either the vB or Gz curves. However, the gradient values indicate that this model fit can, and should be, improved. If you alter the initial parameter estimate for parameter a (the first MM parameter) down to 23 instead of 26.2, as in the last model fit, we obtain slightly different parameter values, a slightly smaller SSQ, and much smaller gradients giving greater confidence that the result is a real minimum. As it happens, if one were to run cbind(mm(bestMM1$estimate,ages), mm(bestMM2$estimate,ages)) you can work out that the predicted values differ from -0.018 to 0.21% while, if you include the vB predictions, MM2 differs from the vB predictions from -6.15 to 9.88% (ignoring the very largest deviation of 40.6%). You could also try omitting the typsize argument from the estimate of the vB model, which will still give the optimal result but inspect the gradients to see why using typsize helps the optimization along. When setting up these examples, occasional runs of the Gz() model gave rise to a comment that the steptol might be too small and changing it from the default of 1e-06 to 1e-05 quickly fixed the problem. If it happens to you, add a statement ,steptol=1e-05 to the nlm() command and see if the diagnostics improve.

The obvious conclusion is that one should always read the diagnostic comments from nlm(), consider the gradients of the solution one obtains, and it is always a good idea to use multiple sets of initial parameter guesses to ensure one has a stable solution. Numerical solutions are based around software implementations and the rules used to decide when to stop iterating can sometimes be fooled by sub-optimal solutions. The aim is to find the global minimum not a local minimum. Any non-linear model can give rise to such sub-optimal solutions so automating such model fitting is not a simple task. Never assume that the first answer you get in such circumstances is definitely the optimum you are looking for, even if the plotted model fit looks acceptable.

Within a function call if you name each argument then the order does not strictly matter, but I find that consistent usage simplifies reading the code so would recommend using the standard order even when using explicit names. If we do not use explicit names the syntax for nlm() requires the function to be minimized (f) to be defined first. It also expects the f function, whatever it is, to use the initial parameter guess in the p argument, which, if unnamed must come second. If you type formals(nlm) or args(nlm) into the console one obtains the possible arguments that can be input to the function along with their defaults if they exist:

# function (f, p, ..., hessian = FALSE, typsize = rep(1,

# length(p)),fscale = 1, print.level = 0, ndigit = 12,

# gradtol = 1e-06, stepmax = max(1000 *

# sqrt(sum((p/typsize)^2)), 1000), steptol = 1e-06,

# iterlim = 100, check.analyticals = TRUE)As you can see the function to be minimized f (in this case ssq()) comes first, followed by the initial parameters, p, that must be the first argument required by whatever function is pointed to by f. Then comes the ellipsis (three dots) that generalizes the nlm() code for any function f, and then a collection of possible arguments all of which have default values. We altered typsize and iterlim (and steptol in Gz() sometimes); see the nlm() help for an explanation of each.

In R the … effectively means whatever other inputs are required, such as the arguments for whatever function f is pointed at (in this case ssq). If you look at the args or code, or help for ?ssq, you will see that it requires the function, funk, that will be used to calculate the expected values relative to the next required input for ssq(), which is the vector of observed values (as in \(O_i - E_i\)). Notice there is no explicit mention of the arguments used by funk, which are assumed to be passed using the …. In each of our calls to ssq() we have filled in those arguments explicitly with, for example, nlm(f=ssq,funk=Gz, observed=LatA$length, p=pars, ages=LatA$age, ). In that way all the requirements are filled and ssq() can do its work. If you were to accidentally omit, say, the ages=LatA$age, argument then, helpfully (in this instance), R will respond with something like Error in funk(par, independent) : argument “ages” is missing, with no default (I am sure you believe me but it does not hurt to try it for yourself!).

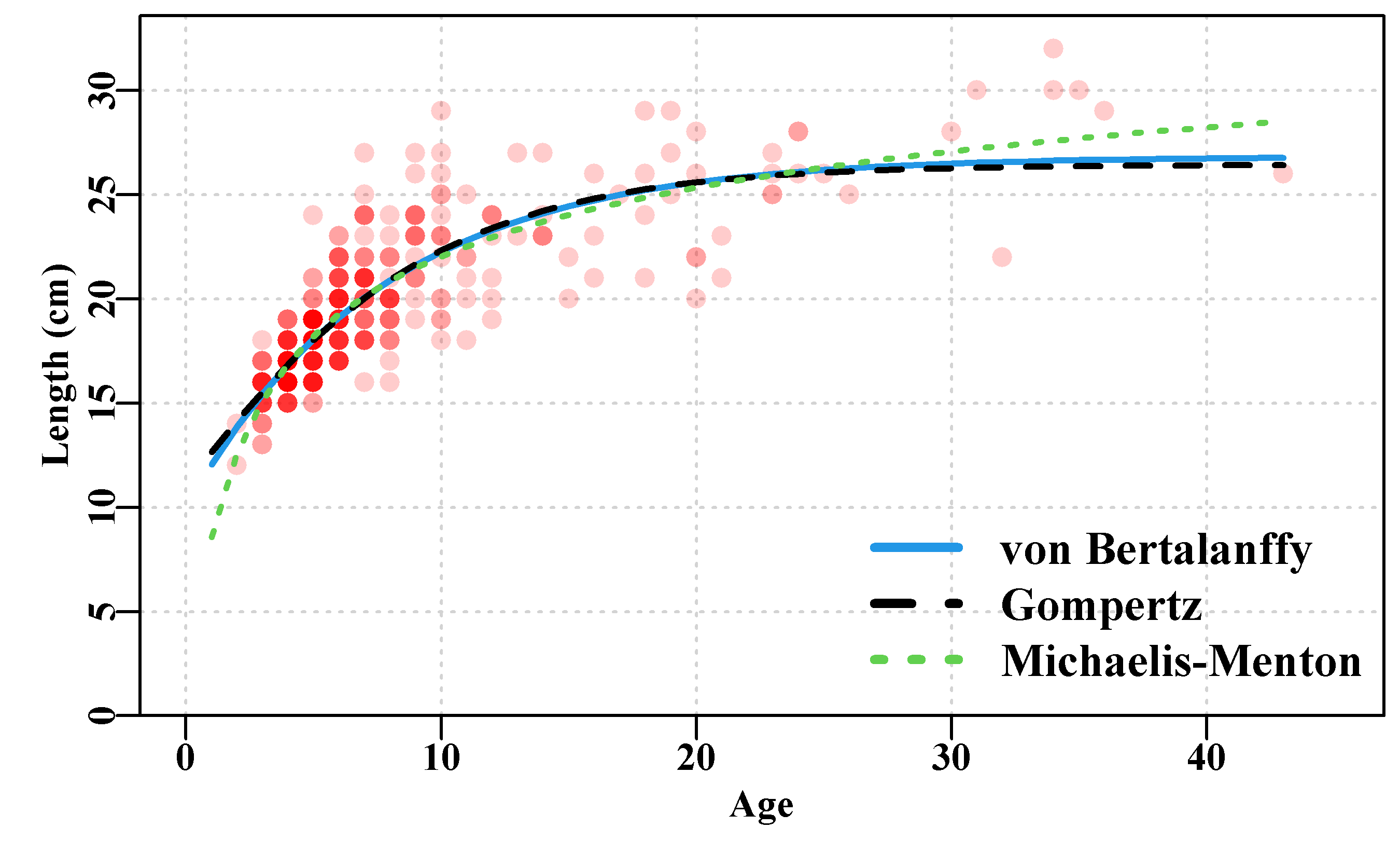

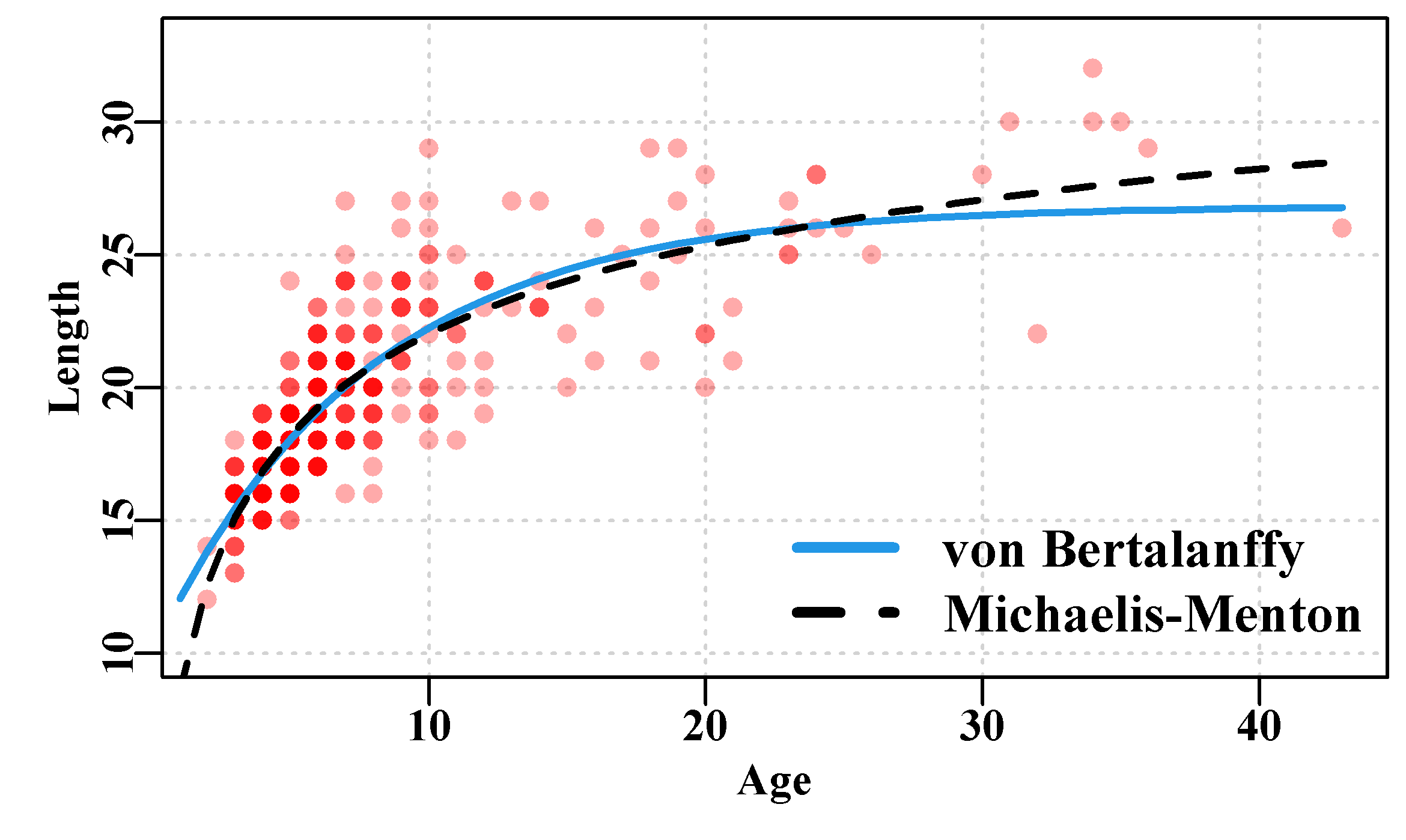

In terms of the growth curve model fitting, plotting the results provides a visual comparison that illustrates the difference between the three growth curves (Murrell, 2011).

#Female length-at-age + 3 growth fitted curves Figure 4.3

predvB <- vB(bestvB$estimate,ages) #get optimumpredicted lengths

predGz <- Gz(bestGz$estimate,ages) # using the outputs

predmm <- mm(bestMM2$estimate,ages) #from the nlm analysis above

ymax <- getmax(LatA$length) #try ?getmax or getmax [no brackets]

xmax <- getmax(LatA$age) #there is also a getmin, not used here

parset(font=7) # or use parsyn() to prompt for the par syntax

plot(LatA$age,LatA$length,type="p",pch=16, col=rgb(1,0,0,1/5),

cex=1.2,xlim=c(0,xmax),ylim=c(0,ymax),yaxs="i",xlab="Age",

ylab="Length (cm)",panel.first=grid())

lines(ages,predvB,lwd=2,col=4) # vB col=4=blue

lines(ages,predGz,lwd=2,col=1,lty=2) # Gompertz 1=black

lines(ages,predmm,lwd=2,col=3,lty=3) # MM 3=green

#notice the legend function and its syntax.

legend("bottomright",cex=1.2,c("von Bertalanffy","Gompertz",

"Michaelis-Menton"),col=c(4,1,3),lty=c(1,2,3),lwd=3,bty="n")

Figure 4.3: Female Length-at-Age data from 358 simulated female redfish with three optimally fitted growth curves drawn on top.

The rgb() function used in the plot implies that the intensity of colour represents the number of observations, with the most intense implying at least five observations. It is clear that with this data the vB() and Gz() curves are close to coincident over much of the extent of the observed data, while the mm() curve deviates from the other two but mainly at the extremes. The Michaelis-Menton curve is forced to pass through the origin, while the other two are not constrained in this way (even though the idea may appear to be closer to reality). One could include the length of post-larval fish to pull the Gompertz and von Bertalanffy curves downwards. But with living animals growth is complex. Many sharks and rays have live-birth and do start post-development life at sizes significantly above zero. Always remember that these curves are empirical descriptions of the data and have limited abilities to reflect reality.

Most of the available data is between ages 3 and 12 (try table(LatA$age)), and then only single occurrences above age 24. Over the ages 3 - 24 the Gompertz and von Bertalanffy curves follow essentially the same trajectory and the Michaelis-Menton curve differs only slightly (you could try the following code to see the actual differences cbind(ages, predvB, predGz, predmm). Outside of that age range bigger differences occur, although the lack of younger animals suggests the selectivity of the gear that caught the samples may under-represent fish less than 3 years old. In terms of the relative quality of fit (the sum-of-squared residuals) the final Michaelis-Menton curve has the smallest ssq(), followed by the von Bertalanffy, followed by the Gompertz. But each provides a plausible mean description of the growth between the range of 3 - 24 years, where the data is most dense. When data is as sparse as it is in the older age classes there is also the question of whether or not this sample is representative of the population for those ages. Questioning one’s data,the model used, and consequent interpretations, is an important aspect of producing useful models of natural processes.

4.4.5 Objective Model Selection

In the three growth models above the optimum model fit was defined as that which minimized the sum-of-squared residuals between the predicted and observed values. By that criterion the second Michaelis-Menton curve provided a ‘better’ fit than either von Bertalanffy or the Gompertz curves. But can we really say that the second Michaelis-Menton curve was ‘better’ fitting than the first? In terms of the gradients of the final solution things are clearly better with the second curve but strictly the criterion of fit was only the minimum SSQ and the difference was less than 0.01 units. Model selection is generally a trade-off between the number of parameters used and the quality of fit according to the criterion chosen. If we devise a model with more parameters this generally leads to greater flexibility and improved capacity to be closer to the observed data. In the extreme if we had as many parameters as we had observations we could have a perfect model fit, but, of course, would have learned nothing about the system we are modelling. With 358 parameters for the LatA data set that would clearly be a case of over-parameterization, but what if we had only increased the number of parameters to say 10? No doubt the curve would have been oddly shaped but would likely have a lower SSQ. Burnham and Anderson (2002) provide a detailed discussion of the trade-off between number of parameters and quality of model fit to data. In the 1970s there was a move to using Information Theory to develop a means of quantifying the trade-off between model parameters and quality of model fit. Akaike (1974) described his Akaike’s Information Criterion (AIC), which was based on maximum likelihood and information theoretic principles (more of which later) but fortunately, Burnham and Anderson (2002) provide an alternative when using the minimum sum-of-squared residuals, which is a variant of one included in Atkinson (1980):

\[\begin{equation} AIC=N \left(log\left(\hat\sigma^2 \right) \right)+2p \tag{4.8} \end{equation}\]

where \(N\) is the number of observations, \(p\) is the “number of independently adjusted parameters within the model” (Akaike, 1974, p716), and \(\hat\sigma^2\) is the maximum likelihood estimate of the variance, which just means the sum-of-squared residuals is divided by \(N\) rather than \(N-1\):

\[\begin{equation} \hat\sigma^2 = \frac{\Sigma\varepsilon^2}{N} = \frac{ssq}{N} \tag{4.9} \end{equation}\]

Even with the \(AIC\) it is difficult to determine, when using least-squares, whether differences can be argued to be statistically significantly different. There are ways related to the analysis of variance but such questions are able to be answered more robustly when using maximum likelihood, so we will address that in a later section.

If one wants to obtain biologically plausible or defensible interpretations when fitting a model, then model selection cannot be solely dependent upon quality of statistical fit. Instead, it should reflect the theoretical expectations (for example, should average growth in a population involve a smooth increase in size through time, etc.). Such considerations, other than statistical fit to data, do not appear to gain sufficient attention, but only become important when biologically implausible model outcomes arise or implausible model structures are proposed. It obviously helps to have an understanding of the biological expectations of the processes being modelled.

4.4.6 The Influence of Residual Error Choice on Model Fit

In the growth model example, we used Normal random deviates, but we can ask the question of whether we would have obtained the same answer had we used, for example, log-normal deviates? All we need to do in that case would be to log-transform the observed and predicted values before calculating the sum-of-squared residuals (see below with reference to log-normal residuals.

\[\begin{equation} ssq=\sum\limits_{i=1}^{n}{{{\left( log({O_i})- log({\hat{E_i}}) \right)}^{2}}} \tag{4.10} \end{equation}\]

Here we continue to use the backtran=FALSE option within outfit() because we are log-transforming the data not the parameters so no back-transformation is required.

# von Bertalanffy

pars <- c(27.25,0.15,-3.0)

bestvBN <- nlm(f=ssq,funk=vB,observed=LatA$length,p=pars,

ages=LatA$age,typsize=magnitude(pars),iterlim=1000)

outfit(bestvBN,backtran=FALSE,title="Normal errors"); cat("\n")

# modify ssq to account for log-normal errors in ssqL

ssqL <- function(funk,observed,...) {

predval <- funk(...)

return(sum((log(observed) - log(predval))^2,na.rm=TRUE))

} # end of ssqL

bestvBLN <- nlm(f=ssqL,funk=vB,observed=LatA$length,p=pars,

ages=LatA$age,typsize=magnitude(pars),iterlim=1000)

outfit(bestvBLN,backtran=FALSE,title="Log-Normal errors") # nlm solution: Normal errors

# minimum : 1361.421

# iterations : 22

# code : 1 gradient close to 0, probably solution

# par gradient

# 1 26.8353990 -3.649702e-07

# 2 0.1301587 -1.576574e-05

# 3 -3.5867005 3.198205e-07

#

# nlm solution: Log-Normal errors

# minimum : 3.153052

# iterations : 25

# code : 1 gradient close to 0, probably solution

# par gradient

# 1 26.4409587 8.906655e-08

# 2 0.1375784 7.537147e-06

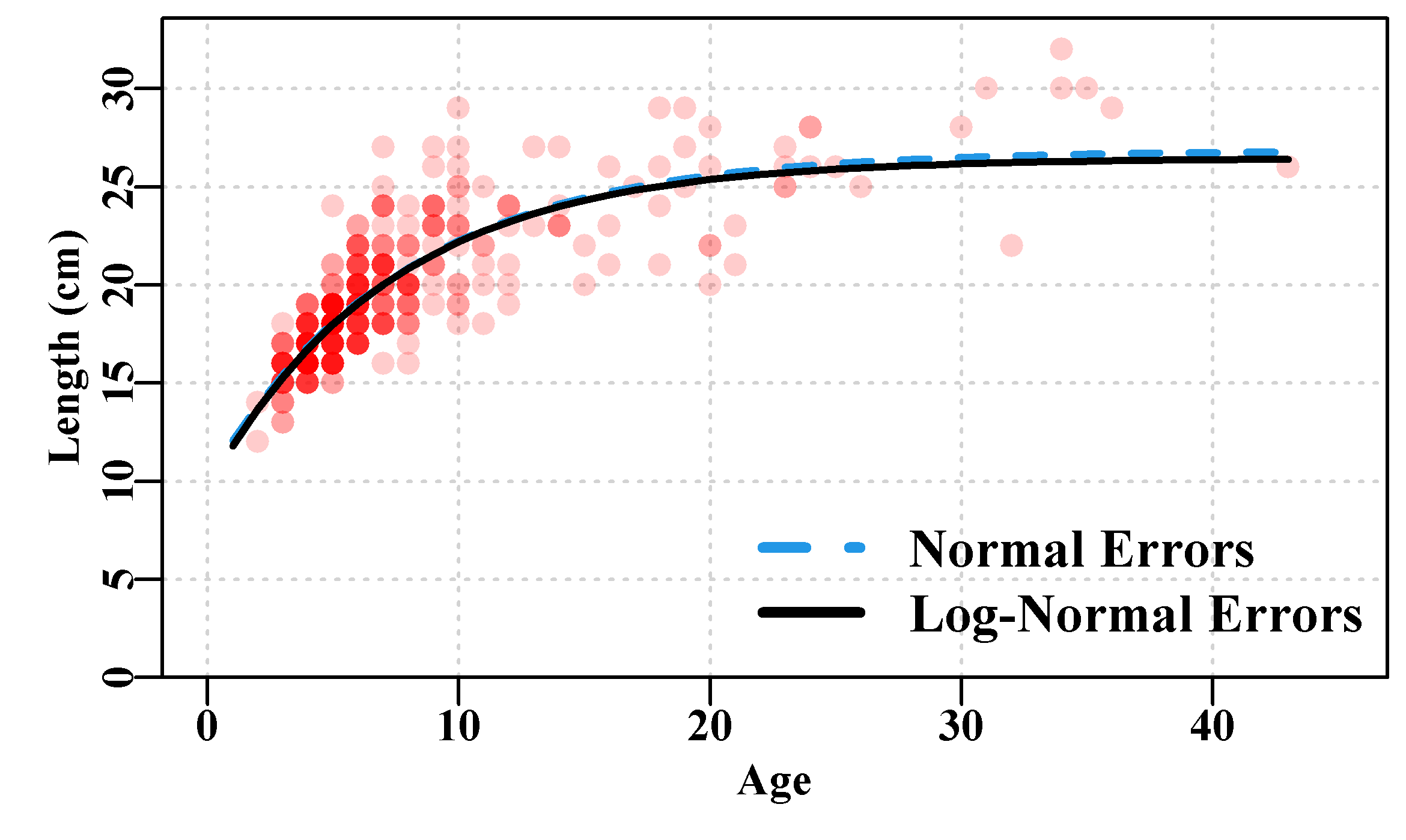

# 3 -3.2946087 -1.124171e-07In this case the curves produced by using normal and log-normal residual errors barely differ Figure(4.4), even though their parameters differ (use ylim=c(10,ymax) to make the differences clearer). More than differing visually, the different model fits are not even comparable. If we compare their respective sum-of-squared residuals one has 1361.0 and the other only 3.153. This is not surprising when we consider the effect of the log-transformations within the calculation of the sum-of-squared. But what this means is we cannot look at the tabulated outputs alone and decide which version fits the data better than the other. They are strictly incommensurate even though they are using exactly the same model. The use of the different residual error structure needs to be defended in ways other than considering the relative model fit. This example emphasizes that while the choice of model is obviously important, the choice of residual error structure is part of the model structure and equally important.

# Now plot the resultibng two curves and the data Fig 4.4

predvBN <- vB(bestvBN$estimate,ages)

predvBLN <- vB(bestvBLN$estimate,ages)

ymax <- getmax(LatA$length)

xmax <- getmax(LatA$age)

parset()

plot(LatA$age,LatA$length,type="p",pch=16, col=rgb(1,0,0,1/5),

cex=1.2,xlim=c(0,xmax),ylim=c(0,ymax),yaxs="i",xlab="Age",

ylab="Length (cm)",panel.first=grid())

lines(ages,predvBN,lwd=2,col=4,lty=2) # add Normal dashed

lines(ages,predvBLN,lwd=2,col=1) # add Log-Normal solid

legend("bottomright",c("Normal Errors","Log-Normal Errors"),

col=c(4,1),lty=c(2,1),lwd=3,bty="n",cex=1.2)

Figure 4.4: Female Length-at-Age data from 358 female redfish, Centroberyx affinis, with two von Bertalanffy growth curves fitted using Normal and Log-Normal residuals.

4.4.7 Remarks on Initial Model Fitting

The comparison of curves in the example above is itself interesting, however, what we have also done is illustrate the syntax of nlm() and how one might go about fitting models to data. The ability to pass functions as arguments into another function (as here we passed ssq as f into nlm(), and vB, Gz, and mm into ssq() as funk) is one of the strengths but also complexities of R. In addition, the use of the … to pass extra arguments without having to name them explicitly beforehand helps produce re-usable code. It simplifies the re-use of functions like ssq() where all we need do is change the input function, with potentially completely different input requirements, to get an entirely different answer from essentially the same code. The very best way of becoming familiar with these methods is to employ them with your own data sets. Plot your data and any model fits because if the model fit appears unusual then it quite likely is, and deserves a second and third look.

One can go a long way using sum-of-squares but the assumptions requiring normally distributed residuals around the expected values, and a constant variance are constraining when dealing with the diversity of the real world. In order to use probability density distributions other than Normal and to use non-constant variances, one should turn to using maximum likelihood.

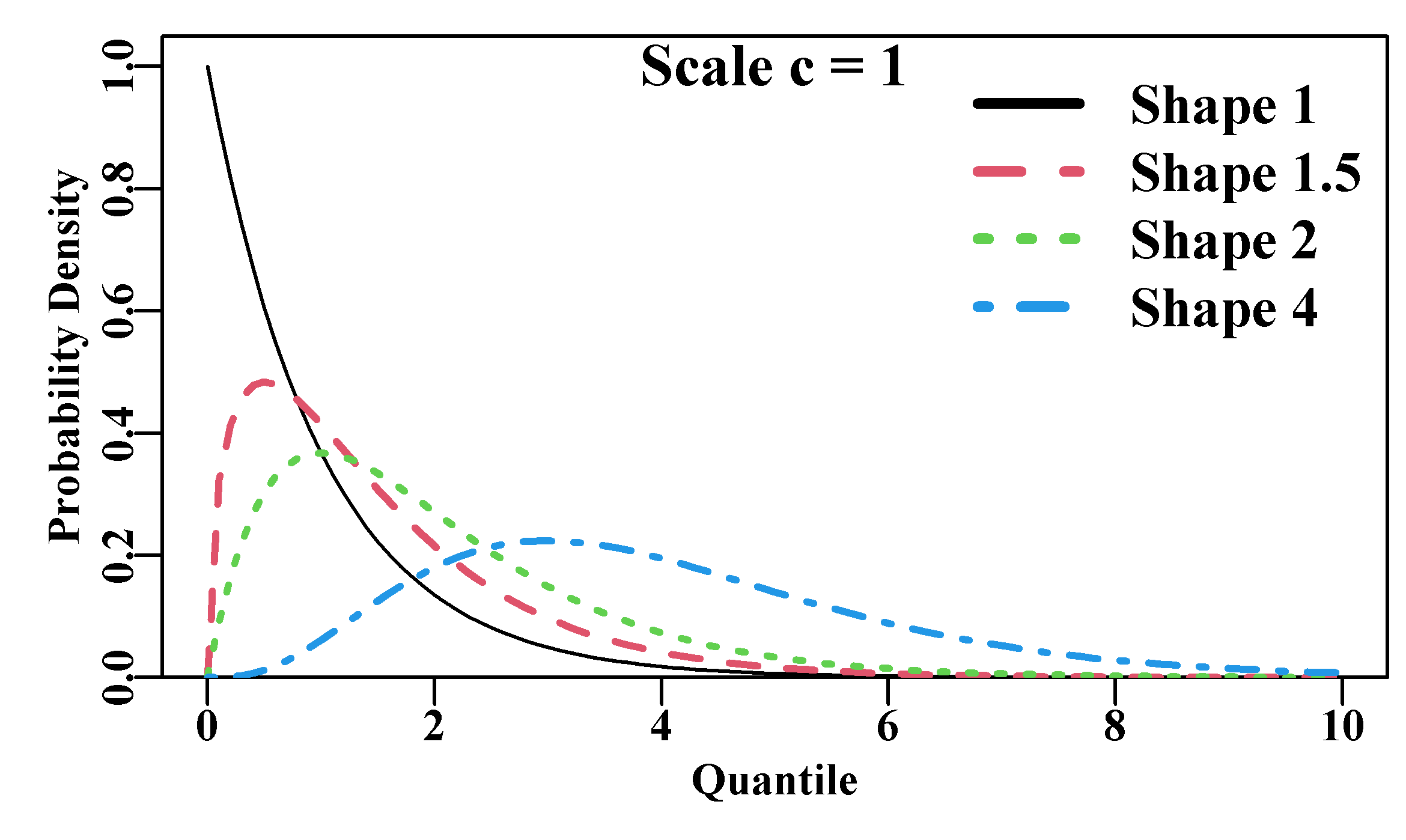

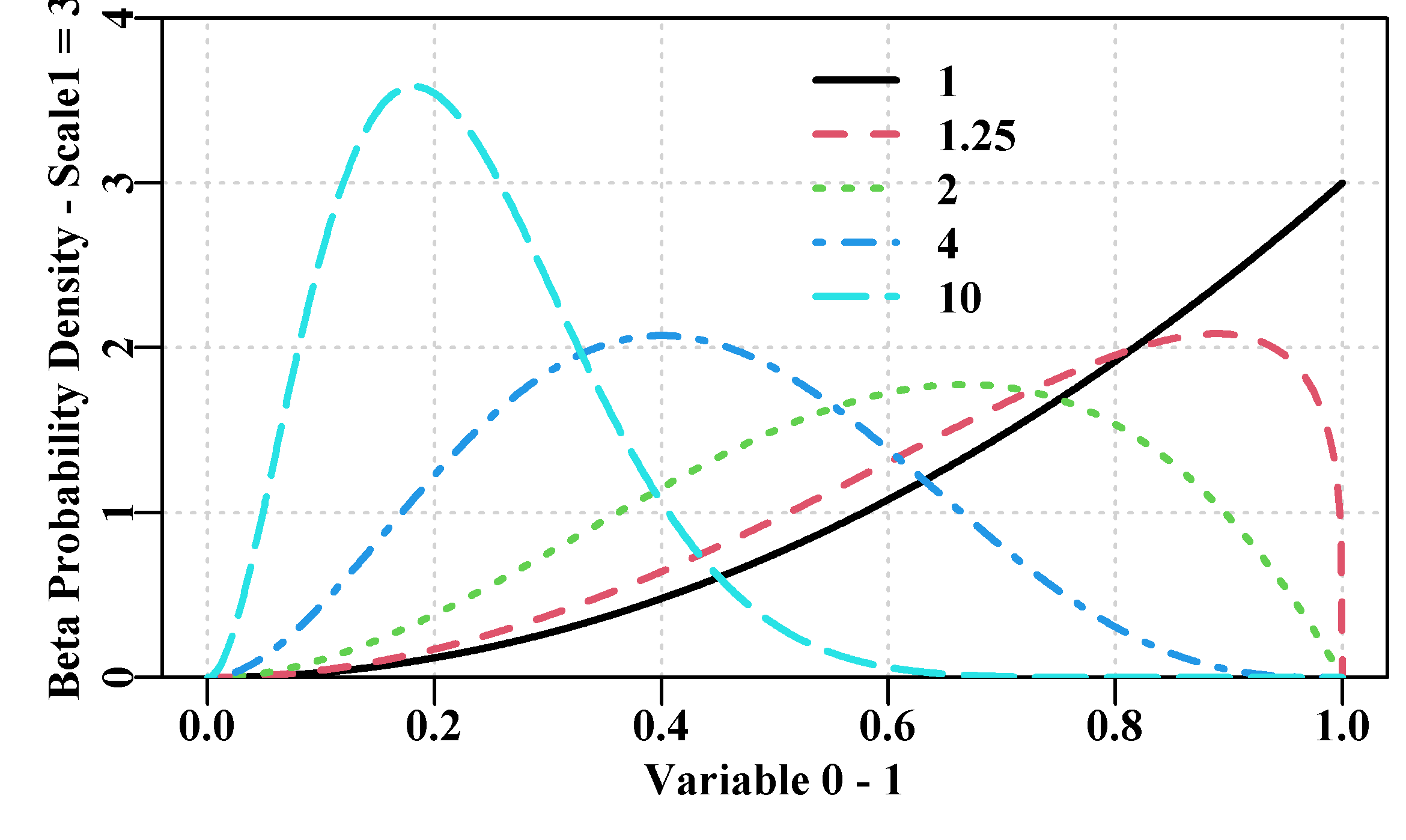

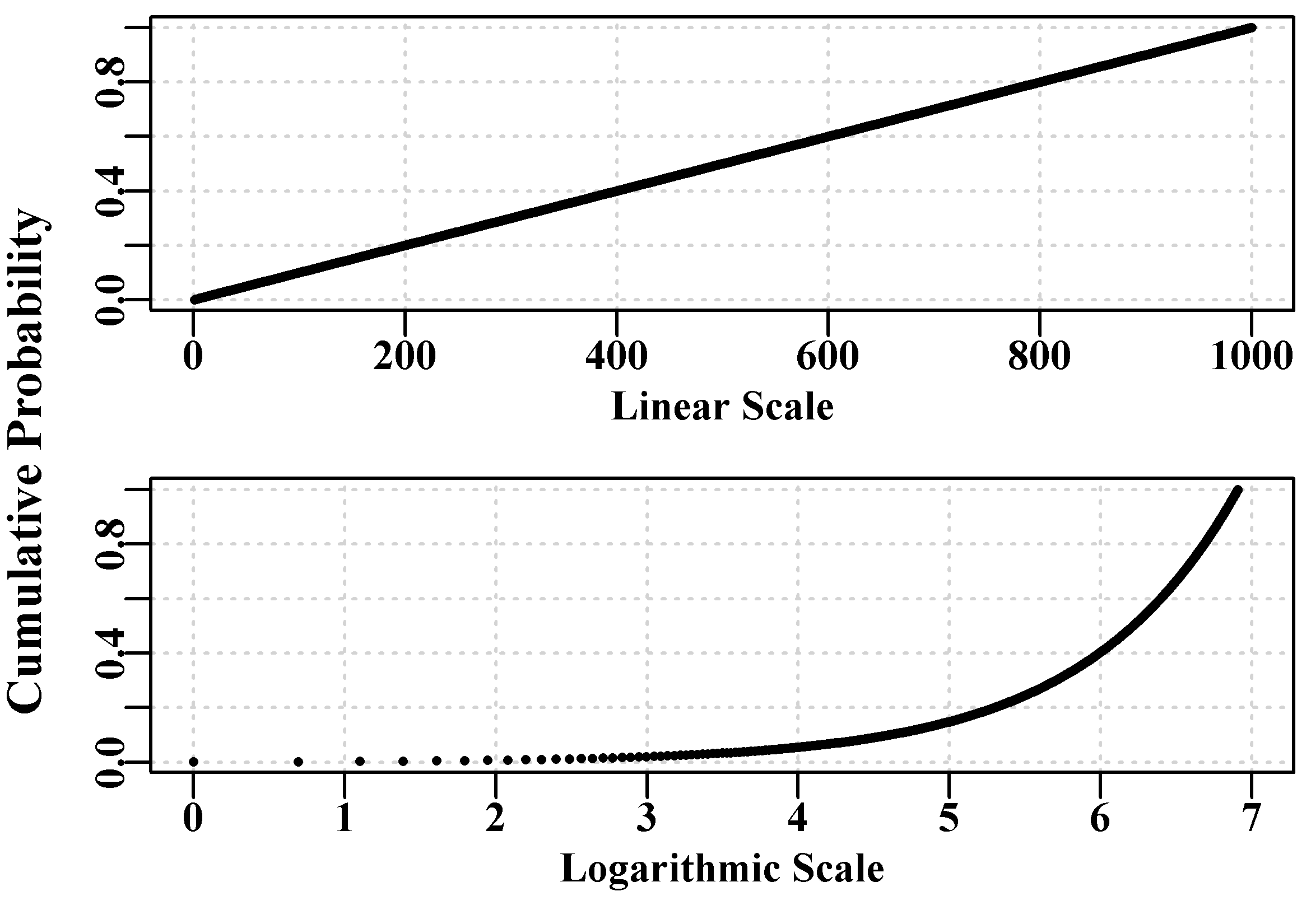

4.5 Maximum Likelihood

The use of likelihoods is relatively straightforward in R as there are built-in functions for very many Probability Density Functions (PDFs) as well as an array of packages that define other PDFs. To repeat, the aim with maximum likelihood methods is to use software to search for the model parameter set that maximizes the total likelihood of the observations. To use this criterion of optimal model fit requires the model to be defined so that it specifies probabilities or likelihoods for each of the observations (the available data) as a function of the parameter values and other variables within the model (Equ(4.2) and Equ(4.11)). Importantly, such a specification includes an estimate of the variability or spread of the selected PDF (the \(\sigma\) in the Normal distribution; which is only a by-product of the least-squares approach). A major advantage of using maximum likelihood is that the residual structure or the expected distribution of observations about the expected central tendency of the data need not be normally distributed. If the probability density function (PDF) can be defined, it can be used in a maximum likelihood framework; see Forbes et al, (2011) for the definitions of many useful probability density functions.

4.5.1 Introductory Examples

We will use the well-known Normal distribution to illustrate the methods but then extend the approach to include an array of alternative PDFs. For the purposes of model fitting to data the primary interest for each PDF is in the definition of the probability density or likelihood for individual observations. For a Normal distribution with a mean expected value of \({\bar{x}}\) or \({\mu}\), the probability density or likelihood of a given single value \(x_i\) is defined as:

\[\begin{equation} L\left( {x_i}|\mu_{i} ,\sigma \right)=\frac{1}{\sigma \sqrt{2\pi }}{{e}^{\left( \frac{-{{\left( {x_i}-\mu_{i} \right)}^{2}}}{2\sigma^2 } \right)}} \tag{4.11} \end{equation}\]

where \({\sigma}\) is the standard deviation associated with \({\mu_{i}}\). This identifies an immediate difference between least-squared methods and maximum likelihood methods, in the latter, one needs a full definition of the PDF, which in the case of the Normal distribution includes an explicit estimate of the standard deviation of the residuals around the mean estimates \(\mu\). Such an estimate is not required with least-squares, although is easily derived from the SSQ value.

As an example, we can generate a sample of observations from a Normal distribution (see ?rnorm) and then calculate that sample’s mean and stdev and compare how likely the given sample values are for these parameter estimates relative to how likely they are for the original mean and stdev used in the rnorm function, Table(4.1):

# Illustrate Normal random likelihoods. see Table 4.1

set.seed(12345) # make the use of random numbers repeatable

x <- rnorm(10,mean=5.0,sd=1.0) # pseudo-randomly generate 10

avx <- mean(x) # normally distributed values

sdx <- sd(x) # estimate the mean and stdev of the sample

L1 <- dnorm(x,mean=5.0,sd=1.0) # obtain likelihoods, L1, L2 for

L2 <- dnorm(x,mean=avx,sd=sdx) # each data point for both sets

result <- cbind(x,L1,L2,"L2gtL1"=(L2>L1)) # which is larger?

result <- rbind(result,c(NA,prod(L1),prod(L2),1)) # result+totals

rownames(result) <- c(1:10,"product")

colnames(result) <- c("x","original","estimated","est > orig") | x | original | estimated | est > orig | |

|---|---|---|---|---|

| 1 | 5.5855 | 0.33609530 | 0.33201297 | 0 |

| 2 | 5.7095 | 0.31017782 | 0.28688183 | 0 |

| 3 | 4.8907 | 0.39656626 | 0.49010171 | 1 |

| 4 | 4.5465 | 0.35995784 | 0.45369729 | 1 |

| 5 | 5.6059 | 0.33204382 | 0.32465621 | 0 |

| 6 | 3.1820 | 0.07642691 | 0.05743702 | 0 |

| 7 | 5.6301 | 0.32711267 | 0.31586172 | 0 |

| 8 | 4.7238 | 0.38401358 | 0.48276941 | 1 |

| 9 | 4.7158 | 0.38315644 | 0.48191389 | 1 |

| 10 | 4.0807 | 0.26144927 | 0.30735328 | 1 |

| product | 0.00000475 | 0.00000892 | 1 |

The bottom line of Table(4.1) contains the product (obtained using the R function prod()) of each of the columns of likelihoods. Not surprisingly the maximum likelihood is obtained when we use the sample estimates of the mean and stdev (estimated, L2) rather than the original values of mean=5 and sd=1.0 (original, L1); that is 8.9201095^{-6} > 4.7521457^{-6}. I can be sure of these values in this example because the set.seed() R function was used at the start of the code to begin the pseudo-random number generators at a specific location. If you commonly use set.seed() do not repeatedly use the same old sequences, such as 12345, as you risk undermining the idea of of the pseudo-random numbers being a good approximation to a random number sequence, perhaps use getseed() to provide a suitable seed number instead.

So rnorm() provides pseudo-random numbers from the distribution defined by the mean and stdev, dnorm() provides the probability density or likelihood of an observation given its mean and stdev (the equivalent of Equ(4.11)). The cumulative probability density function (cdf) is provided by the function pnorm(), and the quantiles are identified by qnorm(). The mean value will naturally have the largest likelihood. Note also that the normal curve is symmetrical around the mean.

# some examples of pnorm, dnorm, and qnorm, all mean = 0

cat("x = 0.0 Likelihood =",dnorm(0.0,mean=0,sd=1),"\n")

cat("x = 1.95996395 Likelihood =",dnorm(1.95996395,mean=0,sd=1),"\n")

cat("x =-1.95996395 Likelihood =",dnorm(-1.95996395,mean=0,sd=1),"\n")

# 0.5 = half cumulative distribution

cat("x = 0.0 cdf = ",pnorm(0,mean=0,sd=1),"\n")

cat("x = 0.6744899 cdf = ",pnorm(0.6744899,mean=0,sd=1),"\n")

cat("x = 0.75 Quantile =",qnorm(0.75),"\n") # reverse pnorm

cat("x = 1.95996395 cdf = ",pnorm(1.95996395,mean=0,sd=1),"\n")

cat("x =-1.95996395 cdf = ",pnorm(-1.95996395,mean=0,sd=1),"\n")

cat("x = 0.975 Quantile =",qnorm(0.975),"\n") # expect ~1.96

# try x <- seq(-5,5,0.2); round(dnorm(x,mean=0.0,sd=1.0),5) # x = 0.0 Likelihood = 0.3989423

# x = 1.95996395 Likelihood = 0.05844507

# x =-1.95996395 Likelihood = 0.05844507

# x = 0.0 cdf = 0.5

# x = 0.6744899 cdf = 0.75

# x = 0.75 Quantile = 0.6744898

# x = 1.95996395 cdf = 0.975

# x =-1.95996395 cdf = 0.025

# x = 0.975 Quantile = 1.959964As we can see, individual likelihoods can be relatively large numbers, however, when multiplied together they can quickly lead to relatively small numbers. Errors can arise when the number of observations increases. Even with only ten numbers when we multiply all the individual likelihoods together (using prod()) the outcome quickly becomes very small indeed. With another similar ten numbers to the ten used in Table(4.1) the overall likelihood could easily be down to 1e-11 or 1e-12. As the number of observations increases the chances of a rounding error (even on a 64-bit computer) begin to increase. Rather than multiply many small numbers to get an extremely small number the standard solution to multiplying these small numbers together is to natural-log-transform the likelihoods and then add them together. Maximizing the sum of the log-transformed likelihoods obtains the optimum parameters exactly as does maximizing the product of the individual likelihoods. In addition, many optimizers, in software, appear to have been designed to be most efficient at minimizing a function. The simple solution is instead of maximizing the product of the individual likelihoods we minimize the sum of the negative log-likelihoods (-veLL or negLL()).

4.6 Likelihoods from the Normal Distribution

Likelihoods can appear to be rather strange beasts. When from continuous PDFs they are not strictly probabilities although they share many of their properties (Edwards, 1972). Strictly they relate to the probability density at a particular point under a probability density function. The area under the full curve must, by the definition of probabilities, sum to 1.0, but the area under any single point of a continuous PDF becomes infinitesimally small. Normal likelihoods are defined using Equ(4.11)), whereas the cumulative density function is:

\[\begin{equation} {cdf}=1=\int\limits_{x=-\infty }^{\infty }{\frac{1}{\sigma \sqrt{2\pi }}}{{e}^{\left( \frac{-{{\left( x-\mu \right)}^{2}}}{2{{\sigma }^{2}}} \right)}} \tag{4.12} \end{equation}\]

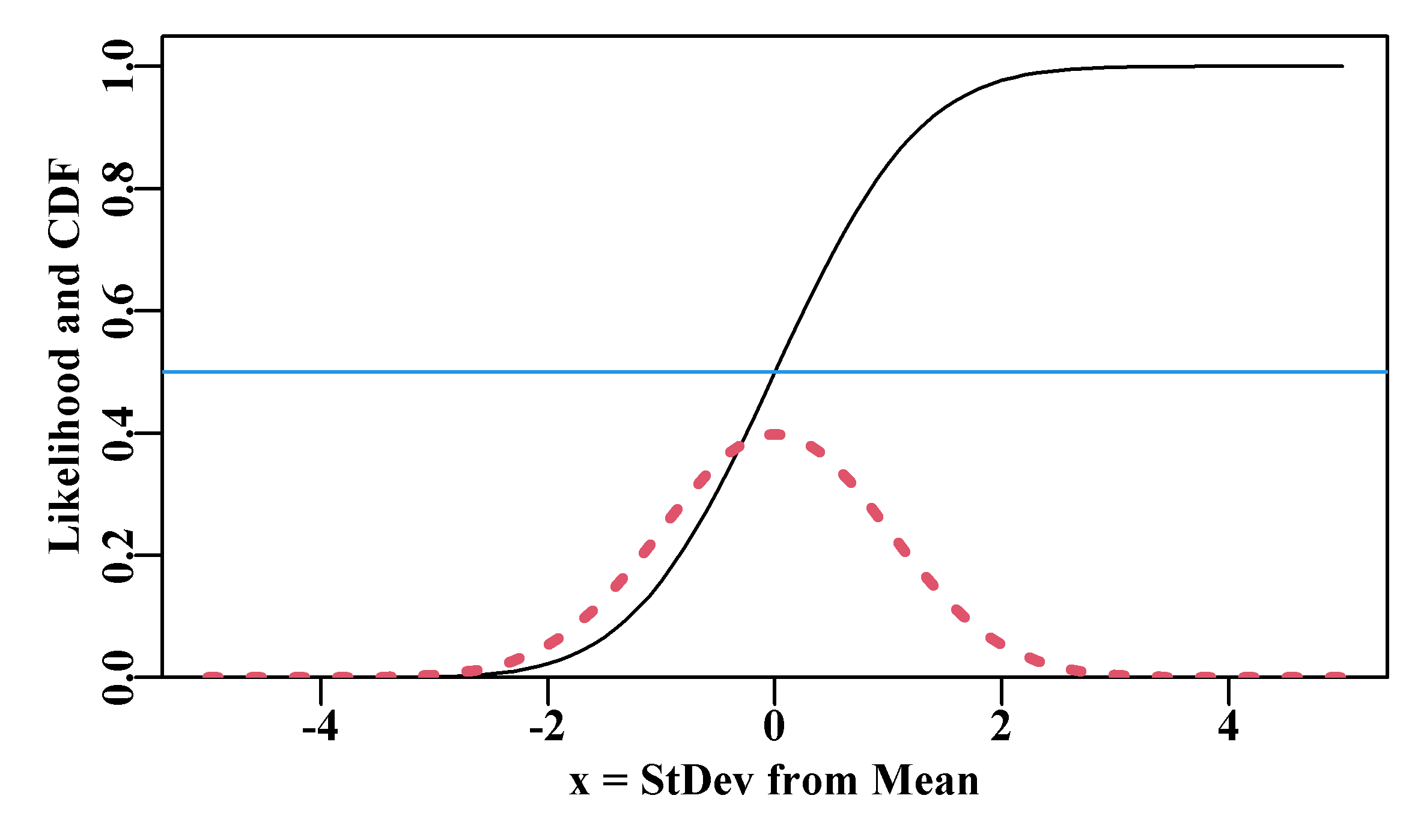

We can use dnorm() and pnorm() to calculate both the likelihoods and the cdf (Figure(4.5)).

# Density plot and cumulative distribution for Normal Fig 4.5

x <- seq(-5,5,0.1) # a sequence of values around a mean of 0.0

NL <- dnorm(x,mean=0,sd=1.0) # normal likelihoods for each X

CD <- pnorm(x,mean=0,sd=1.0) # cumulative density vs X

plot1(x,CD,xlab="x = StDev from Mean",ylab="Likelihood and CDF")

lines(x,NL,lwd=3,col=2,lty=3) # dashed line as these are points

abline(h=0.5,col=4,lwd=1)

Figure 4.5: A dotted red curve depicting the expected normal likelihoods for a mean = 0 and sd = 1.0, along with the cumulative density of the same normal likelihoods as a black line. The blue line identifies a cumulative probability of 0.5.

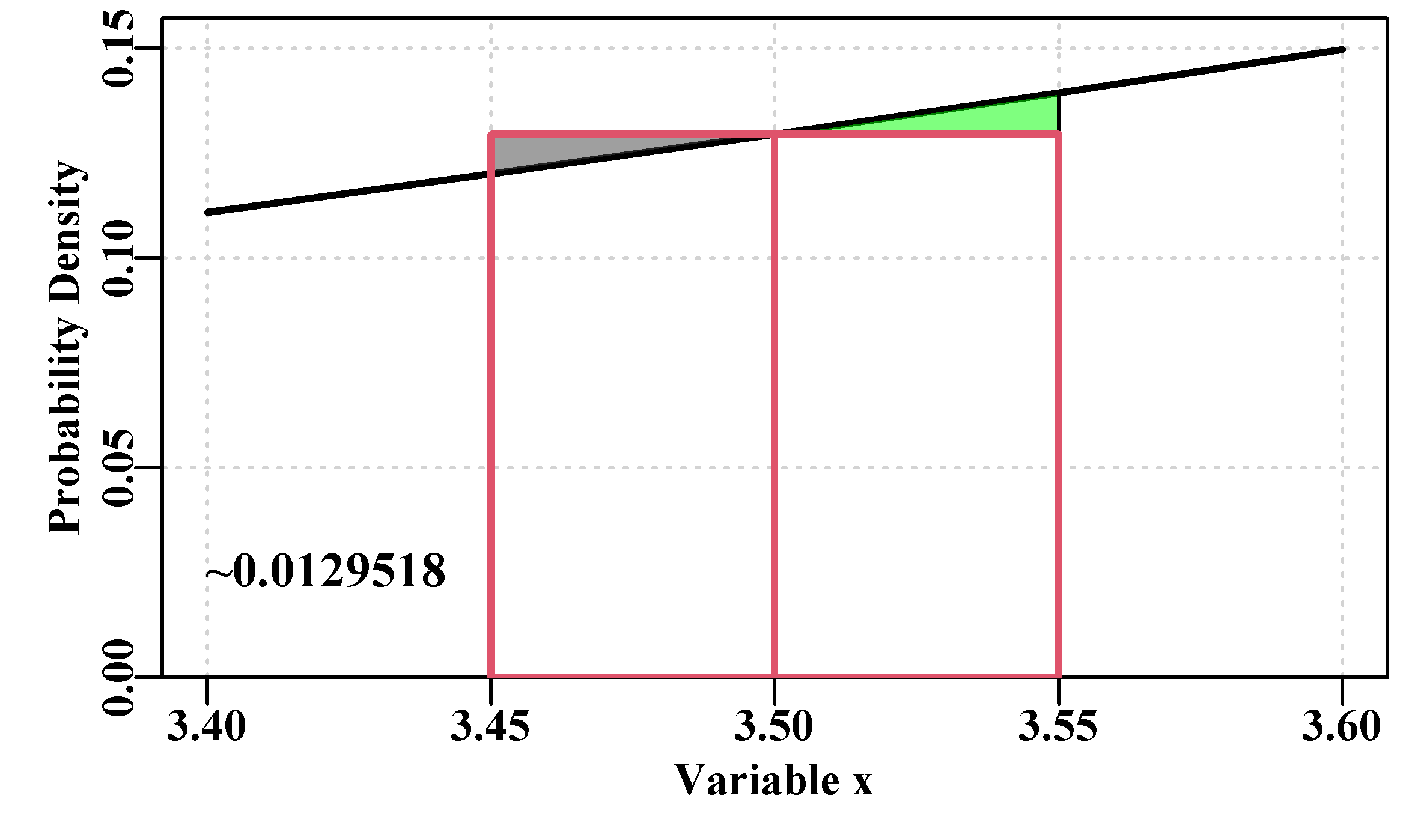

That all sounds fine, but what does it mean to identify a specific value under such a curve for a specific value of the \(x\) variable? In Figure(4.5) we used a dotted line to suggest that the likelihoods in the plot are local estimates and do not make up a continuous line. Each represents the likelihood at exactly the given \(x\) value. We have seen above that the probability density at a value of 0.0 for a distribution with mean = 0.0 and stdev = 1.0 would be 0.3989423. Let us briefly examine this potential confusion between likelihood and probability. If we consider a small portion of the probability density function with a mean = 5.0, and st.dev = 1.0 between the values of x = 3.4 to 3.6 we might see something like Figure(4.6):

#function facilitates exploring different polygons Fig 4.6

plotpoly <- function(mid,delta,av=5.0,stdev=1.0) {

neg <- mid-delta; pos <- mid+delta

pdval <- dnorm(c(mid,neg,pos),mean=av,sd=stdev)

polygon(c(neg,neg,mid,neg),c(pdval[2],pdval[1],pdval[1],

pdval[2]),col=rgb(0.25,0.25,0.25,0.5))

polygon(c(pos,pos,mid,pos),c(pdval[1],pdval[3],pdval[1],

pdval[1]),col=rgb(0,1,0,0.5))

polygon(c(mid,neg,neg,mid,mid),

c(0,0,pdval[1],pdval[1],0),lwd=2,lty=1,border=2)

polygon(c(mid,pos,pos,mid,mid),

c(0,0,pdval[1],pdval[1],0),lwd=2,lty=1,border=2)

text(3.395,0.025,paste0("~",round((2*(delta*pdval[1])),7)),

cex=1.1,pos=4)

return(2*(delta*pdval[1])) # approx probability, see below

} # end of plotpoly, a temporary function to enable flexibility

#This code can be re-run with different values for delta

x <- seq(3.4,3.6,0.05) # where under the normal curve to examine

pd <- dnorm(x,mean=5.0,sd=1.0) #prob density for each X value

mid <- mean(x)

delta <- 0.05 # how wide either side of the sample mean to go?

parset() # a pre-defined MQMF base graphics set-up for par

ymax <- getmax(pd) # find maximum y value for the plot

plot(x,pd,type="l",xlab="Variable x",ylab="Probability Density",

ylim=c(0,ymax),yaxs="i",lwd=2,panel.first=grid())

approxprob <- plotpoly(mid,delta) #use function defined above

Figure 4.6: Probability densities for a normally distributed variate X, with a mean of 5.0 and a standard deviation of 1.0. The PDF value at x = 3.5 is 0.129518, so the area of the boxes outlined in red is 0.0129518, which approximates the total probability of a value between 3.45 - 3.55, which would really be the area under the curve.

The area under the complete PDF sums to 1.0, so the probability of obtaining a value between, say, 3.45 and 3.55, in Figure(4.6), is the sum of the areas of the oblongs minus the left triangle plus the right triangle. The triangles are almost symmetrical and so approximately cancel each other out, so an approximate solution is simply 2.0 times the area of one of the oblongs. With a delta (width of oblong on x-axis) of 0.05 that probability = 0.0129518. If you change the delta to become 0.01 then the approximate probability = 0.0025904, and as the delta value decreases so does the total probability although the probability density at 3.5 remains the same at 0.1295176. Clearly likelihoods are not identical to probabilities in continuous PDFs (see Edwards, 1972). The best estimate of the probability of the area under the curve is obtained with pnorm(3.55,5,1) - pnorm(3.45,5,1), which = 0.0129585.

4.6.1 Equivalence with Sum-of-Squares

When using normal likelihoods to fit a model to data what we actually do is set things up so as to minimize the sum of the negative log-likelihoods for each available observation. Fortunately, we can use dnorm() to estimate those likelihoods. In fact, if we fit a model using maximum likelihood methods with Normally distributed residuals, or log-transformed Log-Normal distributed data, then the parameter estimates obtained are the same as those obtained using the least-squared approach (see the derivation and form of Equ(4.19), below). Fitting a model would require generating a set of predicted values \({\hat{x}}\) (x-hat) as a function of some other independent variable(s) \(\theta(x)\), where \({\theta}\) is the list of parameters used in the functional relationship. The log-likelihood for \(n\) observations would be defined as:

\[\begin{equation} LL(x|\theta)=\sum\limits_{i=1}^{n}{log\left( \frac{1}{\hat{\sigma }\sqrt{2\pi }}{{e}^{\left( \frac{-{{\left( {x_i}-{{\hat{x}}_{i}} \right)}^{2}}}{2{{{\hat{\sigma }}}^{2}}} \right)}} \right)} \tag{4.13} \end{equation}\]

\(LL(x|\theta)\) is read as the log-likelihood of \(x\), the observations, given \(\theta\) the parameters (\(\mu\) and \(\hat{\sigma}\)); the symbol \(|\) is read as “given”. This superficially complex equation can be greatly simplified. First, we could move the constant before the exponential term outside of the summation term by multiplying it by \(n\), and the natural log of the remaining exponential term back-transforms the exponential:

\[\begin{equation} LL(x|\theta)=n log\left( \frac{1}{\hat{\sigma }\sqrt{2\pi }} \right)+\frac{1}{2{\hat{\sigma }^{2}}}\sum\limits_{i=1}^{n}{\left( -{{\left( x_i-\hat{x}_i \right)}^{2}} \right)} \tag{4.14} \end{equation}\]

The value of \({\hat{\sigma }^{2}}\) is the maximum likelihood estimate of the variance of the data (note the division by \(n\) rather than \(n-1\):

\[\begin{equation} {{\hat{\sigma }}^{2}}=\frac{\sum\limits_{i=1}^{n}{{{\left( x_i-\hat{x}_i \right)}^{2}}}}{n} \tag{4.15} \end{equation}\]

If we replace the use of \({\hat{\sigma }^{2}}\) in Equ(4.14)) with Equ(4.15), the \((x_i-{\hat{x}_i})^2\) cancels out leaving \(-n/2\):

\[\begin{equation} LL(x|\theta)=n{log}\left( \left( {\hat{\sigma }\sqrt{2\pi }} \right)^{-1} \right) - \frac{n}{2} \tag{4.16} \end{equation}\]

simplifying the square root term means bringing the \(-1\) out of the log term, which changes the \(n\) to \(-n\) and we can change the square root to an exponent of 1/2 and add \(\log{(\hat \sigma)}\) to the log of the \(\pi\) term:

\[\begin{equation} LL(x|\theta)=-n\left( {log}\left( {{\left( 2\pi \right)}^{\frac{1}{2}}} \right)+{log}\left( {\hat{\sigma }} \right) \right)-\frac{n}{2} \tag{4.17} \end{equation}\]

then move the power of \(1/2\) to outside the first \(log\) term:

\[\begin{equation} LL(x|\theta)=-\frac{n}{2}\left( {log}\left( 2\pi \right)+2{log}\left( {\hat{\sigma }} \right) \right)-\frac{n}{2} \tag{4.18} \end{equation}\]

then simplify the \(n/2\) and multiply throughout by \(-1\) to convert to a negative log-likelihood to give the final simplification of the negative log-likelihood for normally distributed values:

\[\begin{equation} -LL(x|\theta)=\frac{n}{2}\left( {log}\left( 2\pi \right) + 2{log} \left( {\hat{\sigma }} \right) + 1 \right) \tag{4.19} \end{equation}\]

The only non-constant part of this is the value of \(\hat \sigma\), which is the square root of the sum of squared residuals divided by \(n\), so now it should be clear why the parameters obtained when using maximum likelihood, if using normal random errors, are the same as derived from a least squares approach.

4.6.2 Fitting a Model to Data using Normal Likelihoods

We can repeat the example using the simulated female Redfish data in the data-set LatA, Figure(4.7), which we used to illustrate the use of sum-of-squared residuals. Ideally, we should obtain the same answer but with an estimate of \(\sigma\) as well. The MQMF function plot1() is just a quick way of plotting a single graph (either type=“l” or type=“p”; see ?plot1), without too much white space. Edit plot1() if you like more white space than I do!

#plot of length-at-age data Fig 4.7

data(LatA) # load the redfish data set into memory and plot it

ages <- LatA$age; lengths <- LatA$length

plot1(ages,lengths,xlab="Age",ylab="Length",type="p",cex=0.8,

pch=16,col=rgb(1,0,0,1/5))

Figure 4.7: The length-at-age data contained within the LatA data set for female Redfish Centroberyx affinis. Full colour means >= 5 points.

Now we can use the MQMF function negNLL() (as in negative normal log-likelihoods) to determine the sum of the negative log-likelihoods using normal random errors (negLL() does the same but for log-normally distributed data). If you look at the code for negNLL() you will see that, just like ssq(), it is passed a function as an argument, which is then used to calculate the predicted mean values for each input age (in this case lengths-at-age using the MQMF function vB()), and then uses dnorm() to calculate the sum of the -veLL using the predicted values as the mean values and the observations of the length-at-age values in the data. The ages data is passed through the ellipsis (…) without being explicitly declared as an argument in negNLL(). The function is very similar in structure to ssq() in that it has identical input requirements, however, pars is passed explicitly rather than inside …, because the last value of pars, must be the stdev of the residuals, which is used inside negNLL() rather than just inside funk. The use of likelihoods means there is a need to include an estimate of the standard deviation at the end of the vector of parameters. negNLL() thus operates in a manner very similar to ssq() in that it is a wrapper for calling the function that generates the predicted values and then uses dnorm() to return a single number every time it is called. Thus, nlm() minimizes negNLL(), which in turn calls vB().

# Fit the vB growth curve using maximum likelihood

pars <- c(Linf=27.0,K=0.15,t0=-3.0,sigma=2.5) # starting values

# note, estimate for sigma is required for maximum likelihood

ansvB <- nlm(f=negNLL,p=pars,funk=vB,observed=lengths,ages=ages,

typsize=magnitude(pars))

outfit(ansvB,backtran=FALSE,title="vB by minimum -veLL") # nlm solution: vB by minimum -veLL

# minimum : 747.0795

# iterations : 26

# code : 1 gradient close to 0, probably solution

# par gradient

# 1 26.8354474 4.490629e-07

# 2 0.1301554 1.659593e-05

# 3 -3.5868868 3.169513e-07

# 4 1.9500896 8.278354e-06If you look back at the ssq() example for the von Bertalanffy curve, you will see we obtained an SSQ value of 1361.421 from a sample of 358 fish (try nrow(LatA)). Thus the estimate of \(\sigma\) from the ssq() approach was \(\sqrt{ \left (1361.421 / 358 \right)} = 1.95009\), which, as expected, is essentially identical to the maximum likelihood estimate.

Just as before all we need do is substitute a different growth curve function into the negNLL() to get a result. We just have to remember to include a fourth parameter (\(\sigma\)) in the p vector. Once again, using Normal random errors leads to essentially the same numerical solution as that obtained using the ssq() approach.

#Now fit the Michaelis-Menton curve

pars <- c(a=23.0,b=1.0,c=1.0,sigma=3.0) # Michaelis-Menton

ansMM <- nlm(f=negNLL,p=pars,funk=mm,observed=lengths,ages=ages,

typsize=magnitude(pars))

outfit(ansMM,backtran=FALSE,title="MM by minimum -veLL") # nlm solution: MM by minimum -veLL

# minimum : 743.6998

# iterations : 34

# code : 1 gradient close to 0, probably solution

# par gradient

# 1 20.7464280 -6.195465e-06

# 2 1.4183165 1.601881e-05

# 3 0.9029899 2.461405e-04

# 4 1.9317669 -3.359816e-06Once again the gradients of the solution are small, which increases confidence that the solution is not just a local minimum, so we should plot out the solution to see its relative fit to the data.

By plotting the fitted curves on top of the data points the data do not obscure the lines. The actual predictions that can now be produced from this analysis can also be tabulated along with the residual values. By including the individual squared residuals, it becomes more clear which points (see record 3) could have the most influence.

#plot optimum solutions for vB and mm. Fig 4.8

Age <- 1:max(ages) # used in comparisons

predvB <- vB(ansvB$estimate,Age) #optimum solution

predMM <- mm(ansMM$estimate,Age) #optimum solution

parset() # plot the deata points first

plot(ages,lengths,xlab="Age",ylab="Length",type="p",pch=16,

ylim=c(10,33),panel.first=grid(),col=rgb(1,0,0,1/3))

lines(Age,predvB,lwd=2,col=4) # then add the growth curves

lines(Age,predMM,lwd=2,col=1,lty=2)

legend("bottomright",c("von Bertalanffy","Michaelis-Menton"),

col=c(4,1),lwd=3,bty="n",cex=1.2,lty=c(1,2))

Figure 4.8: Optimum von Bertalanffy and Michaelis-Menton growth curves fitted to the female LatA Redfish data. Note the two curves are effectively coincident where the observations are most concentrated. Note the y-axis starts at 10.

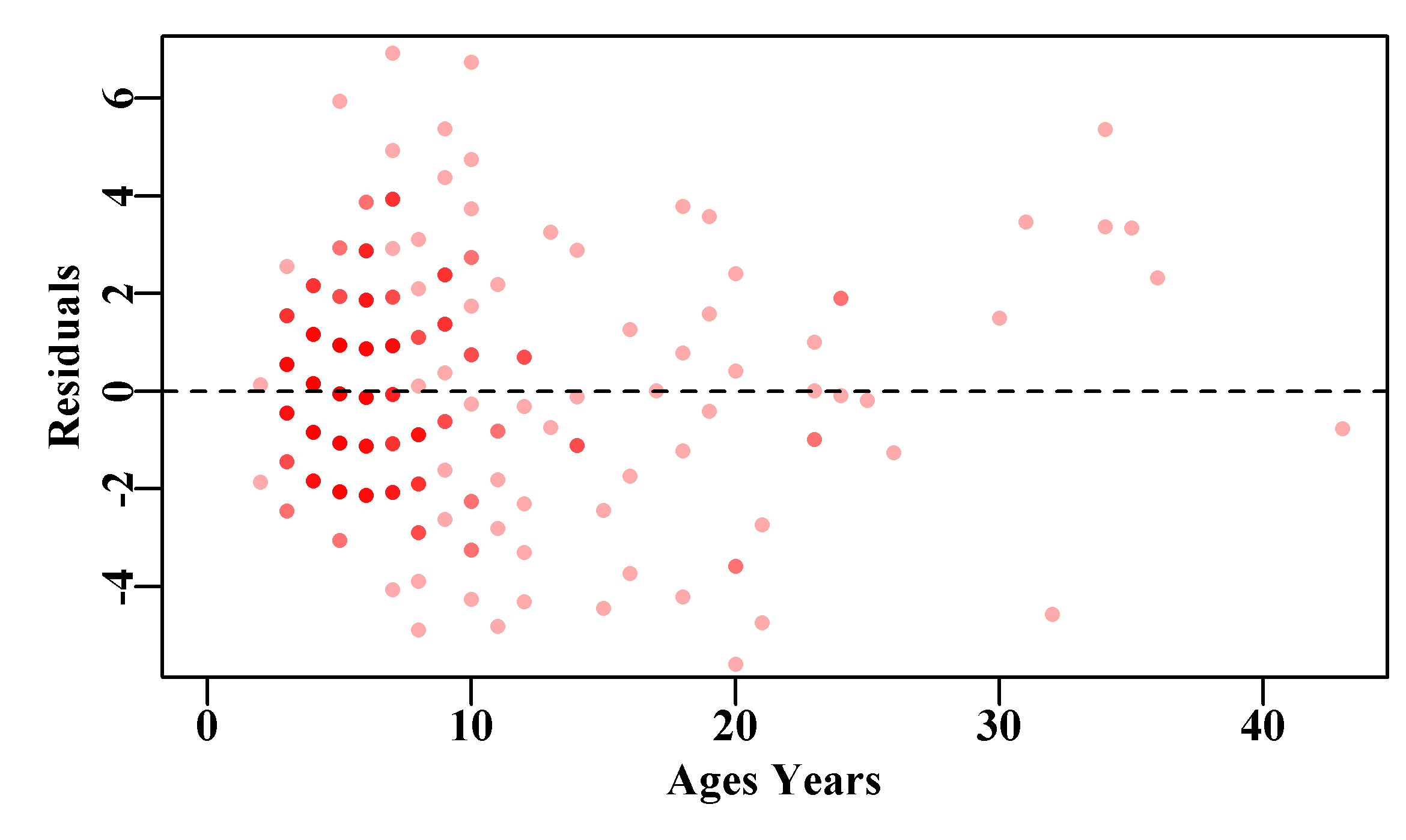

Generally, one would generate residual plots so as to check for patterns in the residuals, Figure(4.9).

# residual plot for vB curve Fig 4.9

predvB <- vB(ansvB$estimate,ages) # predicted values for age data

resids <- lengths - predvB # calculate vB residuals

plot1(ages,resids,type="p",col=rgb(1,0,0,1/3),xlim=c(0,43),

pch=16,xlab="Ages Years",ylab="Residuals")

abline(h=0.0,col=1,lty=2) # emphasize the zero line

Figure 4.9: The residual values for von Bertalanffy curve fitted to the female LatA data. There is a clear pattern between the ages of 3 - 10, which reflects the nature of residuals when the mean expected length for a given age is constant and compared to these rounded length measurements.

In the plots of the growth data (Figure(4.8) and Figure(4.9)) the grid-like nature of the data is a clear indication of the lengths being measured to the nearest centimeter, and the rounding of the ages to the lowest whole year. Such rounding on both the x and y axes is combined with the issue that we are fitting these models with a classical y-on-x approach (Ricker, 1973), and that assumes there is no variation on the measurements taken on the x-axis, but unfortunately, with relation to ages, this assumption is simply false. Essentially we are treating the variables of length and age as discrete rather than continuous, and the ageing data as exact with no error. These patterns would warrant further exploration but also serve to emphasize that when dealing with data from the living world, it is difficult to collect and generally we are dealing with less than perfect information. The real trick when modelling in fisheries and real world ecology is to obtain useful and interesting information from that less than perfect data, and do so in a defensible manner.

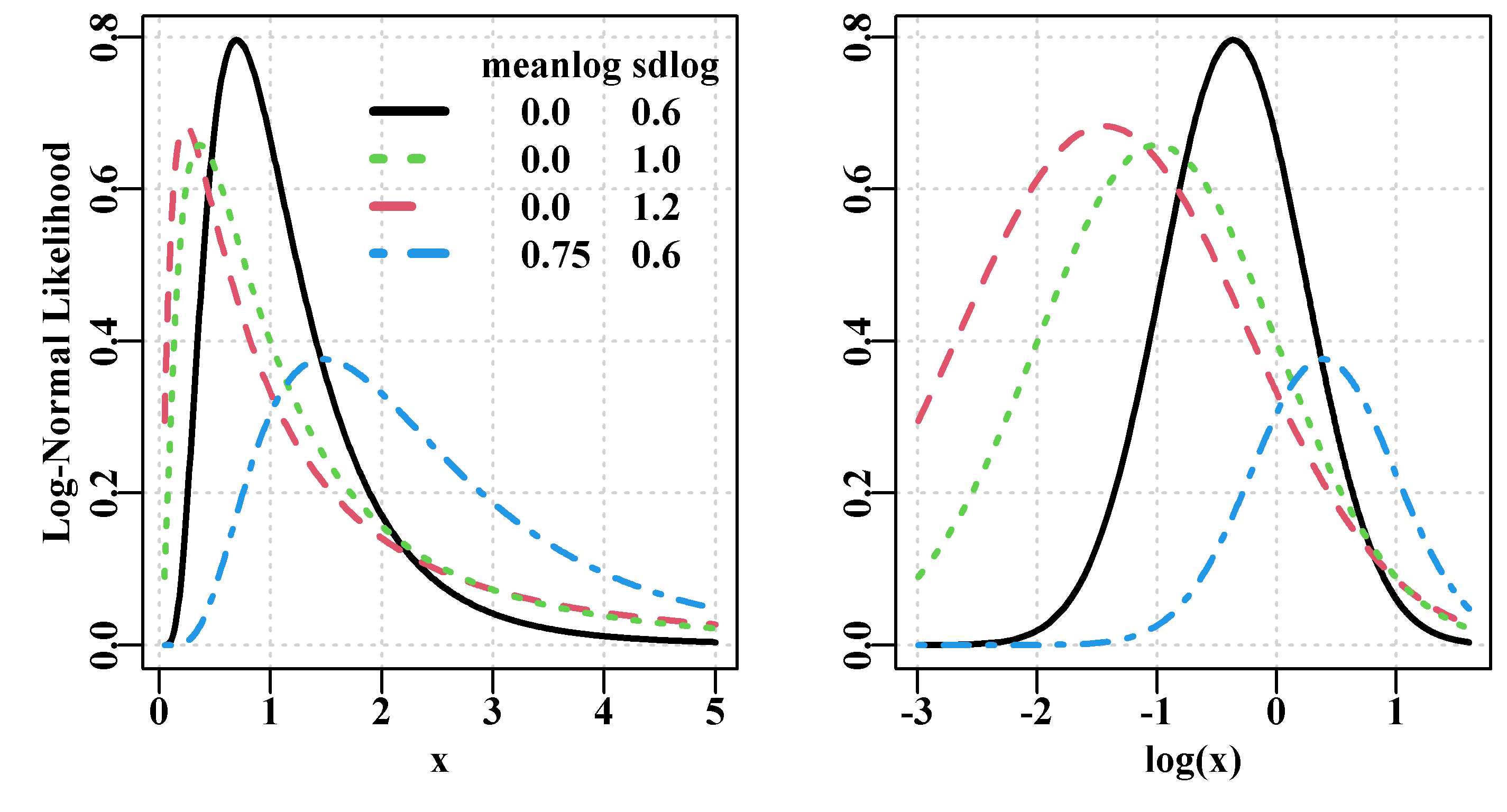

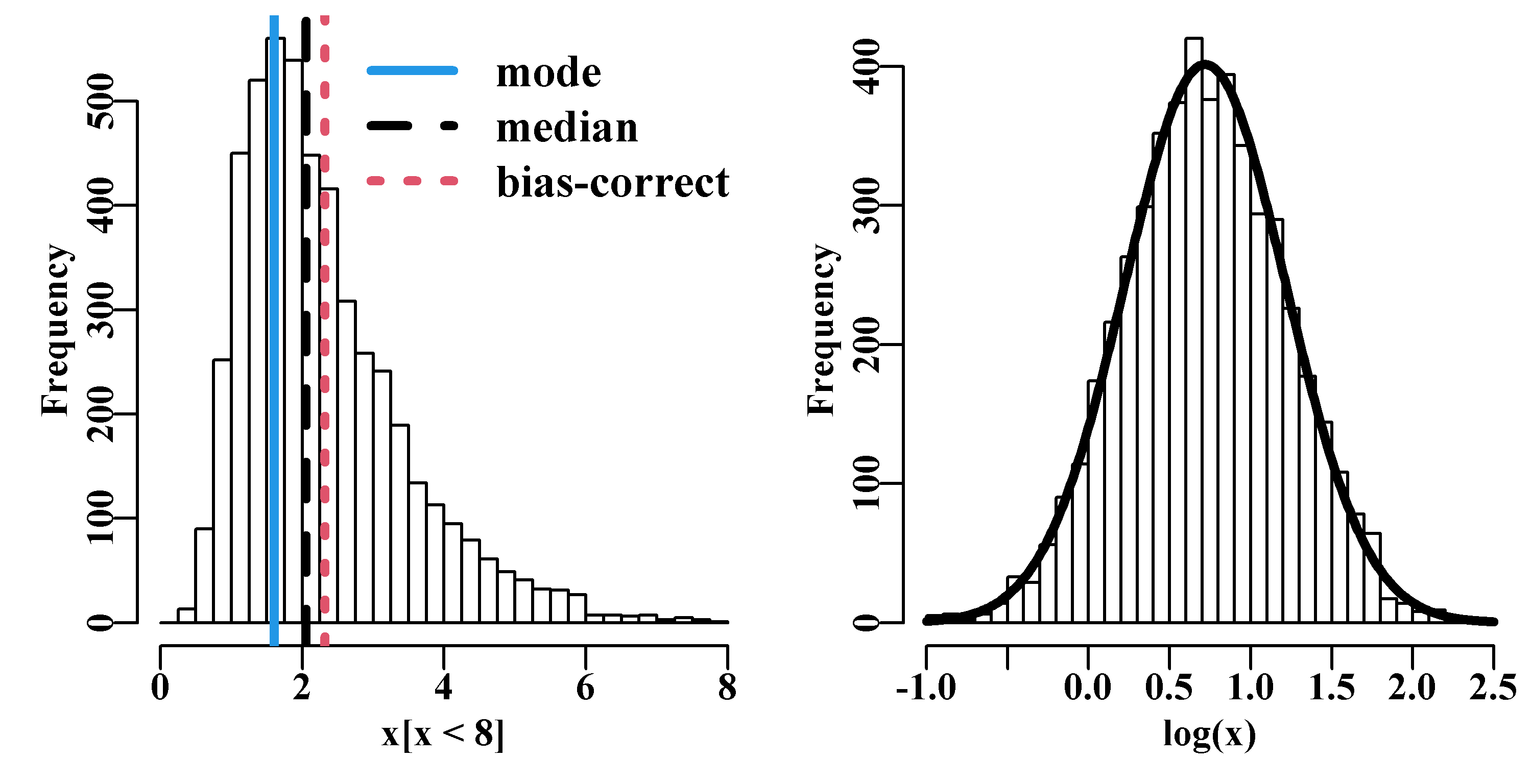

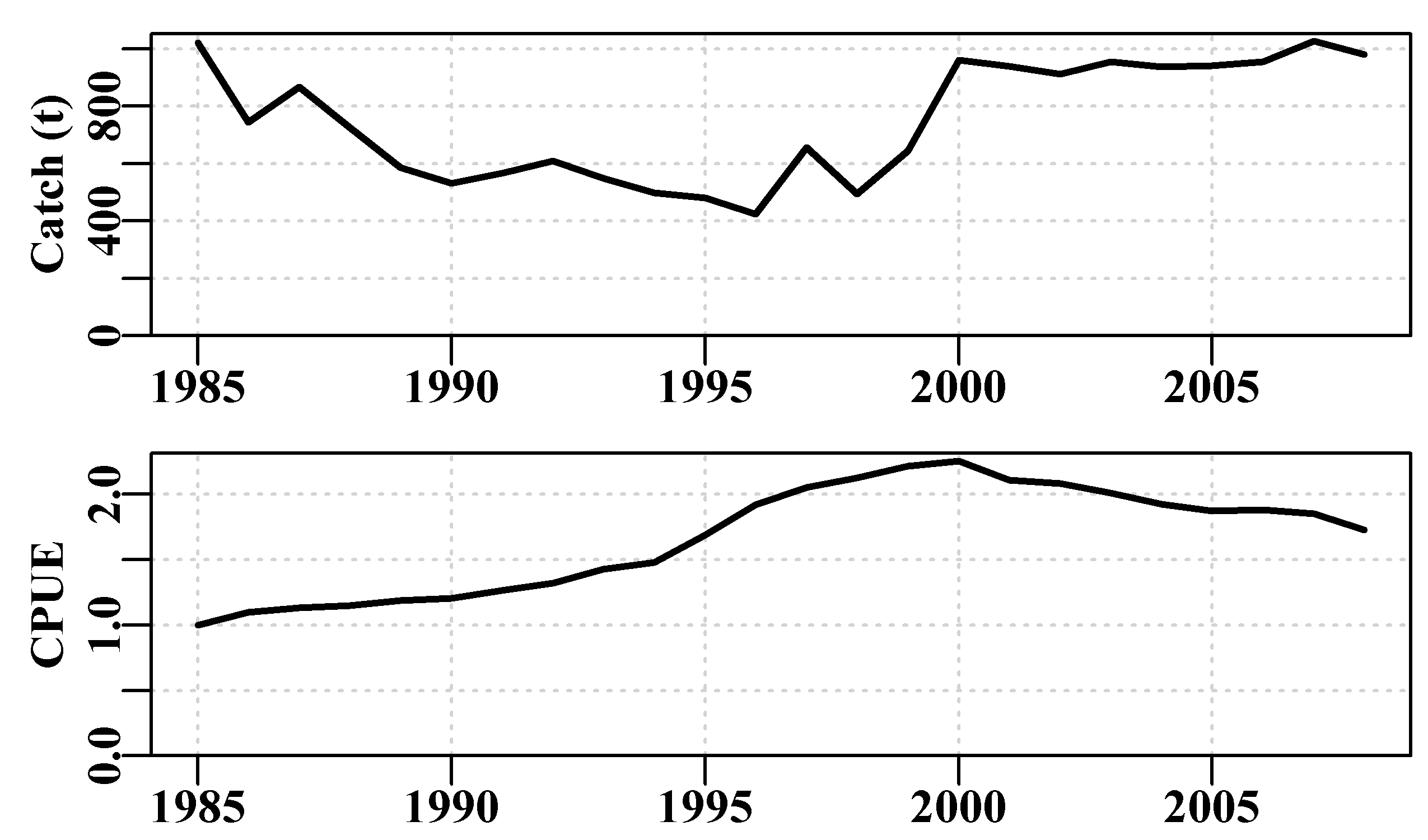

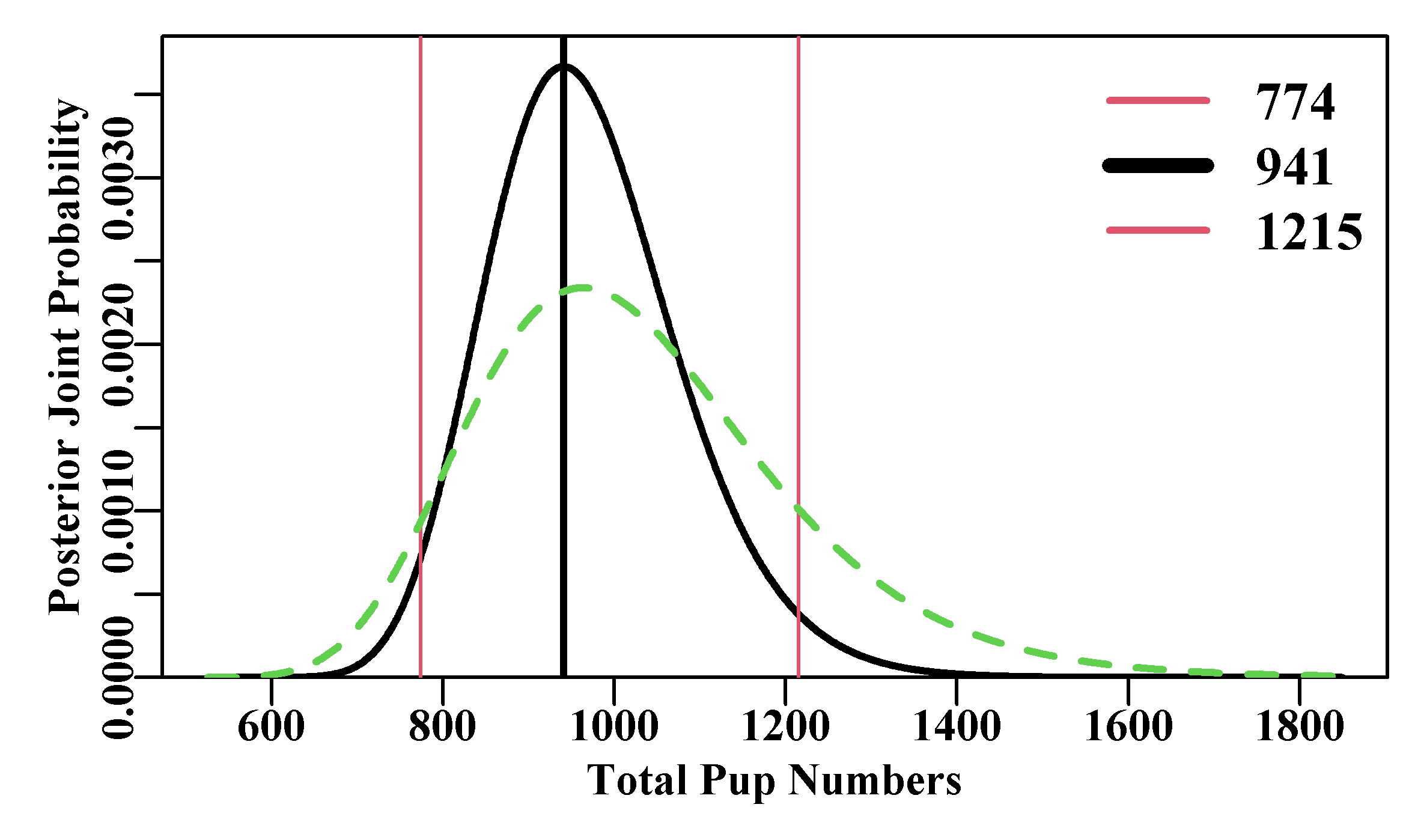

4.7 Log-Normal Likelihoods